Explore Vultr Cloud GPU Variants

Introduction

Vultr offers a range of NVIDIA Cloud GPU server options that suit many different scenarios and support the development of next-generation applications. For example, you can build production-ready Machine Learning and Artificial Intelligence applications on a GPU server that matches your requirements. Below are the 5 NVIDIA GPU options that you can deploy using your Vultr account.

- NVIDIA A100

- NVIDIA A40

- NVIDIA A16

- NVIDIA H100

- NVIDIA L40S

This article explains the available NVIDIA Vultr Cloud GPU options and compares the A100, A40, A16, H100, and L40S models based on real-world use cases.

GPU Specifications and Performance Overview

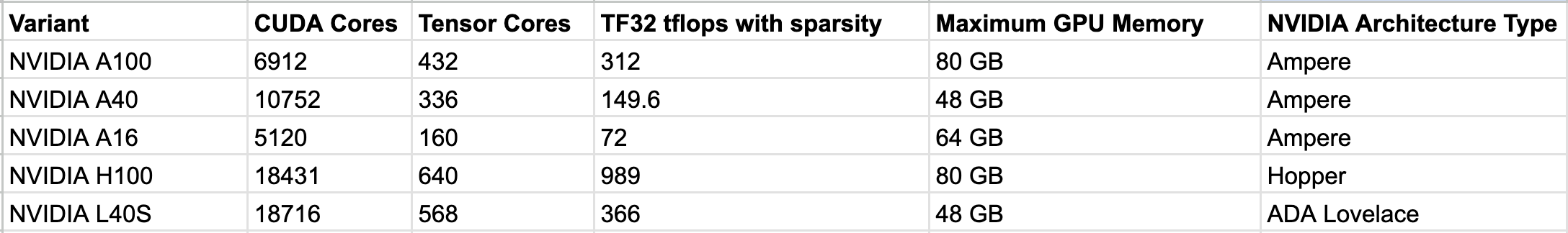

NVIDIA GPU technical and performance data differ based on the number of CUDA Cores, TFLOPS performance, and parallel processing capabilities. Below are the specifications, limits, and architecture types of the different Vultr Cloud GPU models:

Key Terms

CUDA Cores: These are processing units designed to work with the NVIDIA CUDA programming model. They play a fundamental role in parallel processing and speed up computing tasks that range from graphics rendering to general-purpose computation. They follow a Single Instruction, Multiple Threads (SIMT) execution model, which is closely related to Single Instruction, Multiple Data (SIMD): a single instruction executes simultaneously on multiple data elements, which results in high throughput in parallel computing.

Tensor Cores: These are hardware components designed to speed up matrix-based computations commonly used in deep learning and artificial intelligence workloads. Tensor Cores perform the mathematical operations involved in neural network training and inference by taking advantage of mixed-precision computing. They keep result accuracy high by multiplying values in a lower precision and accumulating the results in a higher precision.

TFLOPS: Also known as TeraFLOPS, it measures the performance of a system in trillions of floating-point operations per second. Floating-point operations are mathematical calculations on numbers with decimal points. It's a useful indicator when comparing the capabilities of different hardware components. High-performance computing applications like simulations rely heavily on TFLOPS.
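To make the TFLOPS figure more concrete, the following minimal sketch estimates a theoretical peak from the number of CUDA Cores and the clock speed. The function name `peak_tflops` is hypothetical, and the example values (6912 CUDA Cores and a 1.41 GHz boost clock for the NVIDIA A100) are assumptions taken from NVIDIA's public datasheet rather than from this article. The sketch also assumes each core completes one fused multiply-add, counted as 2 floating-point operations, per clock cycle.

```python
def peak_tflops(cuda_cores: int, boost_clock_ghz: float,
                flops_per_core_per_cycle: int = 2) -> float:
    """Estimate theoretical peak throughput in TFLOPS.

    Assumes every core retires one fused multiply-add (2 FLOPs) per cycle,
    which is an upper bound that real workloads rarely reach.
    """
    # cores * GHz * FLOPs-per-cycle gives GFLOPS; divide by 1000 for TFLOPS
    gflops = cuda_cores * boost_clock_ghz * flops_per_core_per_cycle
    return gflops / 1000.0


# Assumed A100 figures from NVIDIA's public datasheet (not from this article)
a100_fp32 = peak_tflops(cuda_cores=6912, boost_clock_ghz=1.41)
print(f"Estimated A100 FP32 peak: {a100_fp32:.2f} TFLOPS")
```

The result is a ceiling, not a benchmark. Memory bandwidth, precision mode, and Tensor Core usage all change the throughput that an application actually reaches.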

Best-Suited GPU Use Cases

Each NVIDIA Cloud GPU has unique characteristics that make it the best model for specific use cases. Below are real-world use cases and scenarios for each GPU, showing how each model's capabilities can match your needs across a wide variety of computational applications:

NVIDIA A100

The NVIDIA A100 Cloud GPU model best suits the following use cases:

- Deep Learning: The A100 suits the training and inference of deep learning models due to its high computational power. It's also used for tasks like image recognition, natural language processing, and autonomous driving applications.

- Scientific Simulations: It runs complex scientific simulations such as weather forecasting, climate modeling, and physics and chemistry workloads.

- Medical Research: The model accelerates tasks related to medical imaging to offer faster and more accurate diagnosis. It also assists in molecular modeling used in drug discovery.

Visit the following resources to implement any of the above use cases on your NVIDIA A100 Vultr Cloud GPU server.

- NVIDIA A100 documentation

- Inference Llama 2 on Vultr Cloud with NVIDIA A100

- How to use Meta Llama 2 Large Language Model (LLM) on a Vultr Cloud GPU Server

- How to use Hugging Face Transformer Models on a Vultr Cloud GPU Server

- AI Generated Images with OpenJourney and Vultr Cloud GPU

NVIDIA A40

The Vultr NVIDIA A40 GPU model best suits the following use cases:

- Virtualization and Cloud Computing: A40 allows swift resource sharing for tasks such as Virtual Desktop Infrastructure (VDI), gaming-as-a-service, and cloud-based rendering.

- Professional Graphics: A40 handles the most demanding graphics tasks in professional applications such as 3D modeling and Computer-Aided Design (CAD). It enables fast processing of high-quality visuals and real-time rendering scenarios.

- AI-Powered Analytics: A40 enables fast decision-making by applying AI and machine learning to heavy data loads. For example, an organization that needs to improve its analytics pipeline can use the model to develop an efficient, AI-powered solution.

To implement NVIDIA A40 solutions on your server, visit the following resources:

NVIDIA A16

The Vultr NVIDIA A16 GPU model best suits the following use cases:

- Multimedia Streaming: A16 handles real-time interactivity and multimedia streaming. Gaming-focused users can leverage its capability for better responsiveness and low latency, which enables a smooth and immersive gaming experience. Platforms can deliver high-quality streams and perform fast video transcoding tasks.

- Workplace Virtualization: A16 enables Virtual Applications (vApps) that help maximize productivity and performance in comparison to traditional setups. It best handles remote work implementations.

- Virtual Remote Desktops: Remote virtual desktops perform efficiently and fast using the NVIDIA A16 model. Depending on your setup, you can deploy a Linux virtual desktop server or a Windows Server to make use of the model's performance.

- Video Encoding: A16 accelerates resource-intensive video encoding tasks such as converting videos from one format to another. For example, when converting an .mp4 video to .mov.
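As an illustration of the video encoding use case, the following sketch builds an FFmpeg command that converts an .mp4 file to .mov using the GPU's hardware encoder. It assumes FFmpeg is installed on the server and was built with NVIDIA NVENC support, which this article does not confirm. The helper name `build_nvenc_command` and the file name `input.mp4` are hypothetical. The sketch only builds and prints the command so that you can review it before running it.

```python
import shlex
from pathlib import Path


def build_nvenc_command(src: str, container: str = "mov") -> list[str]:
    """Build an FFmpeg command that re-encodes video on the GPU.

    Assumes an FFmpeg build with NVENC support (hypothetical setup).
    """
    # Keep the same file name and swap only the extension
    dst = str(Path(src).with_suffix(f".{container}"))
    return [
        "ffmpeg", "-y",
        "-hwaccel", "cuda",    # decode on the GPU where possible
        "-i", src,
        "-c:v", "h264_nvenc",  # NVIDIA hardware H.264 encoder
        "-c:a", "copy",        # pass the audio stream through unchanged
        dst,
    ]


cmd = build_nvenc_command("input.mp4")
print(shlex.join(cmd))
```

To run the conversion, pass the list to `subprocess.run(cmd, check=True)` on a server where the `ffmpeg` binary and the NVIDIA drivers are available.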

To implement solutions on your Vultr NVIDIA A16 model, visit the following resources:

- Getting started with NVIDIA A16

- NVIDIA A16 documentation for more extensive data and research.

- How to Use Vultr Broadcaster with Owncast to Livestream

- How to Use Vultr's Broadcaster Marketplace App

- Set up a Transcoding Server with Handbrake and Vultr Cloud GPU

NVIDIA H100 Tensor Core

The Vultr NVIDIA H100 Tensor Core GPU model best suits the following use cases:

- High Performance Computing: H100 focuses on high performance computing applications. It handles trillion-parameter language models and, using the NVIDIA Hopper architecture, can speed up Large Language Models by up to 30 times over previous generations.

- Medical Research: Its DPX instruction processing capabilities make it well-suited for tasks like genome sequencing and protein simulations.

To implement solutions on your NVIDIA H100 Tensor Core instance, visit the following resources:

NVIDIA L40S

The Vultr NVIDIA L40S GPU model best suits the following use cases:

- Generative AI: NVIDIA L40S supports generative AI tasks by delivering end-to-end acceleration and helping users develop next-generation AI-enabled applications. It handles inference and training as well as 3D graphics workloads. It's also suitable for deploying and scaling multiple workloads at once.

To leverage the power of your NVIDIA L40S instance, visit the following resources:

Conclusion

In this article, you discovered the core technical differences between the Vultr NVIDIA GPU options. You also explored common use cases for each GPU model that you can adopt for your next solution on a Vultr Cloud GPU server.