Fine-Tuning Llama 2 | Generative AI Series

Introduction

Fine-tuning involves updating an AI model with new data to adapt the model to a specific task. You can fine-tune a model using:

- The retrieval augmented generation (RAG) technique. This method involves tapping real-time data sources like MySQL/PostgreSQL database to generate factual results.

- The labeled dataset technique: This method uses a smaller labeled task-specific dataset to optimize the model's performance.

Consider the Llama 2 language Models (LLMs) with over 70 billion parameters. The model is trained on vast volumes of data to perform different tasks. However, with time, the model's data can become outdated.

To make the LLama 2 model generate relevant results for queries, you can fine-tune the model with either the RAG or labeled dataset techniques. Each method has its pros and cons, as discussed in this article.

In this guide, you'll auto-tune the LLama 2 model with a new ad generation labeled dataset. You'll then push the new model to your Hugging Face account and use it to perform ad-generation tasks using the Vultr cloud GPU server.

Prerequisites

Before you begin:

Deploy a new Ubuntu 22.04 A100 Vultr Cloud GPU Server with at least:

- 80 GB GPU RAM

- 12 vCPUs

- 120 GB Memory

Create a non-root user with

sudorights and switch to the account.Create a Hugging Face user access token with

WRITEpermissions.

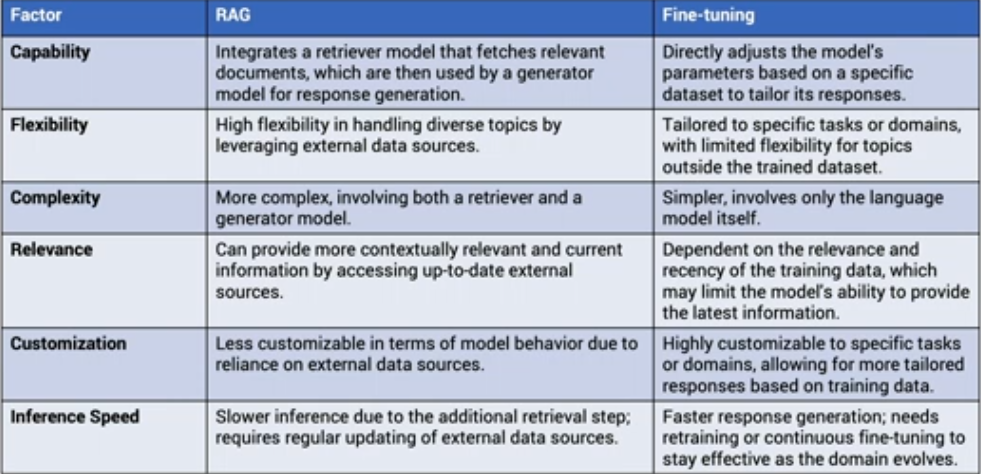

Compare RAG With Labelled Datasets

The RAG technique is more applicable when using structured data while fine-tuning a model using labeled datasets works well with unstructured data. The following table highlights the key differences between the two.

Install the Required Dependencies

To fine-tune and use the LLama 2 model with Python, follow the steps below to install the required libraries:

Install the

huggingface_hubandautotrain-advancedlibraries.console$ pip install huggingface_hub autotrain-advanced

Add the libraries to the system path.

console$ echo "export PATH="`python3 -m site --user-base`/bin:\$PATH"" >> ~/.bashrc source ~/.bashrc

Implement the AutoTrain Tool

The Hugging Face AutoTrain tool allows you to train natural language processing (NLP) models like Llama 2 for specific tasks. The tool accepts different custom parameters in a user-friendly format without spending too much time on the technical details.

In this section, you'll feed an ad copy generation dataset to the AutoTrain tool to fine-tune the meta-llama/Llama-2-7b-chat-hf model. Then, you'll upload the newly trained model to your Hugging Face account and later run the model using a Docker container to retrieve fine-tuned results.

Prepare the New Model's Dataset

The AutoTrain tool accepts the following specific data format for training the Llama 2 model:

<s>[INST] <<SYS>>

{{system message}}

<</SYS>>

{{message}} [/INST] {{answer}} </s>

Hugging Face provides a hub for various datasets that adhere to the above format. You can use these datasets to fine-tune your model. For instance, for this guide, you'll use the ad copy generation labeled dataset to fine-tune your model. Here are sample rows from the dataset.

<s>[INST] <<SYS>> Create a text ad given the following product and description. <</SYS>> Product: Wide-leg trousers Description: Pants with a loose and flowing fit, creating an elongated and sophisticated silhouette. [/INST] Ad: Introducing our Wide-Leg Trousers: Effortlessly chic and endlessly versatile. Discover a flattering, comfortable fit that creates an elegant, elongated silhouette. Elevate your style today! </s>

...

<s>[INST] <<SYS>> Create a text ad given the following product and description. <</SYS>> Product: Electric Food Chopper Description: Chop ingredients effortlessly with an Electric Food Chopper - designed for quick and efficient meal prep. [/INST] Ad: Chop, prep, and conquer your kitchen tasks! 🌶️🌟 Simplify your meal preparation. Perfect for busy cooks and making cooking a breeze with a touch of electric food chopper efficiency! 🌟🍳🍛 </s>

Fine-tune the Llama 2 Model

Follow the steps below to launch and use the AutoTrain tool:

Log in to your Hugging Face account.

console$ huggingface-cli login

Enter your Hugging Face access

tokenand press Enter to proceed. Then, press N and Enter to skip adding your accesstokenas agitcredential.console... Login successful

Initialize the following custom environment variables. Replace

YOUR_HUGGING_FACE_ACCESS_TOKENandYOUR_HUGGING_USERNAMEwith the correct values. Thesmangrul/ad-copy-generationparameter points to the ad copy generation labeled dataset discussed earlier.console$ export model=meta-llama/Llama-2-7b-chat-hf export data_path=smangrul/ad-copy-generation export text_column=content export token=YOUR_HUGGING_FACE_ACCESS_TOKEN export project_name=demo-ads export repoid=YOUR_HUGGING_FACE_USERNAME/ads-llama2-demo

Run the following command to train the

Llama-2-7b-chat-hfmodel with the new dataset. The following command takes several minutes to complete.console$ autotrain llm --train --project_name $project_name --model $model --data_path $data_path --text_column $text_column --use_peft --use_int4 --learning_rate 2e-4 --train_batch_size 2 --num_train_epochs 1 --trainer sft --block_size 1024 --lora_r 16 --lora_alpha 32 --merge-adapter --lora_dropout 0.045 --push-to-hub --token $token --repo-id $repoid

Output:

... > INFO Finished training, saving model... > INFO Merging adapter weights... > INFO Loading adapter... ...Visit your Hugging Face profile page to ensure you've trained and pushed the new model to the repository.

https://huggingface.co/YOUR_HUGGING_FACE_USERNAMEOutput:

Serve the Custom Auto-trained Model

You've fine-tuned and pushed the model to your Hugging Face account. Follow the steps below to run the model in a Docker container:

Initialize the following system variables. Replace

YOUR_HUGGING_FACE_USERNAMEandYOUR_HUGGING_FACE_TOKENwith the correct details.console$ export model=YOUR_HUGGING_FACE_USERNAME/ads-llama2-demo export volume=$PWD/data export token=YOUR_HUGGING_FACE_TOKEN

Run the following Docker command to run a container that serves the new model.

console$ sudo docker run -d --name hf-tgi --runtime=nvidia --gpus all -e HUGGING_FACE_HUB_TOKEN=$token -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.1.0 --model-id $model --max-input-length 2048 --max-total-tokens 4096

Output:

1.1.0: Pulling from huggingface/text-generation-inference ... Status: Downloaded newer image for ghcr.io/huggingface/text-generation-inference:1.1.0Check the Docker logs to monitor the container as it loads the new model.

console$ sudo docker logs -f hf-tgi

Output:

... Warming up model Setting max batch total tokens to ..... ...Connected ...Invalid hostname, defaulting to 0.0.0.0Allow port

8890through the firewall. You need this port to run a Jupyter lab instance.console$ sudo ufw allow 8890 $ sudo ufw reload

Run the Jupyter lab instance to retrieve an access

token.console$ jupyter lab --ip 0.0.0.0 --port 8890

Output:

http://YOUR_SERVER_HOST_NAME:8890/lab?token=b7ab2bdscb366edsddssfsff0faeb5fa68b6b0cfAccess the Jupyter Notebook on your browser.

http://YOUR_SERVER_HOST_NAME:8890/lab?token=b7ab2bdscb366edsddssfsff0faeb5fa68b6b0cfClick Python 3 ipykernel under Notebook. Then, enter the following Python code.

pythonfrom huggingface_hub import InferenceClient URI = 'http://127.0.0.1:8080' client = InferenceClient(model = URI) def chat_completion(system_prompt, user_prompt, length = 1000): final_prompt = f"""<s>[INST]<<SYS>> {system_prompt}<</SYS>> {user_prompt} [/INST] """ return client.text_generation(prompt = final_prompt,max_new_tokens = length).strip() system_prompt = """ Create a text ad given the following product and description. """ user_prompt = """ Product: Lightweight Bicycle Description: Perfect bike for any road conditions. this bike comes with durable tires, LCD display, and modern disc brakes """ print(chat_completion(system_prompt, user_prompt))

Run the above Python code to generate an ad based on your input.

Output:

🚴♂️🌟 Experience the thrill of cycling with a Lightweight Bicycle! 🌟🚴♂️ Perfect for road adventures, this bike boasts durable tires, LCD display, and modern disc brakes. Limited stock - ride in style! 🚴♂️🌟

Conclusion

In this guide, you've explored the difference between fine-tuning a model using RAG and labeled datasets to improve the model's accuracy. Then, you've implemented the HuggingFace AutoTrain tool to fine-tune the model with a new dataset. Finally, you've run the fine-tuned model in a Docker container to generate an ad based on a custom product description.