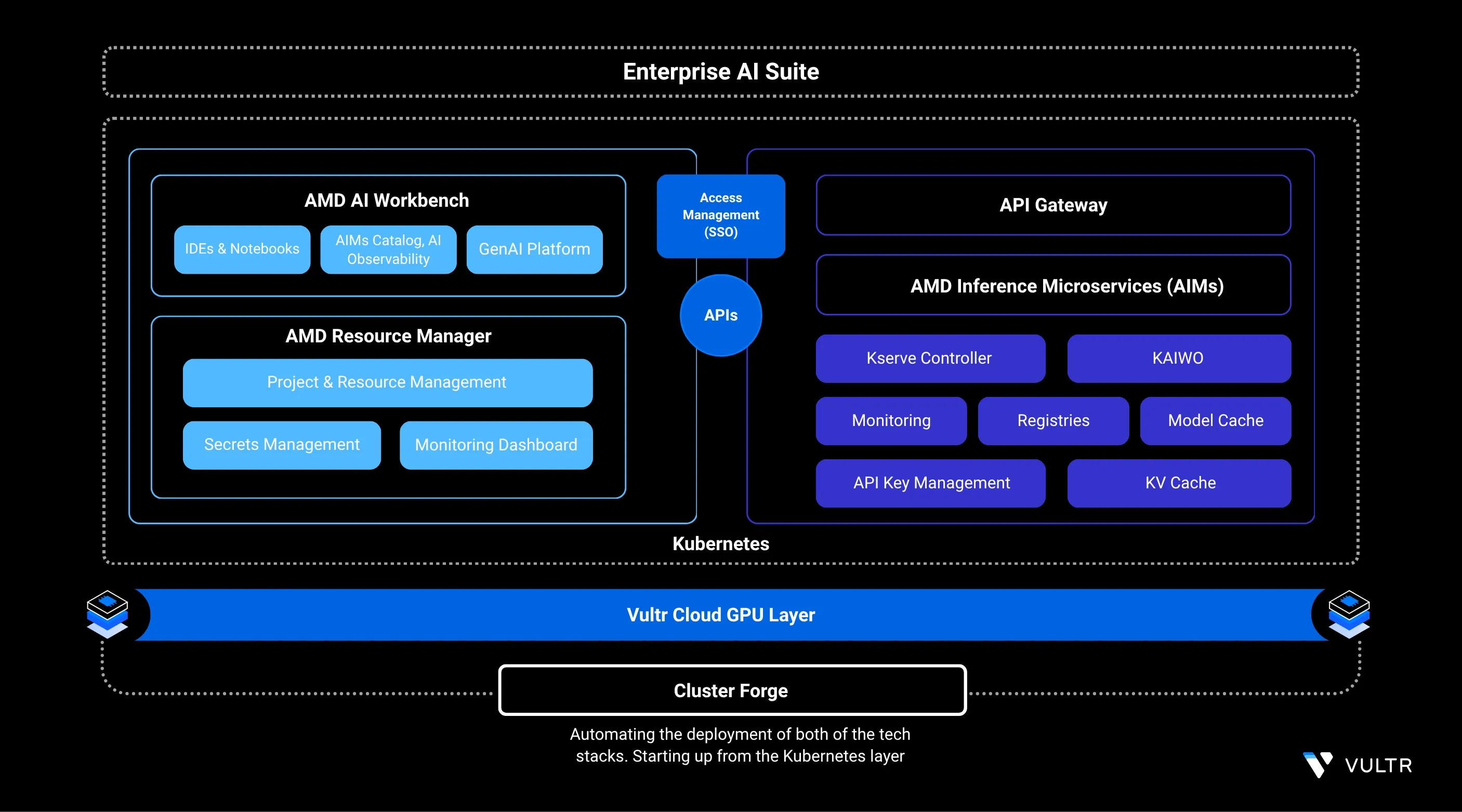

AMD Enterprise AI Suite is a complete platform, tuned for AMD hardware, for building, deploying, and running AI workloads on Kubernetes. System administrators, platform teams, AI researchers, and developers working on AI solutions can all use it.

This guide explains the platform's core components for AI compute: AMD AI Workbench, AMD Resource Manager, Kubernetes AI Workload Orchestrator (Kaiwo), the Kubernetes Platform, Cluster Forge, and AMD Inference Microservices (AIMs). It then walks you through deploying the platform on Vultr Cloud GPU infrastructure with AMD Instinct™ MI300X GPUs.

Prerequisites

Before you begin, ensure you:

- Have access to an AMD Instinct™ MI300X GPU.

- Have a 2TB Block Storage volume attached for workloads.

- Have created a wildcard DNS A record (for example, `*.amd-ai-suite.example.com`) pointing to your server's public IP address.

Replace `amd-ai-suite.example.com` in this guide with the domain or subdomain you selected for installation.
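Before you install, you can confirm that the wildcard record resolves for the hostnames the platform uses. The following Python sketch is a hypothetical pre-flight helper, not part of the AMD tooling: `check_wildcard_dns` resolves a few sample subdomains (taken from the platform hostnames used later in this guide) with the standard library and reports any name that does not point at the expected IP address. The `resolve` parameter exists only so the function can be tested without real DNS.

```python
import socket
from typing import Callable, Dict, Iterable, Optional


def check_wildcard_dns(
    domain: str,
    expected_ip: str,
    labels: Iterable[str] = ("airmui", "kc", "argocd"),
    resolve: Callable[[str], str] = socket.gethostbyname,
) -> Dict[str, Optional[str]]:
    """Hypothetical helper: return {hostname: resolved IP or None} for every
    sample hostname that does NOT resolve to expected_ip.

    An empty dict means every checked name points at expected_ip."""
    problems: Dict[str, Optional[str]] = {}
    for label in labels:
        host = f"{label}.{domain}"
        try:
            ip: Optional[str] = resolve(host)  # IPv4 lookup (A record)
        except OSError:
            ip = None  # the name did not resolve at all
        if ip != expected_ip:
            problems[host] = ip
    return problems
```

For example, `check_wildcard_dns("amd-ai-suite.example.com", "203.0.113.10")` returns an empty dictionary once the wildcard record points at `203.0.113.10` (a placeholder for your server's public IP).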

Key Components of the Platform

AMD AI Workbench: Simplifies running fine-tuning, inference, and other jobs, and offers low-code approaches for developing AI applications so researchers can manage their AI workloads. With a comprehensive model catalog and integrations with MLOps tools such as MLflow, TensorBoard, and Kubeflow, AMD AI Workbench lets researchers use their AI development tools efficiently.

AMD Resource Manager: Helps organizations control and optimize how users and teams access GPUs, data, and compute resources. It improves GPU utilization through fair scheduling and shared access, while offering dashboards to monitor usage across projects and departments.

Kubernetes AI Workload Orchestrator (Kaiwo): Enhances GPU efficiency by reducing idle time through intelligent scheduling. It manages AI job placement using a Kubernetes operator and supports features like multiple queues, fair sharing, quotas, and topology-aware scheduling to run workloads more effectively.

Kubernetes Platform: Serves as the core container orchestration layer that powers the deployment, scaling, and management of AI workloads. It provides the flexibility and reliability needed for tasks ranging from training large models to running production inference.

Cluster Forge: Simplifies the setup of a production-ready AI platform by automating the deployment of Kubernetes control and compute planes. It integrates open-source tools and packaged AI workloads, enabling teams using AMD hardware to get started within hours.

AMD Inference Microservices (AIMs): Streamlines the process of serving AI and LLM models by automatically selecting optimal runtime settings based on the model, hardware, and user inputs. Its expanding catalog of prebuilt microservices makes deploying inference workloads fast and efficient.
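To make "fair sharing" more concrete, the following Python sketch shows a generic max-min fair split of whole GPUs across queues. It illustrates the general idea only; it is not the actual scheduling algorithm used by Kaiwo or the Resource Manager, and the queue names and demands are hypothetical. GPUs are handed out one at a time, round-robin, to every queue whose demand is not yet met, so small requests are satisfied in full and the remainder is split evenly among the larger ones.

```python
from typing import Dict


def max_min_fair_share(total_gpus: int, demands: Dict[str, int]) -> Dict[str, int]:
    """Generic illustration (not Kaiwo's algorithm): split total_gpus across
    queues by round-robin water-filling.

    Each pass gives one GPU to every queue that still wants more, until the
    GPUs run out or every demand is satisfied."""
    allocation = {queue: 0 for queue in demands}
    remaining = total_gpus
    while remaining > 0:
        # Queues whose demand is not yet satisfied, in insertion order.
        hungry = [q for q in demands if allocation[q] < demands[q]]
        if not hungry:
            break  # every demand is met; leftover GPUs stay unassigned
        for queue in hungry:
            if remaining == 0:
                break
            allocation[queue] += 1
            remaining -= 1
    return allocation


if __name__ == "__main__":
    # Hypothetical queues competing for a pool of 8 GPUs.
    print(max_min_fair_share(8, {"research": 2, "fine-tune": 4, "inference": 6}))
```

With 8 GPUs and demands of 2, 4, and 6, the small `research` queue gets its full 2 GPUs and the other two queues get 3 each. When GPUs cannot be split evenly, this sketch favors queues earlier in the dictionary; a real scheduler would also weigh quotas, priorities, and topology.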

Deploy AMD Enterprise AI Platform

In this section, you deploy the AMD Enterprise AI Platform using the Bloom installer. You download and prepare the Bloom binary, configure the required YAML settings, and launch the browser-based installation interface over a secure SSH tunnel. After reviewing and confirming the configuration in the installer UI, you trigger the full platform deployment.

Download the official `bloom` binary. Visit the GitHub releases page to get the latest version.

```console
$ wget https://github.com/silogen/cluster-bloom/releases/download/v1.2.2/bloom
```

Make the `bloom` binary executable.

```console
$ chmod +x bloom
```

Create the Bloom configuration file.

```console
$ nano bloom.yaml
```

Add the following content to the file. Replace `amd-ai-suite.example.com` with your domain and `/dev/vdb1` with the block device attached to your server. Visit the ClusterForge GitHub releases page to find the URL of the latest release package for `CLUSTERFORGE_RELEASE`.

```yaml
DOMAIN: amd-ai-suite.example.com
OIDC_URL: https://kc.amd-ai-suite.example.com/realms/airm
FIRST_NODE: true
GPU_NODE: true
CERT_OPTION: generate
USE_CERT_MANAGER: true
CLUSTER_DISKS: /dev/vdb1
CLUSTERFORGE_RELEASE: https://github.com/silogen/cluster-forge/releases/download/v1.5.2/release-enterprise-ai-v1.5.2.tar.gz
NO_DISKS_FOR_CLUSTER: false
```

In the above configuration:

- DOMAIN: Sets the base domain the platform uses.

- OIDC_URL: Defines the Keycloak authentication URL for the airm realm.

- FIRST_NODE: Marks this server as the initial node in the cluster.

- GPU_NODE: Enables GPU capabilities for this node.

- CERT_OPTION: Defines how certificates are created (`generate` = auto-generate).

- USE_CERT_MANAGER: Enables Cert-Manager for managing TLS certificates.

- CLUSTER_DISKS: The disk or partition the cluster uses for storage.

- CLUSTERFORGE_RELEASE: URL of the ClusterForge package required for installation.

- NO_DISKS_FOR_CLUSTER: Indicates whether the cluster should run without disks (`false` = use the disk listed above).
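Before you run the installer, you can sanity-check `bloom.yaml` for the relationships described above. The following Python sketch is a hypothetical helper, not part of Bloom. It parses the flat `KEY: value` layout used in this guide with the standard library only (it is not a general YAML parser), then checks that the required keys exist, that the boolean flags are `true` or `false`, that `OIDC_URL` follows this guide's `https://kc.<DOMAIN>/realms/airm` pattern, and that `CLUSTER_DISKS` names a `/dev/` device when `NO_DISKS_FOR_CLUSTER` is `false`. The required-key list reflects this guide's example, not Bloom's own validation rules.

```python
from typing import Dict, List

# Assumption: keys taken from this guide's example bloom.yaml.
REQUIRED_KEYS = ["DOMAIN", "OIDC_URL", "FIRST_NODE", "GPU_NODE", "CLUSTER_DISKS",
                 "CLUSTERFORGE_RELEASE", "NO_DISKS_FOR_CLUSTER"]
BOOLEAN_KEYS = ["FIRST_NODE", "GPU_NODE", "USE_CERT_MANAGER", "NO_DISKS_FOR_CLUSTER"]


def parse_flat_config(text: str) -> Dict[str, str]:
    """Parse simple 'KEY: value' lines, skipping blanks and '#' comments.

    Splits on the first ':' only, so URL values keep their 'https:' part."""
    config: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition(":")
        if sep:
            config[key.strip()] = value.strip()
    return config


def check_bloom_config(config: Dict[str, str]) -> List[str]:
    """Hypothetical pre-flight check: return a list of problems (empty = none found)."""
    problems = [f"missing key: {k}" for k in REQUIRED_KEYS if k not in config]
    for key in BOOLEAN_KEYS:
        if key in config and config[key] not in ("true", "false"):
            problems.append(f"{key} must be true or false, got {config[key]!r}")
    domain = config.get("DOMAIN", "")
    expected_oidc = f"https://kc.{domain}/realms/airm"
    if domain and config.get("OIDC_URL") != expected_oidc:
        problems.append(f"OIDC_URL does not match the expected {expected_oidc}")
    if (config.get("NO_DISKS_FOR_CLUSTER") == "false"
            and not config.get("CLUSTER_DISKS", "").startswith("/dev/")):
        problems.append("CLUSTER_DISKS must name a /dev/ device when NO_DISKS_FOR_CLUSTER is false")
    return problems
```

To use it, read the file and print any findings, for example `print(check_bloom_config(parse_flat_config(open("bloom.yaml").read())))`. An empty list means the sketch found nothing to flag.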

Start the installation process.

```console
$ sudo ./bloom --config bloom.yaml
```

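This command launches a local installation interface at `http://127.0.0.1:62078`. Because the interface listens only on the server's loopback address, you cannot reach it directly over the internet.

On your local machine, open an SSH tunnel to access the installation UI.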

```console
$ ssh -L 62078:127.0.0.1:62078 USERNAME@SERVER-IP
```

Replace `USERNAME` with your server username and `SERVER-IP` with your server's public IP address. Keep this SSH session open while you use the installer.

Open the installation UI in your browser at `http://localhost:62078`.

Follow the instructions in the web interface and review any configuration options that require changes.

After you finalize the configuration, click Generate Configuration & Start Installation to begin the deployment.

Note: The deployment usually takes 20 minutes to finish.
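If `http://localhost:62078` does not load, first confirm that the SSH tunnel is listening on your local machine. The following Python sketch, a hypothetical troubleshooting helper, attempts a TCP connection to the local end of the tunnel using only the standard library. A `False` result means nothing is listening on the local port, which usually indicates that the `ssh -L` session is not running. A `True` result only shows that the tunnel is listening; it does not prove that the installer on the server is up.

```python
import socket


def port_is_open(host: str = "127.0.0.1", port: int = 62078, timeout: float = 2.0) -> bool:
    """Hypothetical helper: True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    state = "listening" if port_is_open() else "not listening"
    print(f"Local tunnel port 62078 is {state}")
```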

Configure SSL and Access the Resource Manager UI

In this section, you configure a wildcard Let's Encrypt SSL certificate so you can access the Resource Manager UI securely. The UI enforces HTTPS, and without a valid TLS certificate, you cannot open the interface.

You can use either of the following SSL methods:

A Let's Encrypt wildcard SSL certificate for your domain.

A SAN-based SSL certificate that includes these subdomains:

- airmui.amd-ai-suite.example.com

- airmapi.amd-ai-suite.example.com

- argocd.amd-ai-suite.example.com

- gitea.amd-ai-suite.example.com

- kc.amd-ai-suite.example.com

- longhorn.amd-ai-suite.example.com

- minio.amd-ai-suite.example.com

- openbao.amd-ai-suite.example.com

Replace `amd-ai-suite.example.com` with the domain or subdomain you selected for your installation.
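Either option works because a wildcard certificate matches exactly one DNS label in place of the `*`: `*.amd-ai-suite.example.com` covers `airmui.amd-ai-suite.example.com`, but not the bare domain or deeper names such as `a.b.amd-ai-suite.example.com`. The following Python sketch is a simplified illustration of that matching rule (it ignores partial-label wildcards and internationalized names). The hypothetical `uncovered_hosts` function reports which of the subdomains listed above a given set of certificate names fails to cover.

```python
from typing import Iterable, List

# Subdomains the platform serves, taken from the list above.
PLATFORM_LABELS = ["airmui", "airmapi", "argocd", "gitea", "kc",
                   "longhorn", "minio", "openbao"]


def name_matches(cert_name: str, host: str) -> bool:
    """True if one certificate name (exact, or a leading '*.' wildcard) covers host."""
    cert_name, host = cert_name.lower().rstrip("."), host.lower().rstrip(".")
    if cert_name.startswith("*."):
        # The wildcard stands for exactly one leftmost label.
        suffix = cert_name[1:]  # e.g. ".amd-ai-suite.example.com"
        label, dot, rest = host.partition(".")
        return bool(label) and dot == "." and "." + rest == suffix
    return cert_name == host


def uncovered_hosts(cert_names: Iterable[str], domain: str) -> List[str]:
    """Hypothetical helper: platform hostnames that none of cert_names covers."""
    names = list(cert_names)
    hosts = [f"{label}.{domain}" for label in PLATFORM_LABELS]
    return [h for h in hosts if not any(name_matches(n, h) for n in names)]
```

A wildcard certificate returns an empty list, while a SAN certificate must list all eight hostnames explicitly to do the same.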

Generate a Wildcard Let's Encrypt SSL Certificate

In this section, you generate a Let's Encrypt wildcard SSL certificate for your domain using certbot.

Update your package index and install certbot.

```console
$ sudo apt update && sudo apt install certbot -y
```

Generate a wildcard certificate.

```console
$ sudo certbot certonly --manual --preferred-challenges dns -d '*.amd-ai-suite.example.com'
```

Note: Creating a wildcard SSL certificate requires domain ownership verification. Certbot can automate this process using DNS plugins, but only for supported DNS providers. The `--manual` method works with any DNS provider, but requires you to create a `TXT` record manually.

Certbot displays output similar to:

```
Please deploy a DNS TXT record under the name:

_acme-challenge.amd-ai-suite.example.com.

with the following value:

zyuf8RXatvvwgPFH-gqj.......................
```

From the output, copy the record name and value, then open your DNS panel and create a `TXT` record using those values. After the DNS record propagates, press ENTER to continue domain validation.

Output:

```
Successfully received certificate.
Certificate is saved at: /etc/letsencrypt/live/amd-ai-suite.example.com/fullchain.pem
Key is saved at: /etc/letsencrypt/live/amd-ai-suite.example.com/privkey.pem
```

Note the certificate and key paths from the output; you use them when you create the TLS secret.
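For context, the TXT value certbot asks you to publish is derived rather than random. Under the ACME DNS-01 challenge (RFC 8555, section 8.4), it is the unpadded base64url encoding of the SHA-256 digest of the challenge's key authorization (the challenge token, a dot, and the base64url-encoded thumbprint of your ACME account key). For a wildcard name, the record goes under `_acme-challenge.` plus the base domain with the `*.` removed. The following Python sketch reproduces both calculations with the standard library; the function names are hypothetical, and the token and thumbprint in the example are placeholders, so the output will not match what certbot prints for your account.

```python
import base64
import hashlib


def b64url(data: bytes) -> str:
    """Base64url encoding without '=' padding, as ACME uses."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def dns01_record_name(domain: str) -> str:
    """TXT record name for a (possibly wildcard) domain: drop '*.' first."""
    base = domain[2:] if domain.startswith("*.") else domain
    return f"_acme-challenge.{base}"


def dns01_txt_value(token: str, account_key_thumbprint: str) -> str:
    """TXT value: base64url(SHA-256(token + '.' + thumbprint)).

    account_key_thumbprint is expected to be base64url-encoded already."""
    key_authorization = f"{token}.{account_key_thumbprint}"
    return b64url(hashlib.sha256(key_authorization.encode("ascii")).digest())


if __name__ == "__main__":
    # Placeholder token and thumbprint (hypothetical values).
    print(dns01_record_name("*.amd-ai-suite.example.com"))
    print(dns01_txt_value("example-token", "example-thumbprint"))
```

Because the value depends on a fresh token for each attempt, certbot may ask for a new TXT value if you rerun the command.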

Create a TLS Secret and Access the Resource Manager

In this section, you create a Kubernetes TLS secret that the HTTPS gateway uses to serve your wildcard certificate. After applying the certificate and restarting the gateway, you access the AMD Enterprise AI Resource Manager UI and sign in using the default credentials to complete the initial login process.

Create the TLS secret.

```console
$ kubectl create secret tls cluster-tls \
    -n kgateway-system \
    --key /etc/letsencrypt/live/amd-ai-suite.example.com/privkey.pem \
    --cert /etc/letsencrypt/live/amd-ai-suite.example.com/fullchain.pem
```

Note: Ensure your user has permission to read the certificate files. If not, prepend `sudo` to the command. If you run the command with `sudo`, ensure the root user has access to Kubernetes credentials (via `/root/.kube/config` or a system-wide configuration).

Verify that Kubernetes created the secret successfully.

```console
$ kubectl get secret/cluster-tls -n kgateway-system
```

You should see the `cluster-tls` secret listed in the output.

Restart the HTTPS gateway so it loads the new certificate.

```console
$ kubectl rollout restart deployment/https -n kgateway-system
```
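The `kubectl create secret tls` command above stores the certificate chain and private key in a Secret of type `kubernetes.io/tls`, under the `tls.crt` and `tls.key` data keys, each base64-encoded. If you prefer to keep the Secret as a manifest, the following Python sketch builds an equivalent JSON manifest with the standard library. Both functions and the `cluster-tls.json` output filename are hypothetical; reading the files under `/etc/letsencrypt/live/` typically requires root.

```python
import base64
import json
from pathlib import Path


def tls_secret_manifest(name: str, namespace: str, cert_pem: bytes, key_pem: bytes) -> dict:
    """Build a kubernetes.io/tls Secret equivalent to `kubectl create secret tls`."""
    def b64(data: bytes) -> str:
        return base64.b64encode(data).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/tls",
        "data": {"tls.crt": b64(cert_pem), "tls.key": b64(key_pem)},
    }


def write_manifest_from_letsencrypt(domain: str, out_file: str = "cluster-tls.json") -> None:
    """Hypothetical helper: read certbot's files for domain and write the manifest."""
    live = Path("/etc/letsencrypt/live") / domain
    manifest = tls_secret_manifest(
        "cluster-tls", "kgateway-system",
        (live / "fullchain.pem").read_bytes(),
        (live / "privkey.pem").read_bytes(),
    )
    Path(out_file).write_text(json.dumps(manifest, indent=2))
```

For example, run `write_manifest_from_letsencrypt("amd-ai-suite.example.com")` as root, then apply the result with `kubectl apply -f cluster-tls.json`. The file contains your private key, so restrict its permissions and keep it out of version control.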

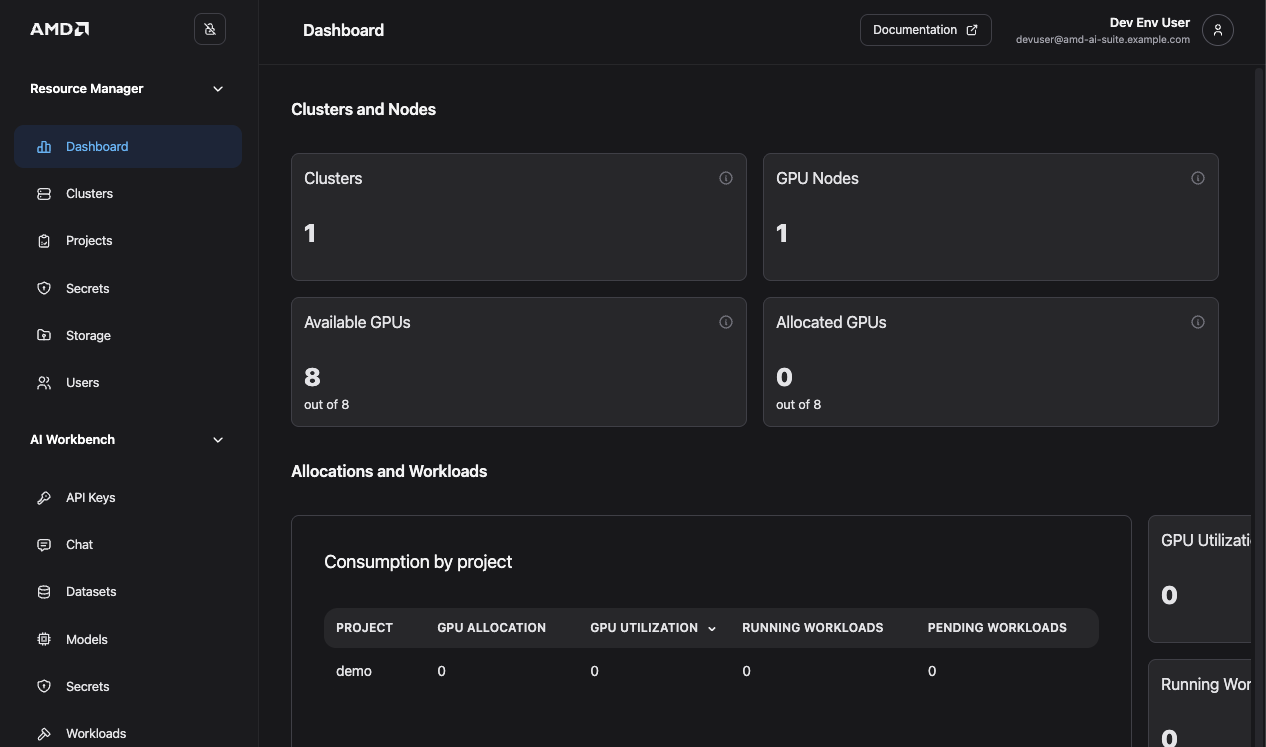

After the restart completes, open the AMD Enterprise AI Resource Manager dashboard at `https://airmui.amd-ai-suite.example.com`.

Click Sign in with Keycloak. The dashboard redirects you to the Keycloak login page.

Enter the following default credentials. Replace `amd-ai-suite.example.com` with the domain you configured.

- Username: `devuser@amd-ai-suite.example.com`

- Password: `password`

After you log in, the system prompts you to reset the default password. Enter a strong alphanumeric password twice to secure your account.


After you reset the password, the dashboard loads and displays the AMD Resource Manager interface.
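If you need a strong alphanumeric password for the reset step, the following Python sketch generates one with the standard library's `secrets` module, which is intended for security-sensitive randomness. The function name, the 20-character default length, and the rule that the result contains at least one lowercase letter, one uppercase letter, and one digit are assumptions; check them against your organization's password policy and any password policy configured for the Keycloak realm.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits


def generate_password(length: int = 20) -> str:
    """Hypothetical helper: random alphanumeric password with lower, upper, and digit chars."""
    if length < 3:
        raise ValueError("length must be at least 3")
    while True:
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        # Rejection sampling: retry until every character class is present.
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)):
            return candidate


if __name__ == "__main__":
    print(generate_password())
```

Store the generated password in a password manager before you submit the reset form.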

Key Features of the Platform

- Optimized GPU utilization and lower operational costs: Intelligent scheduling maximizes GPU usage, reduces waste, and lowers overall compute costs.

- Unified AI infrastructure: Brings all AI tools and environments together into a single, consistent platform for easier collaboration and governance.

- Accelerated time-to-production: Built-in microservices and streamlined workflows help teams move AI models into production faster.

- AI-native workload orchestration: Purpose-built scheduling and inference services ensure efficient, high-performance execution of AI workloads on AMD Instinct™ GPUs.

Conclusion

By following this guide, you deployed the AMD Enterprise AI Platform on Vultr using AMD Instinct™ GPUs and the Bloom installer. You also explored the platform's core components (AI Workbench, Resource Manager, Kaiwo, Cluster Forge, and AIMs) and learned how they work together to deliver a unified, scalable, and high-performance AI infrastructure.