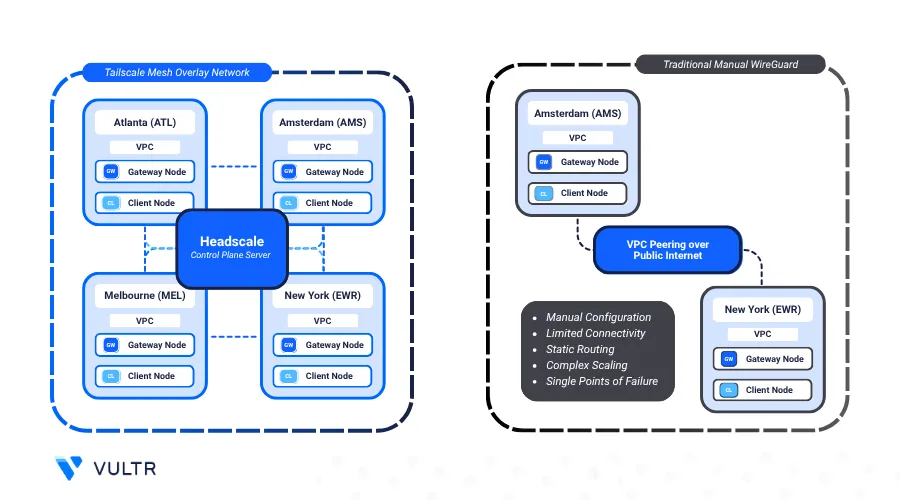

Headscale is an open source, self-hosted implementation of the Tailscale control server. Tailscale is a mesh VPN (Virtual Private Network) service that simplifies securely connecting devices across different networks. It creates encrypted point-to-point connections using the WireGuard protocol, so only devices enrolled in the private network can communicate with each other over it.

Unlike traditional VPN implementations that tunnel all network traffic through a central gateway server, Tailscale creates a peer-to-peer mesh network. This allows seamless private connections between Vultr regions with minimal configuration.
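A full mesh connects every pair of sites directly. As a worked count, assuming one gateway node per region as in this guide, n gateways need at most the following number of gateway-to-gateway paths:

$$
P(n) = \binom{n}{2} = \frac{n(n-1)}{2}, \qquad P(5) = \frac{5 \cdot 4}{2} = 10
$$

With the five regions used later in this guide, that is up to 10 paths, each carrying traffic directly between two regions instead of through a central server, provided a direct WireGuard connection can be established (otherwise Tailscale falls back to relays).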

This guide provides step-by-step instructions to establish a private connection between Vultr regions using Headscale. The implementation uses Terraform and Ansible to automate the manual steps.

Prerequisites

Before you begin, you need to:

- Have access to a Linux-based instance as a non-root user with sudo privileges.

- Install Terraform CLI.

- Install Ansible CLI.

- Install Git CLI.

Peer Vultr Regions Using Headscale

Tailscale with Headscale lets you self-host a mesh VPN for on-premises-to-cloud, site-to-site, cloud-to-cloud, and similar topologies. This guide sets up a site-to-site mesh: each site is a Gateway Node in a distinct Vultr region, attached to that region's Virtual Private Cloud (VPC), which exposes the Client Nodes in each datacenter to those in the other datacenters over a private network.

Components

Headscale Control Server: Self-hosted control server managing Tailscale nodes and coordinating secure connections.

Virtual Private Cloud: Isolated network in each Vultr region to establish a private connection between gateway and client nodes.

Gateway Node: A tailnet peer (Tailscale client) in each Vultr region that joins the mesh network across multiple datacenters.

Client Nodes: Represent an example service in each Vultr region, such as a database server in Bangalore that serves as the backend for a REST API server in Paris.

FRRouting: Propagates tailnet routes from gateway to client nodes for cross-datacenter service connectivity.
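The gateway role described above corresponds to running Tailscale as a subnet router. The following dry-run sketch prints, rather than executes, the commands that role typically needs: enabling IPv4 forwarding and running `tailscale up` against Headscale with `--login-server`, a pre-auth key (`--authkey`), the region's VPC subnet in `--advertise-routes`, and `--accept-routes` to install the other regions' subnets. The function name, URL, and key are hypothetical placeholders, and the repository's Cloud-Init template may use different flags.

```shell
# Hypothetical dry-run helper: print (not run) the commands a gateway node
# would use to join Headscale as a subnet router.
# $1 = Headscale URL, $2 = pre-auth key, $3 = this region's VPC subnet (CIDR)
gateway_join_cmds() {
  # A subnet router must forward packets between the tailnet and the VPC.
  echo "sysctl -w net.ipv4.ip_forward=1"
  # --login-server points the client at Headscale instead of Tailscale's
  # hosted control plane; --advertise-routes offers the VPC subnet to the
  # mesh; --accept-routes installs subnets advertised by other gateways.
  echo "tailscale up --login-server=$1 --authkey=$2 --advertise-routes=$3 --accept-routes"
}

# Example with placeholder values for the Atlanta gateway.
gateway_join_cmds https://headscale.example.com EXAMPLE_PREAUTH_KEY 10.2.1.0/24
```

On Headscale, advertised subnet routes generally have to be approved on the control server (or matched by an auto-approver policy) before other peers use them; the exact CLI command differs between Headscale versions.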

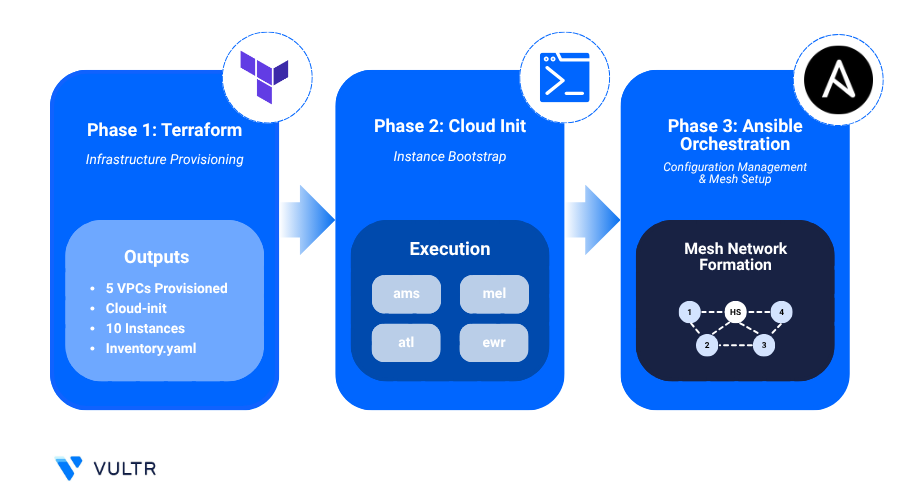

Automation With Terraform, Ansible, and Cloud-Init

This implementation is automated using Terraform, Ansible, and Cloud-Init, enabling consistent, repeatable deployments across Vultr regions with minimal configuration changes.

Terraform: Defines the entire infrastructure, including the VPCs, gateway and client nodes, and the control server.

Ansible: Automates post-provisioning tasks, including configuring the control server and the gateway and client nodes, and setting up routing rules.

Cloud-Init: Installs Tailscale on first boot, joins the Headscale coordination node, and configures FRR to enable dynamic route propagation across the VPC in each Vultr region.

Startup Script: A reusable script for Ubuntu or RHEL that automates installation and mesh onboarding, simplifying scaling across regions. It is useful for manually enrolling a new peer from a different region.
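Supporting both Ubuntu and RHEL usually comes down to detecting the distribution family before choosing a package manager. The following is a minimal sketch of that check, assuming the script reads the standard `ID` and `ID_LIKE` fields from `/etc/os-release`; the function name and the apt/dnf mapping are illustrative and not taken from the repository's script.

```shell
# Hypothetical helper: map /etc/os-release fields to a package manager.
# $1 = ID (e.g. "ubuntu"), $2 = ID_LIKE (e.g. "debian" or "rhel centos fedora")
detect_pkg_manager() {
  # Pad with spaces so each pattern matches whole words only.
  case " $1 $2 " in
    *" debian "*|*" ubuntu "*) echo apt ;;
    *" rhel "*|*" fedora "*|*" centos "*) echo dnf ;;
    *) echo unsupported; return 1 ;;
  esac
}
```

On a target instance, run it in a subshell so the sourced variables do not leak: `( . /etc/os-release && detect_pkg_manager "$ID" "${ID_LIKE:-}" )`.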

Provision a Private Network Mesh Between Vultr Regions

This section covers the steps to provision the required infrastructure using the Terraform manifests and to configure the instances post-deployment using the Ansible playbook provided in the code-samples GitHub repository.

The following are the regions selected for peering in this example.

- Atlanta (atl)
- Melbourne (mel)
- Bangalore (blr)
- New York (ewr)
- Amsterdam (ams)

You may add or remove regions based on your requirements.

Clone the GitHub repository.

```console
$ git clone https://github.com/vultr-marketing/code-samples.git
$ cd code-samples/vpc-peering/vultr-regions
```

Edit the terraform.tfvars file.

```console
$ nano terraform.tfvars
```

This file is pre-populated with the Headscale region set to Amsterdam, an instance plan from the Vultr Optimized Cloud Compute (CPU Optimized) offering, and Tailscale instances in 5 regions. Adjust the configuration to suit your requirements.

You must set your Vultr API key so that Terraform can provision the cloud resources.

```hcl
vultr_api_key    = "YOUR_API_KEY"
headscale_region = "ams"
user_scheme      = "limited"
instance_plan    = "voc-c-2c-4gb-75s-amd"
tailscale_instances = [
  {
    region      = "atl"
    subnet      = "10.2.1.0"
    subnet_mask = 24
  },
  ...
  {
    region      = "ams"
    subnet      = "10.2.5.0"
    subnet_mask = 24
  }
]
```

Note: The following command uses cURL to fetch all Vultr regions along with their identifiers from the public API.

```console
$ curl -X GET -s https://api.vultr.com/v2/regions | jq '.regions[] | "\(.id) - \(.city), \(.country)"'
```
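Because FRR propagates each region's VPC subnet across the mesh, every subnet in tailscale_instances should be unique and non-overlapping; otherwise the routes become ambiguous. A minimal POSIX shell sketch to check this before running terraform apply is shown below. The function names are hypothetical and not part of the repository, and the check assumes IPv4 blocks written as subnet/subnet_mask (for example, 10.2.1.0/24 from the tfvars entry for atl).

```shell
# Hypothetical helpers for a pre-flight check of the tfvars subnets.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
  # Runs in a subshell, so changing IFS here does not leak to the caller.
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Print the first and last address (as integers) covered by a CIDR block.
cidr_range() (
  addr=$(ip_to_int "${1%/*}")
  size=$(( 1 << (32 - ${1#*/}) ))
  first=$(( addr - addr % size ))
  echo "$first $(( first + size - 1 ))"
)

# Succeed (exit 0) when two CIDR blocks share at least one address.
cidr_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Compare every pair of CIDR blocks; report the first overlap and fail.
check_subnets() {
  for a in "$@"; do
    shift
    for b in "$@"; do
      if cidr_overlap "$a" "$b"; then
        echo "overlap: $a and $b" >&2
        return 1
      fi
    done
  done
}
```

For example, `check_subnets 10.2.1.0/24 10.2.5.0/24 && echo "subnets OK"`, adding the subnets of the remaining regions from your terraform.tfvars.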

Initialize the Terraform working directory.

```console
$ terraform init
```

Review the execution plan.

```console
$ terraform plan
```

Apply the manifests.

```console
$ terraform apply
```

Output:

```
Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value:
```

When prompted, type yes to approve and apply the configuration. Provisioning may take up to 15 minutes to complete.

When provisioning completes, you'll see output similar to the following.

```
client_summary = {
  "ams" = {
    "private_ip" = "10.2.5.4"
    "public_ip" = "192.0.1.2"
    "subnet" = "10.2.5.0/24"
  }
  ...
  "atl" = {
    "private_ip" = "10.2.1.4"
    "public_ip" = "192.0.1.3"
    "subnet" = "10.2.1.0/24"
  }
}
headscale_ip = "192.0.1.1"
summary = {
  "ams" = {
    "private_ip" = "10.2.5.3"
    "public_ip" = "192.0.1.4"
    "subnet" = "10.2.5.0/24"
  }
  ...
  "atl" = {
    "private_ip" = "10.2.1.3"
    "public_ip" = "192.0.1.5"
    "subnet" = "10.2.1.0/24"
  }
}
```

Navigate to the Ansible directory.

```console
$ cd ansible
```

Note: A new inventory.yml file is created in this directory. It is auto-generated from the Terraform output and lists the public IPs of all the gateway nodes for the Ansible configuration tasks.

Run the Ansible playbook to configure the private connection mesh.

```console
$ ansible-playbook playbook.yml
```

This playbook uses the generated inventory.yml to identify instances and configures the Headscale and Tailscale nodes, creating a fully connected private mesh network.

Note: To manually add more clients to the mesh network through the VPC, select the VPC network and the Startup Script named headscale-mesh-init-script while provisioning a new instance. The script currently supports only Debian- and RHEL-based distributions. For other distributions, you can manually configure FRR or add static routes to integrate them into the private mesh network.
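For a distribution the script does not support, static routes are the simplest option. The sketch below prints one ip route add command per remote region subnet, pointing at the local gateway node's VPC private IP. It assumes the client sits in the same VPC as that gateway and that the summary block in the sample Terraform output lists the gateway nodes (for example, the ams gateway at 10.2.5.3 and the atl subnet 10.2.1.0/24); the function name is hypothetical.

```shell
# Hypothetical helper: print static routes that send traffic for every remote
# region subnet to the local gateway node's VPC private IP.
# $1 = local VPC subnet, $2 = local gateway private IP, rest = all mesh subnets
static_routes() {
  local_subnet=$1
  gateway_ip=$2
  shift 2
  for subnet in "$@"; do
    # The local subnet is reached directly over the VPC, so skip it.
    if [ "$subnet" != "$local_subnet" ]; then
      echo "ip route add $subnet via $gateway_ip"
    fi
  done
}

# Example: a client in Amsterdam (10.2.5.0/24) whose gateway is 10.2.5.3.
static_routes 10.2.5.0/24 10.2.5.3 10.2.1.0/24 10.2.5.0/24
```

Review the printed commands, then run them with sudo on the client. Routes added this way do not persist across reboots, so use your distribution's network configuration to make them permanent.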

Test and Benchmark the Private Network Mesh

After deployment, verify that all regions are connected through the WireGuard-based mesh managed by Headscale. Use Ansible to benchmark both private and public paths, measuring latency, bandwidth, and overall mesh performance.

Run the provided Ansible playbook to execute iperf3 and ping tests between regions over both private and public IPs.

```console
$ ansible-playbook network_tests.yml
```

Note: The network tests may take 5-10 minutes to complete, depending on the number of regions being tested.

After completion, the Ansible playbook creates a network_test_results/ directory containing the following result files:

- network_results.txt: Merged output of all tests, including latency and bandwidth over public and private paths.
- public_results_table.txt: Tabular summary of region-to-region performance over the public internet.
- private_results_table.txt: Tabular summary of private mesh performance via Headscale.
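To spot-check a single region pair outside the playbook, you can run ping yourself and extract the average round-trip time. The following is a minimal sketch, assuming the summary line format printed by Linux iputils ping (rtt min/avg/max/mdev = ...) or BSD ping (round-trip min/avg/max/stddev = ...); the function name is hypothetical.

```shell
# Hypothetical helper: read ping output on stdin and print the average RTT (ms).
ping_avg_ms() {
  # Splitting on "/" puts the avg value in field 5 of the summary line, e.g.
  # "rtt min/avg/max/mdev = 98.1/98.4/98.9/0.2 ms".
  awk -F'/' '/^(rtt|round-trip)/ { print $5 }'
}
```

For example, from another region's client, `ping -c 10 10.2.1.4 | ping_avg_ms` measures latency to the Atlanta client from the sample Terraform output. For throughput, start `iperf3 -s` on the remote node and run `iperf3 -c <ip>` locally; add `-R` to test the reverse direction.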

Review the test results for the public internet performance between nodes.

```console
$ cat network_test_results/public_results_table.txt
```

Your output should be similar to the one below:

```
| From | To | Download (Mbps)| Upload (Mbps) | Latency (ms) |
|------|-----|----------------|---------------|--------------|
| ams | atl | 1626 | 1015 | 98.444 |
| atl | ams | 1615 | 1629 | 98.380 |
...
```

Review the private IP test results to validate the performance over the Tailscale mesh.

```console
$ cat network_test_results/private_results_table.txt
```

Your output should be similar to the one below:

```
| From | To | Download (Mbps)| Upload (Mbps) | Latency (ms) |
|------|-----|----------------|---------------|--------------|
| ams | atl | 142 | 210 | 100.408 |
| atl | ams | 243 | 140 | 100.480 |
...
```

Note: Public tests usually show higher speeds due to direct internet routing and optimized infrastructure. Private mesh tests use encrypted WireGuard tunnels, which add overhead and may reduce throughput. In the sample output above, latency over the mesh stays within about 2 ms of the public path, while throughput is substantially lower.
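To quantify the mesh overhead from the two table files, you can compute private download throughput as a percentage of public download throughput for each region pair. The following is a minimal awk sketch, assuming the pipe-delimited layout shown above with download speed in the third column; the function name is hypothetical.

```shell
# Hypothetical helper: print private download throughput as a percentage of
# public download throughput for each "from to" pair found in both tables.
# $1 = public_results_table.txt, $2 = private_results_table.txt
throughput_ratio() {
  awk -F'|' '
    # Strip leading and trailing spaces from a table cell.
    function trim(s) { sub(/^ +/, "", s); sub(/ +$/, "", s); return s }
    # Skip the header and separator rows: only data rows have a numeric download.
    trim($4) !~ /^[0-9.]+$/ { next }
    # First file (public): remember download Mbps per region pair.
    FNR == NR { pub[trim($2) " " trim($3)] = trim($4) + 0; next }
    # Second file (private): print the ratio when the pair exists and is non-zero.
    {
      key = trim($2) " " trim($3)
      if ((key in pub) && pub[key] > 0)
        printf "%s %.1f%%\n", key, 100 * trim($4) / pub[key]
    }
  ' "$1" "$2"
}
```

Run it from the Ansible directory with `throughput_ratio network_test_results/public_results_table.txt network_test_results/private_results_table.txt`. With the sample rows above, ams to atl comes out at roughly 8.7% of the public download speed.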

Conclusion

This guide demonstrated an automated method for creating a secure, multi-region private network on Vultr using Headscale. With Terraform and Ansible, you can connect distributed services across regions through a scalable, self-hosted mesh VPN that serves as an alternative to traditional gateway-based VPNs.