How to Migrate Amazon Elastic Block Store (EBS) Volumes to Vultr Block Storage

Migrating block storage volumes between cloud providers requires a structured approach to prevent data loss and ensure a smooth transition. Vultr Block Storage is a high-performance storage solution that offers flexible storage options for various workloads. Amazon Elastic Block Store (EBS) volumes are storage disks attached to Elastic Compute Cloud (EC2) instances, whereas Vultr Block Storage volumes attach to Vultr Cloud Compute instances. Because each volume is accessed through the instance it's attached to, you can copy the data between the two instances to a Vultr Block Storage volume of an equal or greater size.

Follow this guide to migrate Amazon Elastic Block Store (EBS) volumes to Vultr Block Storage with little or no downtime for your linked applications.

Prerequisites

Before you begin, you need to:

- Have access to a Linux-based Amazon EC2 instance to use for migration.

- Have an existing Amazon Elastic Block Store volume to migrate and attach it to your EC2 instance.

In a single Vultr location:

- Provision a Vultr Block Storage volume to use as the destination volume, and attach it to the Vultr Cloud Compute instance.

- Provision an Ubuntu Vultr Cloud Compute instance and access it as a non-root sudo user.

Set Up the Migration Environment

Follow the steps below to set up the migration environment by preparing the source Amazon EC2 instance and the destination Vultr Cloud Compute instance. Verify that an Elastic Block Store (EBS) volume is attached to the source instance and a Vultr Block Storage volume is attached to the destination instance. This ensures both volumes are ready for data transfer.

Set Up the Source Amazon EBS Volume

Follow the steps below to access the source EC2 instance and mount the EBS volume if it's not already mounted.

Access your EC2 instance using SSH as a non-root user with sudo privileges.

$ ssh -i ec2-instance.pem user@ec2-ip-address

Replace `ec2-instance.pem` with your key pair file, `user` with your username, and `ec2-ip-address` with the public IP address of the EC2 instance.

List all attached devices and verify the block storage volume name.

$ lsblk

Your output should be similar to the one below:

NAME     MAJ:MIN RM  SIZE RO TYPE MOUNTPOINTS
loop0      7:0    0 26.3M  1 loop /snap/amazon-ssm-agent/9881
loop1      7:1    0 73.9M  1 loop /snap/core22/1722
loop2      7:2    0 73.9M  1 loop /snap/core22/1748
loop3      7:3    0 44.4M  1 loop /snap/snapd/23545
xvda     202:0    0    8G  0 disk
├─xvda1  202:1    0    7G  0 part /
├─xvda14 202:14   0    4M  0 part
├─xvda15 202:15   0  106M  0 part /boot/efi
└─xvda16 259:0    0  913M  0 part /boot
xvdb     202:80   0    8G  0 disk /mnt/data_volume

The above output shows `xvda` as the root volume and `xvdb` as the attached EBS volume. The `xvdb` volume is 8 GB and is mounted at `/mnt/data_volume`.

Amazon EBS volumes follow the `xvd*` naming convention, and the letter increments with each additional volume, such as `xvdc` for the next attached volume. On Nitro-based instance types, EBS volumes appear as NVMe devices such as `/dev/nvme1n1` instead.

Create a new `/mnt/ebs` directory to use as the mount point for the EBS volume. This guide mounts the volume at `/mnt/ebs` even when it's already mounted elsewhere, as in the output above. Alternatively, use the existing mount point in place of `/mnt/ebs` in later commands.

$ sudo mkdir /mnt/ebs

Mount the EBS volume `/dev/xvdb` to the newly created `/mnt/ebs` directory.

$ sudo mount /dev/xvdb /mnt/ebs
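The device identification and conditional mount steps above can be scripted. The following is a minimal POSIX shell sketch that assumes util-linux `lsblk` and GNU `stat`. The names `list_data_disks`, `is_mountpoint`, and `mount_if_needed` are hypothetical helpers, not part of any tool. The sketch lists whole disks that don't hold the root filesystem, then mounts the chosen device only when the target directory isn't already a mount point.

```shell
#!/bin/sh
# Hypothetical helpers (not part of lsblk or mount).

# Print whole disks (TYPE=disk) that don't hold the root (/) filesystem.
# Reads `lsblk -rno NAME,TYPE,PKNAME,MOUNTPOINT` on stdin. Empty raw columns
# collapse when awk splits on whitespace, so fields are read by TYPE:
#   disk lines: NAME disk [MOUNTPOINT]        (disks have no parent)
#   part lines: NAME part PKNAME [MOUNTPOINT]
# Limitation: if / lives on LVM or another stacked device, the root disk
# isn't detected and is listed too.
list_data_disks() {
  awk '
    $2 == "disk" { disks[++n] = $1; if ($3 == "/") root = $1 }
    $2 == "part" && $4 == "/" { root = $3 }
    END { for (i = 1; i <= n; i++) if (disks[i] != root) print "/dev/" disks[i] }
  '
}

# Return 0 if DIR is a mount point: its device ID differs from its parent's,
# or it is / itself (same device and inode as its parent).
# Limitation: a bind mount within one filesystem isn't detected.
is_mountpoint() {
  local self parent
  [ -d "$1" ] || return 1
  self=$(stat -c '%d %i' "$1") || return 1
  parent=$(stat -c '%d %i' "$1/..") || return 1
  [ "${self% *}" != "${parent% *}" ] || [ "$self" = "$parent" ]
}

# Mount DEVICE ($1) on DIR ($2) unless DIR is already a mount point.
mount_if_needed() {
  if is_mountpoint "$2"; then
    echo "$2 is already a mount point; skipping"
    return 0
  fi
  sudo mkdir -p "$2" && sudo mount "$1" "$2"
}

# Usage on the EC2 instance:
#   lsblk -rno NAME,TYPE,PKNAME,MOUNTPOINT | list_data_disks
#   mount_if_needed /dev/xvdb /mnt/ebs
```

The stat-based test is a simplification. If you prefer a tool over a function, util-linux `mountpoint -q DIR` also detects bind mounts.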

Verify the filesystem information of the attached block devices.

$ lsblk -f

Your output should be similar to the one below:

NAME     FSTYPE FSVER LABEL           UUID                                 FSAVAIL FSUSE% MOUNTPOINTS
loop0                                                                            0   100% /snap/amazon-ssm-agent/9881
loop1                                                                            0   100% /snap/core22/1722
loop2                                                                            0   100% /snap/core22/1748
loop3                                                                            0   100% /snap/snapd/23545
xvda
├─xvda1  ext4   1.0   cloudimg-rootfs 104656f3-cbcd-4c1a-bbe2-5bb510be86c0    4.4G    34% /
├─xvda14
├─xvda15 vfat   FAT32 UEFI            534B-8AD0                              98.2M     6% /boot/efi
└─xvda16 ext4   1.0   BOOT            91942573-b0ea-4e42-8692-2a195de77cba  743.5M     9% /boot
xvdb     ext4   1.0                   586003ea-c9a2-493e-937d-f9e02ad90877    7.4G     0% /mnt/ebs
                                                                                          /mnt/data_volume

Based on the above output, the `/dev/xvdb` EBS volume uses the `ext4` filesystem and is mounted at two directories, `/mnt/data_volume` and `/mnt/ebs`. Note the volume's filesystem type so you can format the destination Vultr Block Storage volume with the same type.

Install the Access Control List (ACL) utilities to grant your user read access to the mounted volume.

Install ACL on Debian-based systems (Ubuntu, Debian):

$ sudo apt install acl -y

Install ACL on RHEL-based systems (CentOS, Rocky Linux, AlmaLinux):

$ sudo dnf install acl -y
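If you're not sure which family your EC2 image belongs to, you can derive the command from `/etc/os-release`. This is a minimal sketch. The name `acl_install_cmd` is a hypothetical helper that only prints the command for you to review, and its mapping covers only the two families listed above.

```shell
#!/bin/sh
# Hypothetical helper: print the ACL install command for the distribution
# described by an os-release file (default /etc/os-release).
# Note: Amazon Linux 2 reports ID_LIKE="centos rhel fedora" but ships yum,
# not dnf, so adjust the printed command if you use it.
acl_install_cmd() {
  local file ids
  file=${1:-/etc/os-release}
  [ -r "$file" ] || return 1
  # os-release consists of shell-compatible VAR=value lines, so read ID and
  # ID_LIKE by sourcing the file in a subshell.
  ids=$(. "$file"; echo "${ID:-} ${ID_LIKE:-}")
  case " $ids " in
    *" debian "*|*" ubuntu "*) echo "sudo apt install acl -y" ;;
    *" rhel "*|*" fedora "*|*" centos "*) echo "sudo dnf install acl -y" ;;
    *) echo "unrecognized distribution: $ids" >&2; return 1 ;;
  esac
}

# Usage on the EC2 instance:
#   acl_install_cmd
```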

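Grant your user read access to the `/mnt/ebs` directory using ACLs.

$ sudo setfacl -R -m u:user:rX /mnt/ebs

The above command grants `user` read-only access to the contents of `/mnt/ebs`, where the EBS volume is mounted. Replace `user` with your actual username.

The capital `X` adds execute (search) permission only on directories and on files that are already executable. Your user needs that permission to open anything inside a directory. A plain `r--` entry lets the user list directory names but not read the files inside them.

View the disk usage information and verify the volume's usage ratio.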

$ df -h

Your output should be similar to the one below:

Filesystem      Size  Used Avail Use% Mounted on
/dev/root       6.8G  2.3G  4.5G  35% /
tmpfs           479M     0  479M   0% /dev/shm
tmpfs           192M  944K  191M   1% /run
tmpfs           5.0M     0  5.0M   0% /run/lock
/dev/xvda16     881M   76M  744M  10% /boot
/dev/xvda15     105M  6.1M   99M   6% /boot/efi
tmpfs            96M   12K   96M   1% /run/user/1000
/dev/xvdb       7.8G   24K  7.4G   1% /mnt/ebs

Based on the above output, the source `/dev/xvdb` block storage volume is mounted on the `/mnt/ebs` directory with `1%` usage.

Note: If your EBS volume has multiple partitions, verify each partition's size and filesystem type so you can configure the destination Vultr Block Storage volume to match.
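To confirm that the ACL entry lets your user reach every file before you start the copy, you can list anything the user still can't read. This is a minimal sketch that assumes GNU `find`, which provides `-readable` and `-executable`. The name `list_inaccessible` is a hypothetical helper. Run it as the migration user, not with `sudo`, because root can read every file regardless of ACLs.

```shell
#!/bin/sh
# Hypothetical helper: print files under DIR that the current user can't
# read, and directories the user can't enter (directories need execute,
# that is search, permission). Empty output means everything is accessible.
list_inaccessible() {
  [ -d "$1" ] || { echo "not a directory: $1" >&2; return 1; }
  # "Permission denied" errors are discarded; the affected directories
  # are still printed because they match the tests.
  find "$1" \( ! -readable -o \( -type d ! -executable \) \) -print 2>/dev/null
}

# Usage on the EC2 instance, as the migration user:
#   list_inaccessible /mnt/ebs
```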

Set Up the Destination Vultr Block Storage Volume

Follow the steps below to set up the destination Vultr Block Storage volume and mount it to the Vultr Cloud Compute instance to begin with the data transfer.

Log in to the Vultr Customer Portal.

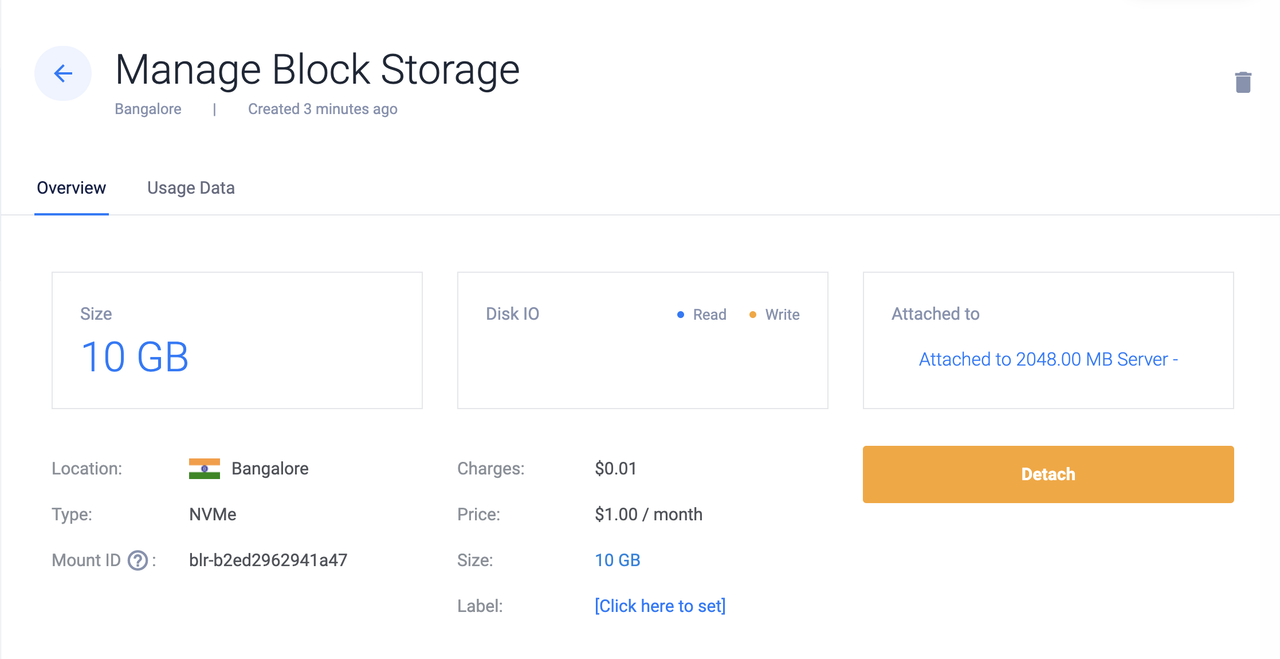

Navigate to the Vultr Block Storage volume's management page and verify that it's attached to your target Vultr Cloud Compute instance.

Access the Vultr Cloud Compute instance using SSH as a non-root sudo user. Replace `Vultr-ip-address` with the actual public IP address of your Vultr instance.

$ ssh linuxuser@Vultr-ip-address

List all attached devices and verify that the new Vultr Block Storage volume displays.

$ lsblk

Your output should be similar to the one below:

NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINTS
sr0     11:0    1 1024M  0 rom
vda    253:0    0   25G  0 disk
├─vda1 253:1    0  512M  0 part /boot/efi
└─vda2 253:2    0 24.5G  0 part /
vdb    253:16   0   10G  0 disk

The output shows `vda` as the root volume and `vdb` as the attached Vultr Block Storage volume, which is 10 GB. Vultr Block Storage volumes use the `vd*` naming scheme, and the letter increments as you attach more volumes, such as `vdb`, `vdc`, `vdd`, and `vde`.

Format the `/dev/vdb` Vultr Block Storage volume with the source's filesystem type, such as `ext4`. This erases any existing data on the volume.

$ sudo mkfs.ext4 /dev/vdb
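Because `mkfs` erases the target device, you may prefer to derive the command from the source filesystem type and refuse to run it against a volume that already has a filesystem. This is a minimal sketch. The name `plan_format` is a hypothetical helper that prints the command rather than running it, and its list of supported types is an assumption you can extend.

```shell
#!/bin/sh
# Hypothetical helper: print the mkfs command matching the source filesystem.
#   $1  source filesystem type, e.g. from `lsblk -no FSTYPE /dev/xvdb` on EC2
#   $2  destination device, e.g. /dev/vdb
#   $3  destination's current filesystem type, e.g. from
#       `lsblk -no FSTYPE /dev/vdb` on Vultr (empty for a blank volume)
# xfs and btrfs also need the xfsprogs and btrfs-progs packages installed.
plan_format() {
  if [ -n "$3" ]; then
    echo "refusing: $2 already contains a $3 filesystem" >&2
    return 1
  fi
  case $1 in
    ext4|ext3|xfs|btrfs) echo "sudo mkfs.$1 $2" ;;
    *) echo "unsupported source filesystem type: '$1'" >&2; return 1 ;;
  esac
}

# Usage on the Vultr instance (SRC_FSTYPE copied from the EC2 instance):
#   plan_format "$SRC_FSTYPE" /dev/vdb "$(lsblk -no FSTYPE /dev/vdb)"
```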

Create a new `/mnt/blockstorage` directory to mount the Vultr Block Storage volume.

$ sudo mkdir /mnt/blockstorage

Mount the `/dev/vdb` Vultr Block Storage volume to the newly created directory.

$ sudo mount /dev/vdb /mnt/blockstorage

Give your user ownership of the `/mnt/blockstorage` directory so Rclone can write to it over SFTP as that user.

$ sudo chown -R linuxuser:linuxuser /mnt/blockstorage

View the disk usage information and verify that the volume is mounted.

$ df -h

Your output should be similar to the one below:

Filesystem      Size  Used Avail Use% Mounted on
/dev/vda2        25G  1.1G   23G   5% /
/dev/vdb         10G   33M  9.5G   1% /mnt/blockstorage

The above output shows that the Vultr Block Storage volume `/dev/vdb` is mounted at `/mnt/blockstorage`.

Note: If the source EBS volume has multiple partitions, configure your destination Vultr Block Storage volume with the same filesystem format and ensure each partition has enough space for the migrated data.
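With both volumes mounted, you can confirm that the source data fits on the destination. This is a minimal sketch that assumes GNU `du` and `df`. The helper names and the 10% headroom for filesystem overhead are assumptions, not Vultr requirements.

```shell
#!/bin/sh
# Hypothetical helpers for a pre-copy capacity check.

# Apparent size in bytes of everything under DIR (GNU du). Run on EC2.
used_bytes() {
  du -sb "$1" | cut -f1
}

# Bytes available to unprivileged users on the filesystem holding DIR
# (GNU df; reserved blocks are excluded). Run on the Vultr instance.
avail_bytes() {
  df -B1 --output=avail "$1" | tail -n 1 | tr -d ' '
}

# Succeed if USED bytes plus 10% headroom fit in AVAIL bytes.
fits_on_destination() {
  [ $(( $1 + $1 / 10 )) -le "$2" ]
}
```

For example, if `used_bytes /mnt/ebs` prints `N` on the EC2 instance, run `fits_on_destination N "$(avail_bytes /mnt/blockstorage)" && echo fits` on the Vultr instance.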

Configure SSH for Data Transfer

Follow the steps below to set up SSH key-based authentication to securely transfer data between your source and destination instance without requiring a password. This method enhances security and simplifies the migration process.

Access the source instance using SSH as a non-root user with sudo privileges. Replace `user` and `ec2-ip-address` with your actual values.

$ ssh -i ec2-instance.pem user@ec2-ip-address

Create a new SSH key pair using the `ed25519` algorithm to authenticate with your Vultr Cloud Compute instance.

$ ssh-keygen -t ed25519 -f ~/.ssh/vultr_migration -C "vultr_migration"

This command generates two files:

- `~/.ssh/vultr_migration`: The private key. Keep this file secure.

- `~/.ssh/vultr_migration.pub`: The public key, which you add to the Vultr instance.

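When prompted for a passphrase, press Return twice to leave it empty, unless you need the additional protection of a passphrase. If you set one, Rclone also needs it through the `key_file_pass` option of the `vultr` remote you configure later.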
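`ssh` refuses to use a private key that group or other users can access. `ssh-keygen` creates the key with safe permissions, but you can recheck it if you later copy or restore the key. This is a minimal sketch that assumes GNU `stat`. The name `key_perms_ok` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the key file grants no permissions to
# group or others, as ssh requires for private keys.
key_perms_ok() {
  local mode
  mode=$(stat -c '%a' "$1") || return 1
  # The last two octal digits are the group and other permissions.
  case $mode in
    *00) return 0 ;;
    *) echo "$1 has mode $mode; fix it with: chmod 600 $1" >&2; return 1 ;;
  esac
}

# Usage on the EC2 instance:
#   key_perms_ok ~/.ssh/vultr_migration
```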

Add the SSH public key to the destination Vultr instance to enable key-based authentication. Replace `Vultr-ip-address` with the public IP address of your Vultr instance.

$ ssh-copy-id -i ~/.ssh/vultr_migration.pub linuxuser@Vultr-ip-address

This command adds the public key to the `~/.ssh/authorized_keys` file on the Vultr instance, allowing access without a password. When prompted, enter `yes` to confirm the connection, and then enter your Vultr instance password.

Test the SSH connection to the Vultr instance using the new SSH key. Replace `Vultr-ip-address` with the public IP address of your Vultr instance.

$ ssh -i ~/.ssh/vultr_migration linuxuser@Vultr-ip-address

If the connection is successful, you should see an output similar to the one below:

Welcome to Ubuntu 24.04.1 LTS (GNU/Linux 6.11.0-13-generic x86_64)
...
linuxuser@vultr:~$

This confirms that key-based authentication works without a password.

After verifying the connection, exit the session to go back to the EC2 instance.

$ exit
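If `ssh-copy-id` isn't available on the source instance, you can reproduce its effect on the Vultr side by hand. This minimal sketch reflects an assumption about the essential steps, not the `ssh-copy-id` implementation. The name `add_authorized_key` is a hypothetical helper that appends a public key once and tightens permissions on the `.ssh` directory and the `authorized_keys` file.

```shell
#!/bin/sh
# Hypothetical helper: append the key in PUBKEY_FILE ($1) to AUTH_FILE
# ($2, default ~/.ssh/authorized_keys) unless it's already present.
add_authorized_key() {
  local pubkey auth dir
  pubkey=$(cat "$1") || return 1
  auth=${2:-$HOME/.ssh/authorized_keys}
  dir=$(dirname "$auth")
  mkdir -p "$dir" && chmod 700 "$dir" || return 1
  touch "$auth" && chmod 600 "$auth" || return 1
  # Start on a fresh line if the file doesn't end with a newline.
  if [ -s "$auth" ] && [ -n "$(tail -c 1 "$auth")" ]; then
    echo >> "$auth"
  fi
  # -x matches whole lines; -F treats the key as a fixed string.
  grep -qxF "$pubkey" "$auth" || printf '%s\n' "$pubkey" >> "$auth"
}
```

For example, copy the public key with `scp ~/.ssh/vultr_migration.pub linuxuser@Vultr-ip-address:`, which uses password authentication. Then define the function on the Vultr instance and run `add_authorized_key ~/vultr_migration.pub`.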

Migrate the Amazon EBS Volume to Vultr Block Storage

Amazon Elastic Block Store (EBS) volumes store persistent data for AWS EC2 instances, and migrating to Vultr Block Storage can offer cost savings, flexibility, and closer integration with Vultr infrastructure. Rclone is an open-source command-line tool for managing files on cloud storage. In this section, you use its SFTP backend to copy the data from the EC2 instance to the Vultr instance with the SSH key pair you configured.

Access the source instance using SSH as a non-root user with sudo privileges. Replace `user` and `ec2-ip-address` with your actual values.

$ ssh -i ec2-instance.pem user@ec2-ip-address

Download the latest Rclone installation script.

$ wget -O rclone.sh https://rclone.org/install.sh

Run the `rclone.sh` script using `bash` to install Rclone.

$ sudo bash rclone.sh

View the installed Rclone version.

$ rclone version

Your output should be similar to the one below:

rclone v1.69.0
os/version: ubuntu 24.04 (64 bit)
os/kernel: 6.8.0-1021-aws (x86_64)
os/type: linux
os/arch: amd64
go/version: go1.23.4
go/linking: static
go/tags: none

Display the path of the Rclone configuration file.

$ rclone config file

Your output should be similar to the one below:

Configuration file doesn't exist, but rclone will use this path: /home/ubuntu/.config/rclone/rclone.conf

Create the configuration directory if it doesn't already exist.

$ mkdir -p ~/.config/rclone

Open the Rclone configuration file.

$ nano ~/.config/rclone/rclone.conf

Add the following configuration to the file. It defines an SFTP remote named `vultr` that connects to the Vultr instance with the SSH key you created. Replace `Vultr-ip-address` with the public IP address of your Vultr instance.

[vultr]
type = sftp
host = Vultr-ip-address
user = linuxuser
key_file = ~/.ssh/vultr_migration

Save and exit the file.
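If you'd rather not edit the file by hand, you can append the same remote from a script. This is a minimal sketch. The name `write_sftp_remote` is a hypothetical helper that writes the keys shown above and refuses to add a second section with the same name. Rclone also provides `rclone config create vultr sftp host=Vultr-ip-address user=linuxuser key_file=~/.ssh/vultr_migration`, which creates the remote without a helper.

```shell
#!/bin/sh
# Hypothetical helper: append an SFTP remote section to an rclone config.
#   $1 config file  $2 remote name  $3 host  $4 user  $5 key file path
write_sftp_remote() {
  local conf name host user key
  conf=$1 name=$2 host=$3 user=$4 key=$5
  mkdir -p "$(dirname "$conf")" || return 1
  if [ -f "$conf" ] && grep -qxF "[$name]" "$conf"; then
    echo "remote [$name] already exists in $conf" >&2
    return 1
  fi
  # Separate the new section from any existing content.
  if [ -s "$conf" ]; then echo >> "$conf"; fi
  {
    echo "[$name]"
    echo "type = sftp"
    echo "host = $host"
    echo "user = $user"
    echo "key_file = $key"
  } >> "$conf"
}

# Usage on the EC2 instance (quote the ~ so it's written literally):
#   write_sftp_remote ~/.config/rclone/rclone.conf vultr Vultr-ip-address linuxuser '~/.ssh/vultr_migration'
```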

Verify the connection by running the following command, which lists the directories in the `linuxuser` home directory on the Vultr instance.

$ rclone lsd vultr:

Your output should be similar to the one below:

-1 2025-02-15 13:57:44        -1 .ssh

Transfer all the volume data from Amazon EBS to Vultr Block Storage.

$ rclone copy /mnt/ebs vultr:/mnt/blockstorage --progress --stats=1s

In the above command:

- `/mnt/ebs`: The source EBS volume.

- `vultr:/mnt/blockstorage`: The destination Vultr Block Storage volume, reached through the `vultr` remote.

- `--progress`: Displays transfer progress.

- `--stats=1s`: Updates transfer statistics every second.

The migration process may take some time to complete depending on the size of the EBS volume, network speed, and the number of files being transferred. Larger volumes may take several hours to complete.

Your output should be similar to the one below:

Transferred:   200 MiB / 200 MiB, 100%, 20.000 MiB/s, ETA 0s
Transferred:   6 / 6, 100%
Elapsed time:  11.1s

Note: If you have multiple EBS volumes to migrate, run the same `rclone copy` command for each volume.
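To migrate several EBS volumes, you can generate one `rclone copy` command per source and destination pair and review the commands before running them. This is a minimal sketch. The name `plan_copies` is a hypothetical helper, and the second pair in the usage line (`/mnt/ebs2`, `/mnt/blockstorage2`) is a placeholder for your own mount points. The helper rejects paths containing characters that would need shell quoting rather than trying to quote them.

```shell
#!/bin/sh
# Hypothetical helper: print one rclone copy command per directory pair.
#   $1          rclone remote name, e.g. vultr
#   $2 $3 ...   pairs of SOURCE_DIR DEST_DIR
plan_copies() {
  local remote
  if [ $# -lt 3 ] || [ $(( ($# - 1) % 2 )) -ne 0 ]; then
    echo "usage: plan_copies REMOTE SRC DEST [SRC DEST ...]" >&2
    return 1
  fi
  remote=$1
  shift
  while [ $# -gt 0 ]; do
    # Only allow characters that are safe to paste into a shell unquoted.
    case "$1$2" in
      *[!A-Za-z0-9_./-]*) echo "unsupported characters in: $1 $2" >&2; return 1 ;;
    esac
    echo "rclone copy $1 $remote:$2 --progress --stats=1s"
    shift 2
  done
}

# Usage on the EC2 instance:
#   plan_copies vultr /mnt/ebs /mnt/blockstorage /mnt/ebs2 /mnt/blockstorage2
```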

Test the Vultr Block Storage Volume

After migrating the source volume data, it's important to test the data integrity and ensure that no files were corrupted or omitted during the migration process. Follow the steps below to verify data integrity and confirm that the contents of the Vultr Block Storage volume match those of the source Amazon EBS volume.

$ rclone check /mnt/ebs vultr:/mnt/blockstorage --one-way

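The above command compares the files on the source EBS volume against the destination Vultr Block Storage volume. It checks file sizes and, when both sides support a common hash such as MD5 or SHA-1, file hashes. The `--one-way` flag only checks that every source file exists and matches in the destination; extra files on the destination are not reported.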

Your output should be similar to the one below:

2025/02/15 13:57:44 NOTICE: sftp://linuxuser@192.0.2.0:22//mnt/blockstorage: 0 differences found
2025/02/15 13:57:44 NOTICE: sftp://linuxuser@192.0.2.0:22//mnt/blockstorage: 6 matching files

Cutover Applications to Vultr Block Storage

After the migration succeeds, cut over your applications and services so they use the data on Vultr Block Storage. Follow the recommendations below to complete the cutover.

- Ensure the migrated data has the ownership and permissions your applications require.

- Move your applications and services to the Vultr Cloud Compute instance and point them at the data on the Vultr Block Storage volume, such as `/mnt/blockstorage`.

- Log in to your DNS provider and update DNS records to point to the Vultr instance’s public IP address.

- Detach and delete your Amazon EBS volume only after you confirm that all files and folders are on the Vultr Block Storage volume. Deleting an EBS volume permanently removes its data unless you keep a snapshot of it.
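Because deleting an EBS volume is permanent, you may want a second integrity check that doesn't rely on Rclone before you detach and delete it. This is a minimal sketch that assumes GNU coreutils and findutils on both instances. The name `make_manifest` is a hypothetical helper. It lists a SHA-256 checksum for every regular file, with paths relative to the mount point and sorted bytewise, so the manifests from both instances compare line by line.

```shell
#!/bin/sh
# Hypothetical helper: print "<sha256>  ./<relative path>" for every
# regular file under DIR, sorted bytewise (LC_ALL=C) so the order is
# identical on both hosts regardless of locale.
make_manifest() {
  [ -d "$1" ] || { echo "not a directory: $1" >&2; return 1; }
  # -print0/-z/-0 keep unusual file names intact; xargs -r skips
  # sha256sum entirely when there are no files.
  ( cd "$1" && find . -type f -print0 | LC_ALL=C sort -z | xargs -0 -r sha256sum )
}
```

On the EC2 instance, run `make_manifest /mnt/ebs > ebs.sha256` and copy the file to the Vultr instance, for example with `scp -i ~/.ssh/vultr_migration ebs.sha256 linuxuser@Vultr-ip-address:`. On the Vultr instance, run `make_manifest /mnt/blockstorage > vultr.sha256` followed by `diff ebs.sha256 vultr.sha256`. No output means every file matches. Empty directories don't appear in either manifest.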

Conclusion

You have migrated an Amazon Elastic Block Store (EBS) volume to Vultr Block Storage using Rclone. You can now manage your storage needs with Vultr Block Storage, resize volumes when you need more space, and attach volumes to different instances. For additional information about Vultr Block Storage, refer to the official documentation.