Vultr Serverless Inference allows you to run inference workloads for large language models such as Mixtral 8x7B, Mistral 7B, Meta Llama 2 70B, and more. Using Vultr Serverless Inference, you can run inference workloads without having to worry about the infrastructure, and you only pay for the input and output tokens.

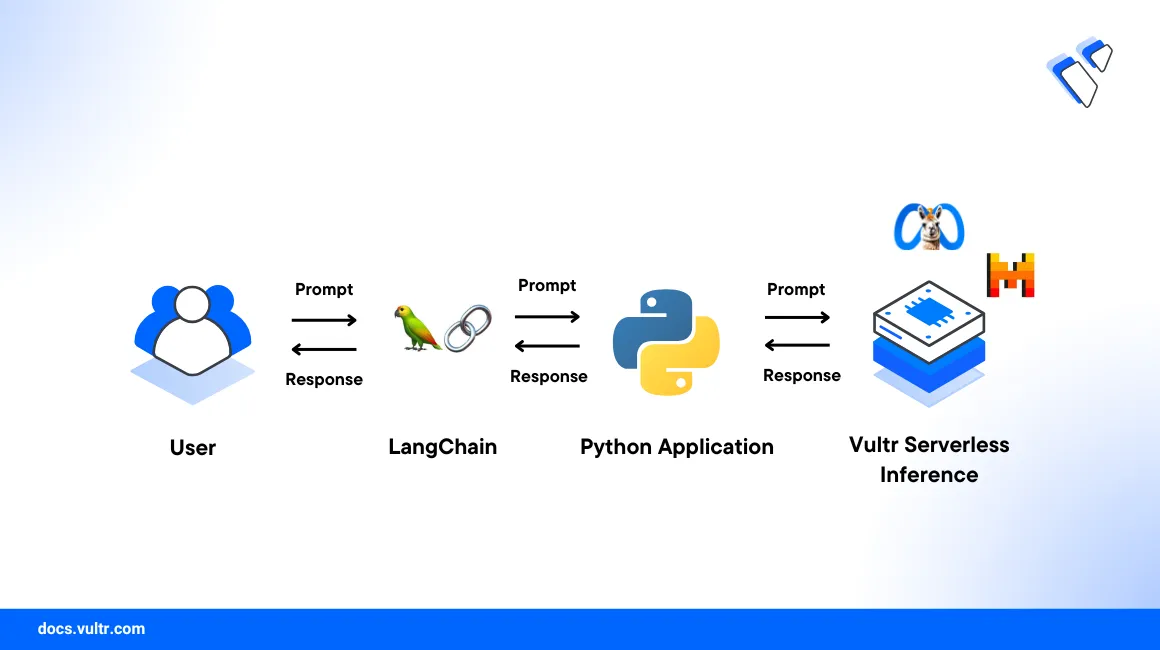

This guide demonstrates the step-by-step process to start using Vultr Serverless Inference in Python with Langchain.

Prerequisites

Before you begin, you must:

- Create a Vultr Serverless Inference Subscription

- Fetch the API key for Vultr Serverless Inference

- Install Python 3.10 or later

Set Up the Environment

Create a new project directory and navigate to it.

```console
$ mkdir vultr-serverless-inference-python-langchain
$ cd vultr-serverless-inference-python-langchain
```

Create a new Python virtual environment.

```console
$ python3 -m venv venv
$ source venv/bin/activate
```

Install the required Python packages.

```console
(venv) $ pip install langchain-openai
```

Inference via Langchain

You can run inference workloads on Vultr Serverless Inference from Langchain by using the `langchain-openai` package and pointing its `ChatOpenAI` client at the Vultr Serverless Inference API endpoint.

Create a new Python file named `inference_langchain.py`.

```console
(venv) $ nano inference_langchain.py
```

Add the following code to `inference_langchain.py`.

```python
import os

from langchain_openai import ChatOpenAI
from langchain_core.messages import HumanMessage, SystemMessage

# Read the API key from the environment
api_key = os.environ.get('VULTR_SERVERLESS_INFERENCE_API_KEY')

# Set the model
# List of available models: https://api.vultrinference.com/v1/chat/models
model = ''

# Messages to send to the model
messages = [
    HumanMessage(content="What is the capital of India?"),
]

# Point the OpenAI-style chat client at the Vultr Serverless Inference endpoint
client = ChatOpenAI(
    openai_api_key=api_key,
    openai_api_base='https://api.vultrinference.com/v1',
    model=model,
)

# Send the messages and print the text of the reply
llm_response = client.invoke(messages)
print(llm_response.content)
```
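The `model` value in the script is left empty, and the comment points to the models endpoint for the available choices. The following is a minimal sketch of querying that endpoint with the Python standard library. It assumes the endpoint accepts the same API key as a Bearer token, which this guide does not confirm, and it makes no assumption about the shape of the JSON that comes back. The names `build_models_request` and `fetch_models` are hypothetical helpers, not part of any Vultr or Langchain package.

```python
import json
import urllib.request

# URL taken from the comment in inference_langchain.py
MODELS_URL = "https://api.vultrinference.com/v1/chat/models"


def build_models_request(api_key: str) -> urllib.request.Request:
    """Build a GET request for the model list (assumes Bearer-token auth)."""
    return urllib.request.Request(
        MODELS_URL,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Accept": "application/json",
        },
        method="GET",
    )


def fetch_models(api_key: str, timeout: float = 10.0):
    """Send the request and return the decoded JSON body as-is."""
    request = build_models_request(api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage (requires network access and a valid key):
# import os
# print(fetch_models(os.environ["VULTR_SERVERLESS_INFERENCE_API_KEY"]))
```

Print the response once, pick a model name from it, and assign that name to `model` in `inference_langchain.py`.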

Run the `inference_langchain.py` file.

```console
(venv) $ export VULTR_SERVERLESS_INFERENCE_API_KEY=<your_api_key>
(venv) $ python inference_langchain.py
```
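The script reads the key with `os.environ.get`, which returns `None` when the variable was never exported, so any resulting failure only surfaces later inside the client. A small guard can fail early with a clearer message. This is a sketch only; `require_api_key` is a hypothetical helper name, and the optional `env` parameter exists just to make the function easy to test.

```python
import os

ENV_VAR = "VULTR_SERVERLESS_INFERENCE_API_KEY"


def require_api_key(env=None) -> str:
    """Return the API key from the environment, or raise a clear error."""
    env = os.environ if env is None else env
    # Treat a missing, empty, or whitespace-only value as "not set"
    key = (env.get(ENV_VAR) or "").strip()
    if not key:
        raise RuntimeError(
            f"{ENV_VAR} is not set; export it before running the script."
        )
    return key


# Usage in inference_langchain.py, replacing the os.environ.get line:
# api_key = require_api_key()
```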

Here, the `inference_langchain.py` file uses the `langchain-openai` package to run inference workloads for Vultr Serverless Inference. Langchain represents the different types of chat messages with classes such as `HumanMessage` and `SystemMessage`, which the script imports from `langchain_core.messages`. For more information, refer to the Langchain documentation.
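The script sends only a human message. To illustrate how a system message could be combined with it, here is a minimal sketch that builds the message list as `(role, content)` tuples. It relies on the assumption that Langchain chat models accept such tuples as shorthand for `SystemMessage` and `HumanMessage`; the `build_messages` helper is hypothetical and is not part of Langchain.

```python
from typing import List, Optional, Tuple


def build_messages(
    question: str, system_prompt: Optional[str] = None
) -> List[Tuple[str, str]]:
    """Return chat messages as (role, content) tuples, system prompt first."""
    messages: List[Tuple[str, str]] = []
    if system_prompt:
        # The system message sets the behavior for the whole conversation
        messages.append(("system", system_prompt))
    messages.append(("human", question))
    return messages


# Usage with the client from inference_langchain.py (requires network access):
# llm_response = client.invoke(
#     build_messages("What is the capital of India?", "Answer in one short sentence.")
# )
# print(llm_response.content)
```

Keeping the system prompt optional means the same helper reproduces the original single-message call when no prompt is given.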

Conclusion

In this guide, you learned how to use Vultr Serverless Inference in Python with Langchain. You can now integrate Vultr Serverless Inference into Python applications that use Langchain to generate completions from large language models.