Yi Vision Language Model Inference Workload on Vultr Cloud GPU

Introduction

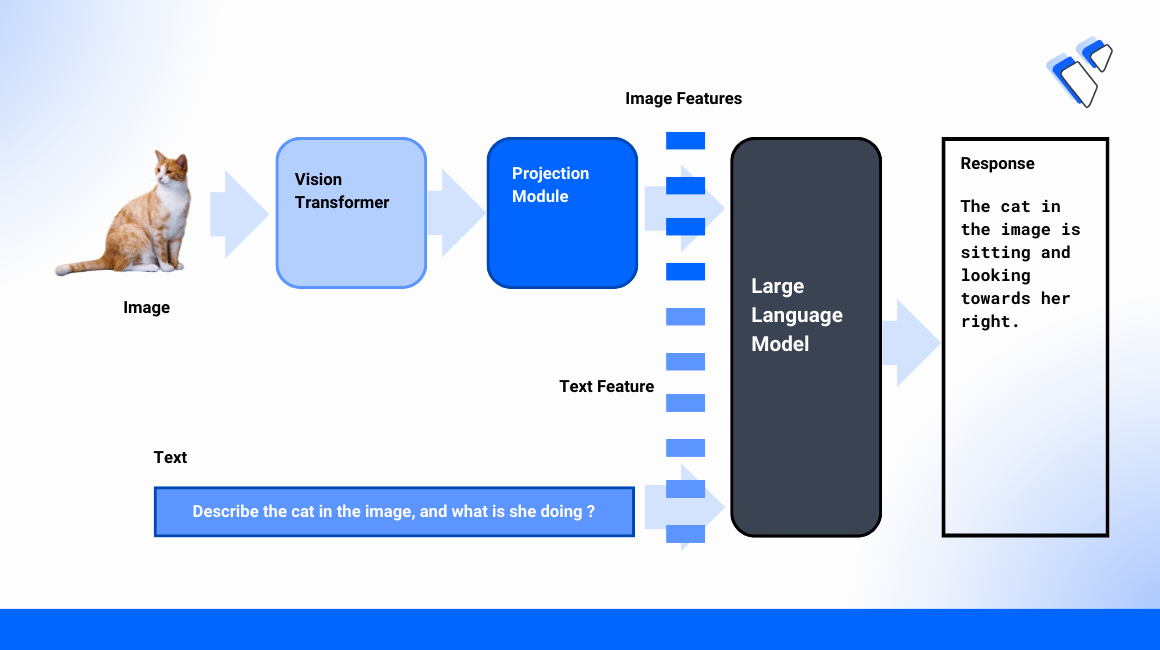

Yi Vision Language (Yi-VL) model is an open source model with the capability to perform multi-round conversations given an image input, it has the comprehension ability to read text from images and perform translations. The Yi-VL model is a multi-model version of the Yi LLM series, the former comes with two checkpoints that are 6B and 34B, and has outperformed all existing open-source MMMU and CMMMU models.

This guide explains the steps to perform inference with the model, following the steps you can create conversations with the models or just ask single questions for a detailed answer about the image input.

Prerequisites

- Deploy a fresh Ubuntu 22.04 A100 Vultr Cloud GPU server.

- Securely access the server using SSH as a non-root sudo user.

- Update the server.

Set Up The Environment

In this section you are to install the dependencies, clone the model repository, and download the model to perform inference workloads in the later section on the Yi-VL-6B and 34B models.

Clone the Yi repository

console$ git clone https://github.com/01-ai/Yi.git $ cd Yi/VL

Install the Python dependencies from the

requirements.txtfile.console$ pip install -r requirements.txt

Download the Yi Vision Language Model.

console$ python3 -c "from huggingface_hub import snapshot_download; print(snapshot_download(repo_id='01-ai/Yi-VL-6B'))"

Copy the path in the console, printed after the model is downloaded.

Similarly if you want to download the 34B model.

console$ python3 -c "from huggingface_hub import snapshot_download; print(snapshot_download(repo_id='01-ai/Yi-VL-34B'))"

Export the model path variable.

console$ export MODEL_PATH = "actual/model/path"

Make sure to replace the

actual/model/pathwith the path you copied earlier in the section.Export the image path variable

console$ export IMAGE_PATH = "actual/image/path"

Execute Inference Workloads on Yi Vision Language Model

In this section, you are to perform inference on the downloaded model via 2 ways. At first, you are to perform single inference with the model using an image input, single inference allows the user to ask one question at a time and you will not be able to make conversation with the model. In the later steps, you are to perform inference in a conversational mode where you can ask any number of questions with the model about the image input provided.

Perform single inference with

single_inferencescript.console$ python3 single_inference.py --model-path MODEL_PATH --image IMAGE_PATH --question <question>

Make sure to replace

<question>with the actual prompt, for example:What is in the image? What is the object doing in the image?Perform multi inference using the

cli.pyscript.console$ python3 cli.py --model-path MODEL_PATH --image IMAGE_PATH

On the successful execution of the script, the model will prompt you for a question, below is an example conversation:

Human: What is in the image? AI: cats. Humans: What are the cats doing in the image? AI: eating. Humans: What are the cats in the image eating? AI: cat food.

Yi Vision Language Model Inference via Web Interface

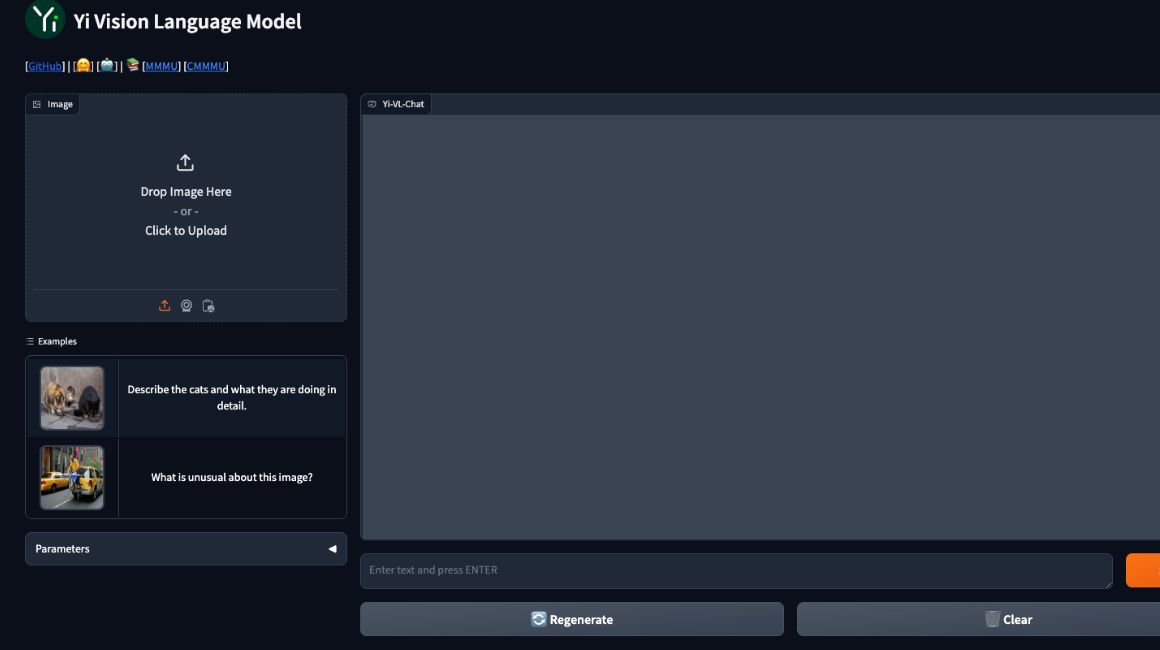

In this section, you are to perform multi inference using a Gradio web interface where you can drag and drop images, upload images, or use the sample images provided

Allow incoming connections on port

8111.console$ sudo ufw allow 8111

Run the web demonstration script.

console$ python3 web_demo.py --model-path MODEL_PATH --server-name 0.0.0.0

Visit

http://SERVER_IP:8111to perform inference with the Gradio interface. The web interface should look like the one below.

VRAM Consumption and Requirements

The VRAM consumptions for both the checkpoints of the model are as follows:

- Yi-VL-6B model : 2.2 GB

- Yi-VL-34B model : 9 GB

To execute inference workloads on both checkpoints you need at least an NVIDIA 1/3 A40, moving upwards with more GPU RAM or a more capable GPU such as A100 or H100 can decrease the response generation time.

Use Cases and Yi Vision Language Model Limitations

These are potential use cases for vision language models in general, they are not specific to the model or any of its checkpoints used in this guide.

Use cases:

Automated Image-Text Generation: Automation of generating text from an image or vice versa helps in content creation on various platforms and storytelling.

Enhanced Search and Recommendation Systems: By understanding user inputs that include both text and image search engines may retrieve more accurate and relevant results.

Assistive Technologies: Helping individuals with visual impairments by describing the content of images or providing information through a combination of text and audio.

Yi VL Model Limitations:

- The model is not capable of accepting multiple images as input rather it can only take an input of a single image.

- The model may hallucinate in cases if the input image has too many objects and the model incorrectly identifies those objects.

- Low-resolution image inputs in the Yi-VL model may result in information loss.

Conclusion

In this guide you utilized the vision language capabilities of the YI-VL models with different checkpoints, you performed inference via 3 modes in which you used two techniques that are single inference and multi inference to get a response from the model.