Introduction

TensorFlow Serving is a flexible, high-performance serving system for machine learning models designed for production environments. This guide shows how to train a simple model and serve this model with TensorFlow Serving using Docker. It also shows how to serve many models simultaneously.

Prerequisites

- Working knowledge of Python.

- Properly installed and configured Python toolchain, including pip.

Deploy the Docker instance

Use Vultr One-Click Docker to deploy your Docker instance. This guide uses the Ubuntu version.

After the deployment, log in to the server via SSH.

Update the machine, then reboot it to apply the updates.

# apt-get update && apt-get dist-upgrade -y

# reboot

It is recommended to use a virtual environment when working on Python projects. Install the package that provides the Python venv module, or use any other method you prefer.

On Ubuntu 20.04:

# apt-get install python3.8-venv

On Ubuntu 22.04:

# apt-get install python3.10-venv

After rebooting, switch to the docker user and download the TensorFlow Serving image.

# su -l docker -s /usr/bin/bash

$ docker pull tensorflow/serving

Create the Example Model

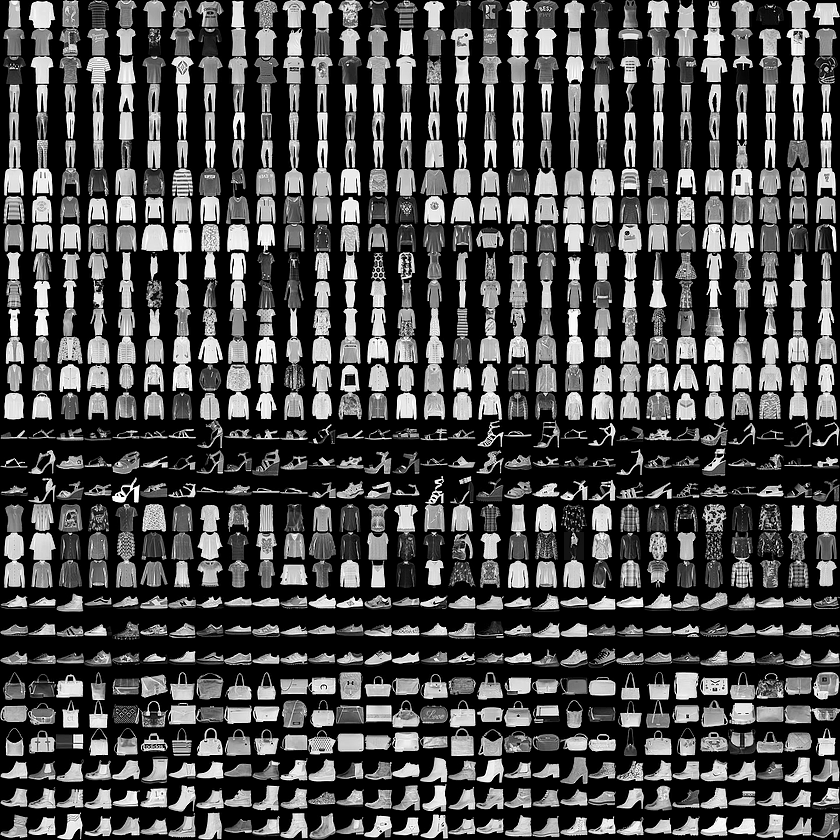

Train a model on the Fashion-MNIST dataset, which consists of 60,000 training examples and 10,000 test examples in ten classes. Each sample is a 28x28-pixel grayscale image of a piece of clothing.

This guide does not detail the model training or do any parameter tuning. The focus is on TensorFlow Serving rather than the model training.

Set up a virtual environment and install the necessary Python packages.

$ python3 -m venv tfserving-venv

$ . tfserving-venv/bin/activate

$ pip install tensorflow requests

Create a file model.py with the following code, which loads and prepares the data.

import tensorflow as tf
from tensorflow import keras

# load data
fashion_mnist = keras.datasets.fashion_mnist
(train_data, train_labels), (test_data, test_labels) = fashion_mnist.load_data()

class_names = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
               "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]

# scale data values to [0,1] interval
train_data = train_data / 255.0
test_data = test_data / 255.0

# reshape data to represent 28x28 pixels grayscale images
train_data = train_data.reshape(train_data.shape[0], 28, 28, 1)
test_data = test_data.reshape(test_data.shape[0], 28, 28, 1)

Build a CNN with a single convolutional layer (8 filters of size 3x3) and an output layer with ten units.

model = keras.Sequential([
    keras.layers.Conv2D(input_shape=(28,28,1), filters=8, kernel_size=3,
                        strides=2, activation="relu", name="Conv1"),
    keras.layers.Flatten(),
    keras.layers.Dense(10, name="Dense")
])

Train the model.

epochs = 3

model.compile(optimizer="adam",
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
              metrics=[keras.metrics.SparseCategoricalAccuracy()])

model.fit(train_data, train_labels, epochs=epochs)

Save the model.

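import os

MODEL_DIR = "/home/docker/tfserving-fashion"
VERSION = "1"
export_path = os.path.join(MODEL_DIR, VERSION)

# Writes a SavedModel under TensorFlow releases that ship Keras 2.
# With Keras 3 (TensorFlow 2.16 and later), this call rejects paths without a
# .keras or .h5 extension; model.export(export_path) writes a SavedModel there.
tf.keras.models.save_model(
    model,
    export_path,
    overwrite=True,
    include_optimizer=True,
    save_format=None,
    signatures=None,
    options=None
)

Run the code in model.py.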

$ python model.py
...
Epoch 1/3
1875/1875 [==============================] - 5s 3ms/step - loss: 0.5629 - sparse_categorical_accuracy: 0.8033
Epoch 2/3
1875/1875 [==============================] - 5s 3ms/step - loss: 0.4119 - sparse_categorical_accuracy: 0.8566
Epoch 3/3
1875/1875 [==============================] - 5s 3ms/step - loss: 0.3708 - sparse_categorical_accuracy: 0.8696

You can inspect the saved model with the saved_model_cli command.

$ saved_model_cli show --dir ~/tfserving-fashion/1 --all

Start the server
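TensorFlow Serving expects the model directory to contain one numeric subdirectory per version (here, the 1 directory written by model.py) and, by default, serves the highest-numbered one. The sketch below mimics that selection on a temporary directory. The helper latest_version_dir is hypothetical and written only for illustration; it is not part of TensorFlow Serving.

```python
import os
import tempfile


def latest_version_dir(model_base_path):
    """Return the highest-numbered version subdirectory, or None if there is none.

    Hypothetical helper: it only imitates the default "latest version" choice.
    """
    versions = [
        name for name in os.listdir(model_base_path)
        if name.isdigit() and os.path.isdir(os.path.join(model_base_path, name))
    ]
    if not versions:
        return None
    # Compare numerically so that "10" ranks above "2".
    return os.path.join(model_base_path, max(versions, key=int))


# Demonstrate on a throwaway directory that mimics MODEL_DIR from model.py.
with tempfile.TemporaryDirectory() as base:
    for version in ("1", "2", "10"):
        os.makedirs(os.path.join(base, version))
    chosen = latest_version_dir(base)
    print(os.path.basename(chosen))  # prints 10
```

Saving a retrained model under a higher VERSION value therefore gives the server a newer version to pick up without changing the mounted path.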

Start the server using Docker.

$ docker run -t --rm -p 8501:8501 \
    -v "/home/docker/tfserving-fashion:/models/fashion" \
    -e MODEL_NAME=fashion \
    tensorflow/serving &

How to Make Queries

Create a file query.py with the following code, which loads the data, sets the query header, and defines query data as the first example in the test dataset.

import json
import requests
import numpy as np
from tensorflow import keras

fashion_mnist = keras.datasets.fashion_mnist
(train_data, train_labels), (test_data, test_labels) = fashion_mnist.load_data()

class_names = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
               "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]

# scale data values to [0,1] interval
train_data = train_data / 255.0
test_data = test_data / 255.0

# reshape data to represent 28x28 pixels grayscale images
train_data = train_data.reshape(train_data.shape[0], 28, 28, 1)
test_data = test_data.reshape(test_data.shape[0], 28, 28, 1)

data = json.dumps({"instances": test_data[0:1].tolist()})
headers = {"content-type": "application/json"}

Make the query and get the response. Remember to change the address to your server IP or FQDN.

json_response = requests.post("http://<<SERVER IP/FQDN>>:8501/v1/models/fashion:predict",
                              data=data, headers=headers)
predictions = json.loads(json_response.text)["predictions"]

The predictions variable contains, for each queried example, a list of ten scores, one per class. Because the output layer has no softmax activation, these scores are logits rather than probabilities. The class with the greatest score is the classification made by the model, and the function np.argmax returns its index.
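Because model.py uses a Dense output layer without an activation and compiles the loss with from_logits=True, the values the server returns are unnormalized scores. If you want probabilities, you can apply a softmax on the client side. The following is a minimal sketch using only the standard library; the logits in example_logits are made up for illustration and are not real server output.

```python
import math


def softmax(logits):
    """Convert a list of logits into probabilities that sum to 1."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(value - shift) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


# Made-up logits for one example; a real response holds ten values per instance.
example_logits = [-3.2, -7.1, -2.5, -4.0, -3.8, 2.1, -1.9, 4.3, 0.6, 9.7]
probabilities = softmax(example_logits)

# Softmax preserves the ordering, so the largest logit is also the largest probability.
predicted_index = max(range(len(probabilities)), key=probabilities.__getitem__)
print(predicted_index)  # prints 9, the index of "Ankle boot" in class_names
```

Softmax does not change which class wins, so taking np.argmax directly on the returned values gives the same classification.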

print("Real class:", class_names[test_labels[0]])
print("Predicted class:", class_names[np.argmax(predictions[0])])

Run query.py, and you should see the result of this query.

$ python3 query.py
...
Real class: Ankle boot
Predicted class: Ankle boot

You can also send more than one example per request. For example, change the data variable to the first five samples in the test data, and change the output of query.py to show the classification of each of the five queries.

data = json.dumps({"instances": test_data[0:5].tolist()})

...

for i in range(len(predictions)):
    print("Query", str(i))
    print("Real class:", class_names[test_labels[i]])
    print("Predicted class:", class_names[np.argmax(predictions[i])])

Running query.py again gives the result of the query with five examples.

$ python3 query.py
...
Query 0
Real class: Ankle boot
Predicted class: Ankle boot
Query 1
Real class: Pullover
Predicted class: Pullover
Query 2
Real class: Trouser
Predicted class: Trouser
Query 3
Real class: Trouser
Predicted class: Trouser
Query 4
Real class: Shirt
Predicted class: Shirt

Serving more than one model

TensorFlow Serving is also capable of serving more than one model simultaneously. This time, use some of the pre-trained models available in the TensorFlow Serving GitHub repository.

Install Git if it is not installed yet.

# apt-get install git

Then clone the repository.

$ git clone https://github.com/tensorflow/serving

Create a file named models.config with the content below. It configures two models, defining for each one the name, the base path inside the Docker container running your server, and the model platform.

model_config_list {
  config {
    name: 'half_plus_two'
    base_path: '/models/saved_model_half_plus_two_cpu'
    model_platform: 'tensorflow'
  }
  config {
    name: 'half_plus_three'
    base_path: '/models/saved_model_half_plus_three'
    model_platform: 'tensorflow'
  }
}

Each model halves its input and then adds two or three, respectively.
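In plain Python, the arithmetic these two models perform can be sketched as follows. The function half_plus is a hypothetical stand-in written for illustration; the real models are TensorFlow SavedModels from the repository's test data.

```python
def half_plus(values, offset):
    """Halve each value and add the given offset."""
    return [value / 2 + offset for value in values]


instances = [1.0, 2.0, 3.0]

half_plus_two = half_plus(instances, 2.0)
half_plus_three = half_plus(instances, 3.0)

print(half_plus_two)    # [2.5, 3.0, 3.5]
print(half_plus_three)  # [3.5, 4.0, 4.5]
```

These values give you something to compare against when you query the served models with the same three inputs.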

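Stop the container started earlier, because it still holds port 8501 (for example, find it with docker ps and stop it with docker stop). Then start the server again, this time loading this configuration file and mounting the volume with the pre-trained models.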

$ docker run -t --rm -p 8501:8501 \
    -v "/home/docker/serving/tensorflow_serving/servables/tensorflow/testdata/:/models/" \
    -v "/home/docker/models.config:/tmp/models.config" \
    tensorflow/serving \
    --model_config_file=/tmp/models.config

You can view the status of each model with an HTTP GET request, either in a browser or using curl.

$ curl http://<<SERVER IP/FQDN>>:8501/v1/models/half_plus_two
{
  "model_version_status": [
    {
      "version": "123",
      "state": "AVAILABLE",
      "status": {
        "error_code": "OK",
        "error_message": ""
      }
    }
  ]
}

$ curl http://<<SERVER IP/FQDN>>:8501/v1/models/half_plus_three
{
  "model_version_status": [
    {
      "version": "123",
      "state": "AVAILABLE",
      "status": {
        "error_code": "OK",
        "error_message": ""
      }
    }
  ]
}

Request predictions from both models with an HTTP POST request using curl:

$ curl -d '{"instances": [1.0, 2.0, 3.0]}' \
    -X POST http://<<SERVER IP/FQDN>>:8501/v1/models/half_plus_two:predict
{
  "predictions": [2.5, 3.0, 3.5]
}

$ curl -d '{"instances": [1.0, 2.0, 3.0]}' \
    -X POST http://<<SERVER IP/FQDN>>:8501/v1/models/half_plus_three:predict
{
  "predictions": [3.5, 4.0, 4.5]
}

Conclusion

This guide covered how to train a TensorFlow model and use TensorFlow Serving with Docker to serve it, as well as how to serve several models simultaneously.

More information

To learn more about TensorFlow Serving, see the TensorFlow Serving developer documentation.
