Introduction

Welcome to the fascinating world of generative Artificial Intelligence (AI). This course delves into the mechanisms that empower machines to create, innovate, and even mimic human-like creativity.

As developers, you already harness the power of coding to bring ideas to life. After this course, you'll be able to add AI-powered content generation, such as art, text, or even music, to your applications. From the foundational principles of neural networks to the intricacies of models like Llama and Stable Diffusion, this course equips you with the knowledge and tools to integrate generative intelligence into your projects.

Evolution of Generative AI

Generative AI hit the headlines after the launch of ChatGPT in November 2022. The technology grew in popularity and became mainstream in 2023. However, generative AI did not appear out of nowhere and is more of an evolution than a revolution.

As a developer who wants to leverage the power of generative AI to build next-generation applications, you must understand the history of AI and the key milestones that led to generative AI.

Traditional Machine Learning

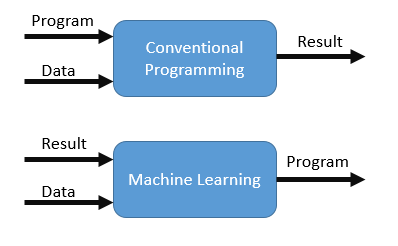

Machine Learning (ML) is a subset of artificial intelligence that focuses on developing algorithms that enable computers to learn from data and make data-driven decisions.

While deep learning and neural networks have taken center stage in recent years, they are just a fraction of the ML universe. Before these technologies became mainstream, there was traditional (or classical) machine learning, which is still widely applicable.

At its core, traditional machine learning involves algorithms that learn patterns from data and then use these patterns to predict future data or make other kinds of decisions. A machine learning model is an entity that learns patterns from existing data to perform predictions when given new input.

One of the most crucial aspects of machine learning is feature engineering, which involves selecting and creating the most relevant input variables that influence the learning ability of a model and, thus, the accuracy of the predictions.

Traditional machine learning deals with algorithms such as linear regression, logistic regression, decision trees, naive Bayes, and k-means clustering. It remains an indispensable tool for a data scientist. Compared with deep learning, it typically needs less computing power, and its training process is less resource-intensive.
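To make the idea of "learning patterns from data" concrete, here is a minimal sketch of linear regression, one of the algorithms listed above, written in plain Python. The data (floor area and price) and the function names `fit_line` and `predict` are hypothetical and chosen only for illustration. The single hand-picked input, floor area, plays the role of an engineered feature.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a line: y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalized).
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned pattern to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data: floor area (m^2) -> price (in thousands).
areas = [50, 70, 90, 110]
prices = [150, 210, 270, 330]

model = fit_line(areas, prices)
print(predict(model, 100))  # price predicted for an unseen 100 m^2 home
```

The model here is just two numbers, which is part of why classical methods like this are cheap to train.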

Deep Learning and Neural Networks

Deep learning is a transformative and influential subset of artificial intelligence. Powering applications from voice recognition to autonomous vehicles, it offers human-like functionalities to computers. Neural networks, whose workings are loosely inspired by the human brain, are at the core of deep learning.

Deep learning is a subset of machine learning that employs neural networks with many layers (hence the word "deep") to analyze various data factors. These networks can learn and make independent decisions by analyzing large amounts of data and identifying patterns.

Neural Network Architecture

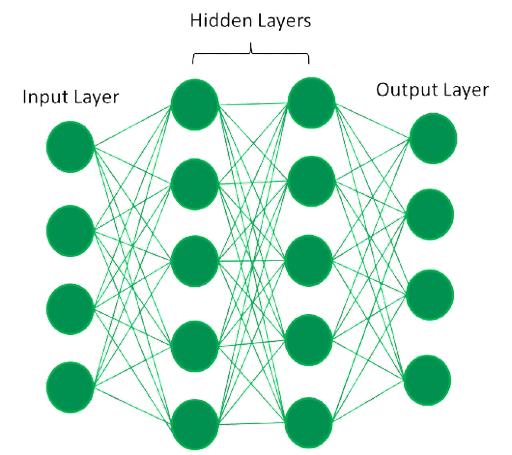

A neural network architecture comprises the following components:

Neurons: Neurons form the basic units of a neural network. Each neuron receives input, processes it, and passes its output to the next layer.

Layers: These are the different levels of a neural network. There are three main types of layers: input layer, hidden layer, and output layer. The input layer receives the input data, the hidden layer performs the processing, and the output layer generates the output. See the following illustration:

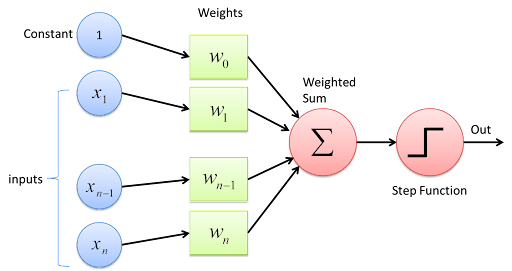

Weights and biases: These are parameters within the network that transform input data within the network layers. A neural network learns by adjusting the weights and biases to decrease the difference between the predicted output and the actual target values.

Activation functions: These functions are used to determine the output of a neuron. Some common examples include the ReLU, sigmoid, and tanh functions.

Deep learning models learn by iteratively processing a dataset and adjusting the internal parameters to reduce the prediction error. This training process relies on two techniques:

Forward propagation: Calculates the predicted output using the current parameters of the model. Once this output is determined, the loss calculation measures the difference between the predicted output and the actual target.

Backpropagation: Propagates the loss backward through the network to work out how much each weight and bias contributed to it, so that these parameters can be adjusted to decrease the loss.

In addition, optimization algorithms such as gradient descent and its variants, including stochastic gradient descent, mini-batch gradient descent, and Adam, update the model's weights to produce more accurate predictions.
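The training loop described above can be sketched with a single neuron. This is a minimal illustration under stated assumptions, not a full deep network: the task (learning the logical OR function), the sigmoid activation, the cross-entropy loss, the learning rate, and all function names are choices made for this example. With only one neuron, the "backward" step reduces to a single gradient expression.

```python
import math


def sigmoid(z):
    """Activation function: squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(weights, bias, inputs):
    """Forward propagation for one neuron: weighted sum, then activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def mean_loss(weights, bias, data):
    """Average binary cross-entropy between predictions and targets."""
    total = 0.0
    for inputs, target in data:
        p = forward(weights, bias, inputs)
        p = min(max(p, 1e-12), 1 - 1e-12)  # avoid log(0)
        total -= target * math.log(p) + (1 - target) * math.log(1 - p)
    return total / len(data)


def train(data, epochs=1000, learning_rate=0.5):
    """Stochastic gradient descent: update after every training example."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in data:
            prediction = forward(weights, bias, inputs)
            # With a sigmoid output and cross-entropy loss, the gradient of
            # the loss with respect to the weighted sum is prediction - target.
            error = prediction - target
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias


# Illustrative dataset: the logical OR of two binary inputs.
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = train(data)
print("loss before training:", mean_loss([0.0, 0.0], 0.0, data))
print("loss after training: ", mean_loss(weights, bias, data))
for inputs, target in data:
    print(inputs, "->", round(forward(weights, bias, inputs)), "expected", target)
```

In a multi-layer network the same three steps apply, but backpropagation uses the chain rule to pass the error back through every layer.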

The following are the most popular deep-learning network architectures:

- Convolutional Neural Networks (CNNs)

- Recurrent Neural Networks (RNNs)

- Long Short-Term Memory (LSTM)

- Gated Recurrent Units (GRU)

Unlike traditional machine learning, deep learning depends on large amounts of data for training. It demands significant computational resources, especially for training large models. Deep neural networks also often act as "black boxes," making it challenging to understand their decision-making processes.

Generative AI

Deep learning and neural networks serve as the foundations for generative AI. Some recent advances and research in deep learning have resulted in the rise of generative AI.

Generative AI is about building models that generate new data that mimics some given data. Rather than just predicting a label or a value, generative models output new samples that look as if they were drawn from the same distribution as the training data.
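The idea of "sampling from the same distribution" can be shown with about the simplest generative model possible: estimate a mean and standard deviation from data, then draw new values from the fitted bell curve. This is only a sketch. Real generative models learn far richer distributions with neural networks, and the height data and function names below are hypothetical.

```python
import random
import statistics


def fit_gaussian(samples):
    """'Training': estimate the parameters of the data distribution."""
    return statistics.mean(samples), statistics.stdev(samples)


def generate(model, count, rng):
    """'Generation': draw brand-new samples from the learned distribution."""
    mean, std = model
    return [rng.gauss(mean, std) for _ in range(count)]


rng = random.Random(0)  # fixed seed so the run is repeatable

# Hypothetical training data: 500 heights in centimeters.
training_heights = [rng.gauss(170.0, 8.0) for _ in range(500)]

model = fit_gaussian(training_heights)
new_heights = generate(model, 500, rng)

print("training mean: ", statistics.mean(training_heights))
print("generated mean:", statistics.mean(new_heights))
```

The generated values are new numbers rather than copies of the training data, yet they follow the same overall pattern.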

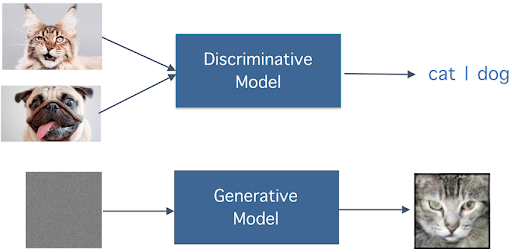

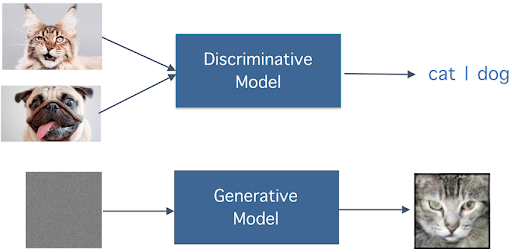

Imagine having a set of cat photos. A typical neural network might classify whether a given image is a cat or not. Conversely, a generative model would try to create a new image that looks like a cat, even though it's not a replica of any cat photo it has seen before.

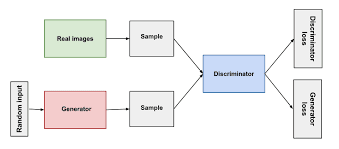

As discussed earlier, generative AI extends neural networks with advanced and complex architectures capable of producing and recreating content. The most popular generative models, like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), leverage deep neural network structures.

GANs include two networks: a generator and a discriminator. The generator tries to produce fake data, while the discriminator tries to distinguish between real and fake data. Over time, the generator gets so good that the discriminator can't tell real from fake.

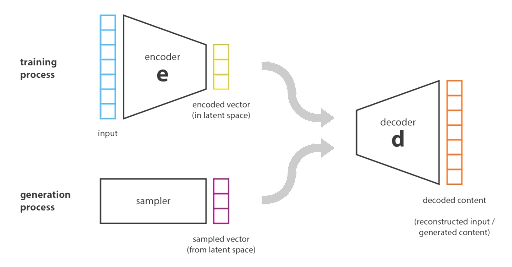

Unlike GANs, VAEs work by encoding data into a lower-dimensional space and then decoding it back. They are trained so that the decoded data is close to the original. During this process, they learn to generate new, similar data.

Unlike typical neural networks that adjust weights based on prediction errors, generative models often have different training dynamics. For instance, GANs involve a "game" where the generator and discriminator compete, leading the model to a point where it generates data almost indistinguishable from real samples.
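The "game" between the two networks is commonly written as a minimax objective. The symbols are not defined in the text above and are introduced here for illustration: $G$ is the generator, $D$ is the discriminator (which outputs the probability that its input is real), $p_{\text{data}}$ is the distribution of real data, and $p_z$ is the distribution of the random noise fed to the generator.

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to make this value large by scoring real samples near 1 and generated samples near 0, while the generator tries to make it small by producing samples that the discriminator scores as real.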

Discriminative AI Versus Generative AI

Most traditional machine learning and deep learning models fall under discriminative AI. They discriminate between different kinds of input data, as opposed to generative AI models, which generate new data similar to the input.

Discriminative AI and Generative AI are two sides of the machine learning coin, each with a distinct approach and set of applications.

Discriminative AI

Discriminative models learn to distinguish between different classes or labels of data. They map input data to a specific output. These models capture the boundaries between classes. Instead of modeling how each class generates data, they focus on modeling the decision boundary between the classes.

Consider a dataset of images containing cats and dogs. A discriminative model, like a Convolutional Neural Network (CNN), is trained to label an input image as either a cat or a dog. It learns the features and patterns that distinguish cats from dogs and vice versa.

Discriminative models are trained to classify data into a specific class or predict a numeric value. Models that can perform face recognition or predict the price of a house are examples of discriminative models.
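As a rough sketch of "modeling the decision boundary," the following perceptron learns a straight line that separates two classes. A perceptron is used here in place of a CNN purely to keep the example short. The two numeric features (body weight in kilograms and ear length in centimeters), the six labeled animals, and the function names are all hypothetical.

```python
def score(weights, bias, features):
    """Signed distance-like score: positive on one side of the boundary."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Adjust the boundary only when a labeled example is misclassified."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in samples:
            predicted = 1 if score(weights, bias, features) > 0 else 0
            update = learning_rate * (label - predicted)  # 0 when correct
            weights = [w + update * x for w, x in zip(weights, features)]
            bias += update
    return weights, bias


def classify(model, features):
    weights, bias = model
    return "dog" if score(weights, bias, features) > 0 else "cat"


# Hypothetical labeled data: (weight_kg, ear_length_cm), label 0 = cat, 1 = dog.
samples = [
    ((4, 6), 0), ((5, 7), 0), ((3, 5), 0),
    ((20, 10), 1), ((30, 12), 1), ((25, 11), 1),
]

model = train_perceptron(samples)
print(classify(model, (4.5, 6.5)))  # an unseen small animal
print(classify(model, (28, 12)))    # an unseen large animal
```

The model never learns what a cat or a dog looks like. It only learns where to draw the line between them, which is the defining trait of a discriminative model.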

Discriminative models are mostly trained through supervised learning, a common approach in deep learning where the model is trained to make predictions based on input/output pairs. It's called "supervised" because, much like a student learning under the supervision of a teacher, the model learns from labeled data. Neural networks can also be trained with unsupervised learning, which adapts them to tasks like clustering, where the aim is to separate data into distinct groups without pre-existing labels.

Generative AI

Generative models learn the underlying probability distribution of the data. They generate new data samples similar to the input data. These models try to understand how the data in each class is generated. By learning the distribution, they can produce new samples from the same distribution.

Some examples of generative AI models include:

Text Generation: Given a dataset of Shakespeare's writings, a generative model like a Recurrent Neural Network (RNN) or Transformer can produce new sentences or a full passage that mimics Shakespeare's style. The model doesn't copy its output from any existing sentence in the original works but rather generates new text based on the patterns and structures it has observed.

Image Creation: Generative Adversarial Networks (GANs) are popular for image generation tasks. Trained on a dataset of human faces, a GAN can generate images of entirely new faces that it has never seen before but which look convincingly real.
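To give a feel for generating text "based on the patterns observed," here is a deliberately tiny stand-in for the text-generation example above. It is a bigram (Markov chain) model, not an RNN or a Transformer: it records which word follows which in the training text and then samples a new sequence from those counts. The corpus is a short excerpt from Hamlet with punctuation removed, and the function names are hypothetical.

```python
import random
from collections import defaultdict


def build_bigram_model(text):
    """Record, for each word, every word that follows it in the text."""
    words = text.split()
    followers = defaultdict(list)
    for current, following in zip(words, words[1:]):
        followers[current].append(following)
    return followers


def generate_text(model, start, length, rng):
    """Sample a word sequence by repeatedly picking an observed follower."""
    word = start
    output = [word]
    for _ in range(length - 1):
        options = model.get(word)
        if not options:  # the word was never followed by anything
            break
        word = rng.choice(options)
        output.append(word)
    return " ".join(output)


corpus = (
    "to be or not to be that is the question "
    "whether tis nobler in the mind to suffer"
)

model = build_bigram_model(corpus)
rng = random.Random(42)  # fixed seed so the run is repeatable
generated = generate_text(model, "to", 12, rng)
print(generated)
```

Every pair of adjacent words in the output appeared somewhere in the corpus, but the sequence as a whole can differ from the original. Modern language models follow the same principle with far longer context and learned, rather than counted, probabilities.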

Generative AI models are often trained using self-supervised learning, a type of machine learning in which the data itself provides the supervision. In other words, the model learns to predict part of the input data from other parts of the input data.

For example, in a self-supervised learning task utilizing images, the model can predict a part of the image given the rest or predict the color version of a black-and-white image.
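The same idea applies to text, where a model can learn to predict the next word from the words before it. The sketch below shows how training pairs can be created from raw, unlabeled text with no human annotation. The sentence, the `context_size` parameter, and the function name are assumptions made for illustration.

```python
def next_word_pairs(text, context_size=3):
    """Turn unlabeled text into (context, target) training pairs."""
    words = text.split()
    pairs = []
    for i in range(context_size, len(words)):
        context = words[i - context_size:i]  # the visible part of the input
        target = words[i]                    # the hidden part to predict
        pairs.append((context, target))
    return pairs


sentence = "generative models learn to predict missing parts of data"

pairs = next_word_pairs(sentence)
for context, target in pairs:
    print(context, "->", target)
```

Each target comes from the text itself, so the "labels" are free. This is what makes it practical to train on very large datasets.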

The primary difference between the two approaches lies in their final goal. Discriminative models, trained using supervised or unsupervised techniques, aim to classify and distinguish between classes, focusing on their differences. In contrast, generative models, often trained using self-supervised learning, aim to understand and replicate the data's structure, focusing on generating new samples that resemble the original data.

Key Factors Contributing to the Rise of Generative AI

Several factors have contributed to the rise of generative AI as described below:

- Advancements in deep learning algorithms: New algorithms and architectures, especially in deep learning, have led to significant improvements in the capabilities of generative models. For instance, Generative Adversarial Networks (GANs) have been a game-changer in the field, enabling the generation of highly realistic images, audio, and even video.

- Availability of large-scale datasets: The rise of big data has provided the fuel for training more sophisticated generative models. These models often require large amounts of data to learn the underlying distribution effectively.

- Increased computational power: Advances in hardware technology, specifically GPUs, TPUs, and cloud-based distributed computing, have made it practical to train complex, multi-layered deep neural networks. These advances have also made it possible to work with larger datasets and more complicated models.

- Open-source software and libraries: Libraries like TensorFlow, PyTorch, and Keras allow developers to build, train, and deploy generative models. They offer high-level and flexible APIs that are instrumental in democratizing AI and fostering a culture of shared knowledge in the AI community.

- Wide range of use cases: Generative AI has potential applications in many fields including art, music, entertainment, fashion, healthcare, and more. This versatility increases interest in the field and drives further research and development.

Applications and Use Cases of Generative AI

Generative AI is useful in a wide range of applications across many fields. Here are some use cases along with sample applications:

- Text generation: AI models can generate human-like text given some prompt. For instance, a Large Language Model (LLM) powers OpenAI's popular ChatGPT system. ChatGPT can write essays, answer questions, create written content for websites, and even write poetry.

- Art and design: Generative AI can craft new pieces of digital art and assist in design. For instance, Midjourney uses generative AI to create and transform images in unique and artistic ways. Stable Diffusion, a well-known text-to-image model released in 2022, is used by many image-generation tools.

- Music composition: Generative models like OpenAI's MuseNet and Meta's AudioCraft can create original pieces of music by learning from a wide range of musical styles and compositions.

- AI-assisted code: Large language models trained on publicly available code repositories are used to build AI assistants for developers. GitHub Copilot is a popular tool that integrates with tools like Visual Studio Code to automatically generate code for mainstream programming languages.

- Drug discovery: Generative models can propose candidate molecular structures for potential new drugs. For example, Insilico Medicine uses generative models to design new molecules for drug discovery.

- Image and video enhancement: Generative AI can enhance the quality of images and videos. For instance, FaceApp uses generative models to transform faces in photos and can alter a person's age, gender, or hairstyle.

- Fashion and retail: Generative AI can create new fashion designs and visualize clothes on different body types. Stitch Fix uses generative models to create new fashion designs based on user preferences and trends.

All of the above applications are powered by foundation models. In the next section of this series, you'll take a closer look at foundation models.