How to Use the Embeddings Endpoint in Vultr Serverless Inference

Updated on November 27, 2024

The Vultr Serverless Inference embeddings endpoint converts text into vector representations using AI models. These vectors capture the meaning of the input, so your applications can compare pieces of text numerically and support tasks such as similarity search and other natural language processing workflows.
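To illustrate how embedding vectors support similarity search, the following minimal sketch ranks candidate vectors by cosine similarity to a query vector. The function names and the three-dimensional vectors are hypothetical and made up for illustration. Real embeddings returned by a model have many more dimensions, and cosine similarity is only one common way to compare them.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def rank_by_similarity(query, candidates):
    """Sort (label, vector) pairs from most to least similar to the query."""
    scored = [(label, cosine_similarity(query, vec)) for label, vec in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy vectors standing in for real embeddings (values are made up).
query = [1.0, 0.0, 1.0]
documents = [
    ("doc_a", [0.9, 0.1, 1.1]),
    ("doc_b", [0.0, 1.0, 0.0]),
]
ranking = rank_by_similarity(query, documents)
print(ranking[0][0])  # label of the closest candidate
```

In practice, you would replace the toy vectors with embeddings generated for your own text and keep the comparison logic unchanged.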

Follow this guide to use the embeddings endpoint in your Vultr account through the Vultr Customer Portal.


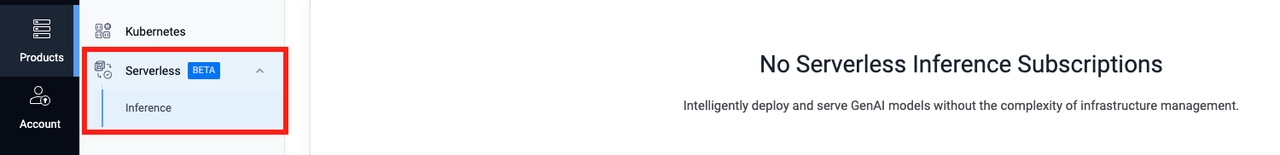

Navigate to Products, click Serverless, and then click Inference.

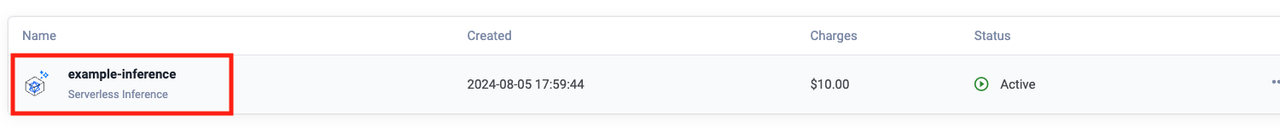

Click your target inference service to open its management page.

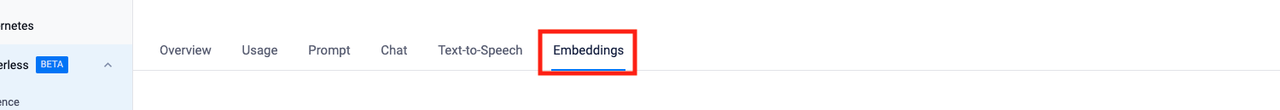

Open the Embeddings page.

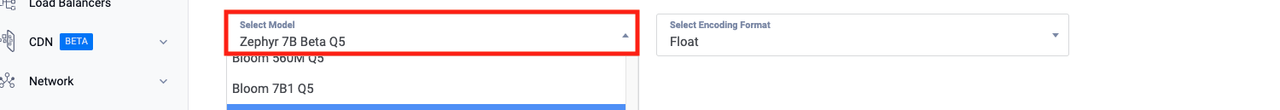

Select a preferred model.

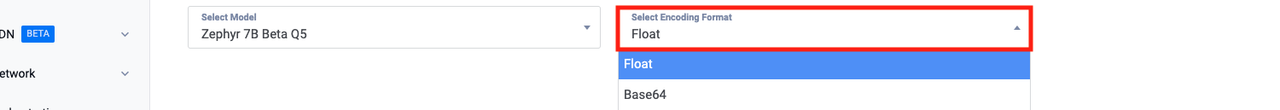

Select an encoding format.

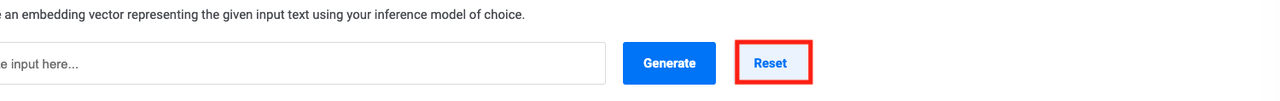

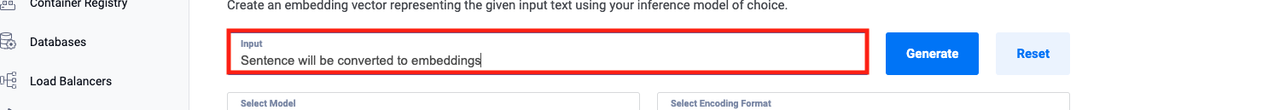

Provide an input.

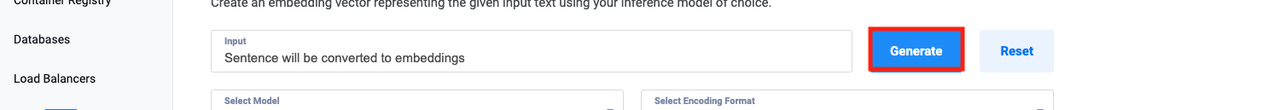

Click Generate to create embeddings.

Click Reset to clear the current input and generate embeddings for a new one.
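The portal steps above (choose a model, choose an encoding format, provide an input, generate) map naturally onto a single API request. The following sketch shows what such a request might look like from Python. It rests on assumptions that this guide does not confirm: the URL `https://api.vultrinference.com/v1/embeddings`, the bearer-token header, the `model`, `input`, and `encoding_format` fields, and the OpenAI-style response shape. The helper names are hypothetical. Check your inference service's API details before relying on any of these.

```python
import json
import urllib.request

# Assumption: an OpenAI-style embeddings route is available at this URL.
# Confirm the actual URL for your inference service before use.
EMBEDDINGS_URL = "https://api.vultrinference.com/v1/embeddings"


def build_request(api_key, model, text, encoding_format="float"):
    """Build (but do not send) a POST request for one input string."""
    # Assumed field names, mirroring the model, encoding format,
    # and input choices made in the portal.
    payload = {"model": model, "input": text, "encoding_format": encoding_format}
    return urllib.request.Request(
        EMBEDDINGS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_vectors(body):
    """Pull embedding vectors out of an assumed OpenAI-style JSON response."""
    parsed = json.loads(body)
    items = sorted(parsed["data"], key=lambda item: item["index"])
    return [item["embedding"] for item in items]


def generate_embeddings(api_key, model, text):
    """Send the request and return the parsed vectors (requires network access)."""
    request = build_request(api_key, model, text)
    with urllib.request.urlopen(request, timeout=30) as response:
        return extract_vectors(response.read().decode("utf-8"))


# Hand-written sample in the assumed response shape, not real service output.
sample_body = '{"data": [{"index": 0, "embedding": [0.12, -0.03, 0.57]}]}'
print(extract_vectors(sample_body))
```

To try it against a live service, call `generate_embeddings` with your own API key, the name of the model you selected in the portal, and the input text. The sketch keeps request building and response parsing separate so each part can be checked without network access.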