Introduction

The Vultr Load Balancer is a fully managed solution to distribute traffic across multiple backend servers. If you are new to Vultr Load Balancers, we recommend reading the Load Balancer Quickstart Guide first.

In this guide, you'll learn the details of each Vultr Load Balancer feature and how to configure it. Features are listed alphabetically.

Algorithm

You'll find this feature in two places: the Load Balancer Configuration section of the Add Load Balancer page and the Configuration menu of the Manage Load Balancer page.

Two load balancing algorithms are available:

- Roundrobin selects servers in turn, without regard for traffic. This is the default algorithm.

- Leastconn selects the server with the fewest connections. This is a good choice if your application has long-running sessions.
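As a rough illustration of how the two algorithms differ, the following Python sketch picks a backend under each policy. The class names and the connection bookkeeping are hypothetical and are not part of any Vultr API; the managed load balancer tracks connections internally.

```python
from itertools import cycle


class RoundRobin:
    """Selects servers in turn, regardless of their current load."""

    def __init__(self, servers):
        self._servers = cycle(servers)

    def pick(self):
        return next(self._servers)


class LeastConn:
    """Selects the server with the fewest active connections."""

    def __init__(self, servers):
        # Active connection count per server (hypothetical bookkeeping).
        self.active = {server: 0 for server in servers}

    def pick(self):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection to `server` closes.
        self.active[server] -= 1


rr = RoundRobin(["a", "b", "c"])
print([rr.pick() for _ in range(4)])  # ['a', 'b', 'c', 'a']

lc = LeastConn(["a", "b"])
first, second = lc.pick(), lc.pick()  # 'a', then 'b'
lc.release(second)                    # the connection to 'b' ends
print(lc.pick())                      # 'b', because 'a' is still busy
```

With long-running sessions, round robin can keep sending new users to a server that is still busy, while the least-connections policy steers them to the idle one.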

Firewall Rules

This is an optional feature. Vultr Load Balancers can define an internal firewall with similar capabilities to the stand-alone Vultr Firewall. You'll find this feature in two places: the Add Load Balancer page and the Networking menu of the Manage Load Balancer page.

Add firewall rules to the load balancer if you need to restrict the forwarding rule traffic.

Note: Ports 65300 to 65310 are not available because they are used internally by the load balancer.

Cloudflare

If you enter cloudflare as your source instead of an IP address range, the load balancer firewall will automatically allow Cloudflare IPs.
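The constraints above can be checked before you submit a rule. The following is a minimal sketch, assuming a rule is just a port plus a source; the function name `validate_firewall_rule` and its return shape are hypothetical and do not mirror the Vultr interface.

```python
import ipaddress

# Ports 65300 to 65310 (inclusive) are used internally by the load balancer.
RESERVED_PORTS = range(65300, 65311)


def validate_firewall_rule(port, source):
    """Return a list of problems found in a rule; an empty list means none."""
    problems = []
    if not 1 <= port <= 65535:
        problems.append(f"port {port} is outside 1-65535")
    elif port in RESERVED_PORTS:
        problems.append(f"port {port} is reserved by the load balancer")

    # The literal string "cloudflare" is accepted in place of an IP range.
    if source != "cloudflare":
        try:
            ipaddress.ip_network(source, strict=False)
        except ValueError:
            problems.append(f"source {source!r} is not an IP range or 'cloudflare'")
    return problems


print(validate_firewall_rule(443, "cloudflare"))      # []
print(validate_firewall_rule(65305, "203.0.113.0/24"))
```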

Force HTTP to HTTPS

This will force all HTTP traffic to redirect to HTTPS. Before setting this option, install an SSL certificate on the load balancer and configure HTTPS forwarding.

You'll find this feature in two places: the Add Load Balancer page and the Configuration tab of the Manage Load Balancer page.

Forwarding Rules

Forwarding rules define how to map a Load Balancer port to an Instance port. You may choose HTTP, HTTPS, or TCP protocol. For example, you can forward HTTP port 80 on the load balancer to port 8000 on the instances. You'll find this feature in two places: the Add Load Balancer page and the Forwarding Rules menu of the Manage Load Balancer page.

You can create a maximum of 15 forwarding rules.
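To make the mapping concrete, here is a small sketch that models a forwarding rule and enforces the 15-rule limit. The `ForwardingRule` field names and the `add_rule` helper are illustrative assumptions, not the names used by the Vultr portal or API.

```python
from dataclasses import dataclass

MAX_RULES = 15
PROTOCOLS = {"HTTP", "HTTPS", "TCP"}


@dataclass(frozen=True)
class ForwardingRule:
    lb_protocol: str        # protocol the load balancer listens on
    lb_port: int            # port the load balancer listens on
    instance_protocol: str  # protocol used toward the instances
    instance_port: int      # port on the instances


def add_rule(rules, rule):
    """Return a new list with `rule` appended, or raise ValueError."""
    if len(rules) >= MAX_RULES:
        raise ValueError(f"a load balancer can have at most {MAX_RULES} forwarding rules")
    for protocol in (rule.lb_protocol, rule.instance_protocol):
        if protocol not in PROTOCOLS:
            raise ValueError(f"unsupported protocol: {protocol}")
    return rules + [rule]


# The example from the text: HTTP port 80 on the load balancer
# forwarded to port 8000 on the instances.
rules = add_rule([], ForwardingRule("HTTP", 80, "HTTP", 8000))
```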

Notes about HTTPS Support

Load balancers support HTTPS via two methods: You can either install your SSL certificate on the load balancer or install the certificate on each instance and transparently pass the session via TCP. Choose the option appropriate for your situation.

Option 1: SSL Offloading / SSL Termination - Install SSL certificate on the load balancer

In this configuration, users connect to the load balancer via HTTPS, and the load balancer proxies the connection to the instances. This is known as SSL Offloading or SSL Termination.

Choose HTTPS protocol for the load balancer. The configuration screen prompts you to install the SSL certificate.

The instance protocol can be HTTPS or HTTP, depending on your requirements.

Option 2: SSL Passthrough - Install SSL certificate on the instances

In this configuration, the load balancer performs SSL Passthrough, which passes the TCP session to the instances for HTTPS handling.

Choose TCP protocol for the load balancer and install your SSL certificate on each backend instance.

Health Checks

Health checks verify that your instances are healthy and reachable. If an instance fails the health check, the load balancer cuts traffic to that instance.

You'll find this feature in two places: the Add Load Balancer page and the Health Checks menu of the Manage Load Balancer page.

Use these parameters to configure a health check.

Protocol

Valid protocols are HTTP, HTTPS, and TCP.

- The HTTP and HTTPS health checks treat any 2XX status code as a success. Any other status code is a failure.

- The TCP health check considers an open port a success. A closed port is a failure.
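The pass/fail criteria can be expressed in a few lines. This is a sketch of the rules as described, not the load balancer's actual implementation; both function names are hypothetical, and the five-second default mirrors the response timeout described later in this guide.

```python
import socket


def http_check_passes(status_code):
    """Any 2XX status counts as success; everything else is a failure."""
    return 200 <= status_code <= 299


def tcp_check_passes(host, port, timeout=5.0):
    """Success means the TCP port accepted a connection within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, and timeouts.
        return False


print(http_check_passes(204))  # True
print(http_check_passes(301))  # False: redirects are not 2XX
```

Note that a redirect (3XX) counts as a failure under this rule, so point the health check at a path that answers directly.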

Port

The TCP, HTTP, or HTTPS port to test.

Interval between health checks

The default interval between health checks is 15 seconds.

Response timeout

The number of seconds the virtual Load Balancer waits for a response. If no response is received before the timeout, the health check fails. The default value is five seconds.

Unhealthy Threshold

The number of times an instance must consecutively fail a health check before the Load Balancer stops forwarding traffic to it. The default value is five failures.

Failures must be consecutive before the load balancer considers the instance unhealthy. For example, if the Unhealthy Threshold is five, and the instance fails four times, then succeeds one time, then fails four times, the instance is still considered healthy.

Healthy Threshold

The number of times an instance must consecutively pass a health check before the Load Balancer forwards traffic to it. The default value is five successes.

The instance must pass the health check this many times, consecutively. For example, if the Healthy Threshold is five, and the unhealthy instance succeeds four times, then fails one time, then succeeds four times, the instance is still considered unhealthy.
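The consecutive-count behavior of both thresholds can be modeled as a small state machine. The sketch below is an illustration only: the `HealthTracker` class is hypothetical, and it assumes an instance starts out healthy, which the text does not state.

```python
class HealthTracker:
    """Tracks one instance's state from a stream of health check results."""

    def __init__(self, unhealthy_threshold=5, healthy_threshold=5, healthy=True):
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy_threshold = healthy_threshold
        self.healthy = healthy  # assumed initial state
        self._streak = 0        # consecutive results that contradict the state

    def record(self, passed):
        """Record one check result and return the resulting state."""
        if passed == self.healthy:
            # A result that agrees with the current state resets the streak.
            self._streak = 0
            return self.healthy
        self._streak += 1
        limit = self.unhealthy_threshold if self.healthy else self.healthy_threshold
        if self._streak >= limit:
            self.healthy = not self.healthy
            self._streak = 0
        return self.healthy


tracker = HealthTracker()
# Four failures, one success, four failures: never five in a row.
for result in [False] * 4 + [True] + [False] * 4:
    tracker.record(result)
print(tracker.healthy)  # True
```

Under the defaults described above, and assuming checks run at the stated 15-second interval, a failing instance would stop receiving traffic only after five back-to-back failures, which is on the order of a minute.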

HTTP Path

The HTTP path that the load balancer should test.

- The default value is /.

- This option is only present if the protocol is HTTP or HTTPS.

- HTTP path allows only numbers, letters, and the following characters:

-_%+.=&/
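A quick way to check a path against this character set is a regular expression. The sketch below assumes "letters" means ASCII letters and checks only the allowed characters; the function name `is_valid_http_path` is hypothetical.

```python
import re

# Numbers, letters, and the characters - _ % + . = & /
_HTTP_PATH = re.compile(r"[A-Za-z0-9_%+.=&/-]+")


def is_valid_http_path(path):
    """Return True if `path` uses only the characters the field allows."""
    return _HTTP_PATH.fullmatch(path) is not None


print(is_valid_http_path("/path/to/my/testpage.htm"))  # True
print(is_valid_http_path("/health?ready=1"))           # False: '?' is not allowed
```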

For example, if your instances have a test page at:

https://www.example.com/path/to/my/testpage.htm

... then your HTTP path should be set to:

/path/to/my/testpage.htm

Label

This is a descriptive label that identifies your load balancer.

You'll find this feature in the Load Balancer Configuration section.

Load Balancer Location

This is the first option listed on the Add Load Balancer page. The load balancer must be in the same location as the cloud servers you connect to it. You can filter the location list by continent.

Metrics

Metrics are available after your load balancer has been running for a few minutes. You can view your metrics on the Metrics tab of the Manage Load Balancer page.

Overview

On the Overview tab of the Manage Load Balancer page, you'll find the following information, starting at the top of the page:

- Label

- Datacenter location

- IPv4

- IPv6

- The number of healthy & unhealthy instances

- SSL Redirect status

- Current charges

- Linked instances

You can attach instances to the load balancer, or detach them from it, on this page.

Proxy Protocol

Proxy Protocol forwards client information to the backend nodes. If this feature is enabled, you must configure your backend nodes to accept Proxy Protocol. For more information, see the Proxy Protocol documentation.
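To show what "client information" looks like on the backend, here is a sketch that parses a Proxy Protocol header. This guide does not say which protocol version the load balancer sends; the example assumes the human-readable version 1 line defined in the HAProxy PROXY protocol specification, and the function name `parse_proxy_v1` is hypothetical. In practice, most web servers can be configured to accept the header without custom code.

```python
def parse_proxy_v1(header: bytes):
    """Parse a PROXY protocol v1 line; return None if the source is UNKNOWN."""
    if not header.endswith(b"\r\n"):
        raise ValueError("header must end with CRLF")
    parts = header[:-2].decode("ascii").split(" ")
    if parts[0] != "PROXY":
        raise ValueError("not a PROXY protocol v1 header")
    if len(parts) >= 2 and parts[1] == "UNKNOWN":
        return None  # the sender could not determine the client address
    if len(parts) != 6 or parts[1] not in ("TCP4", "TCP6"):
        raise ValueError("malformed PROXY protocol v1 header")
    family, src_ip, dst_ip, src_port, dst_port = parts[1:]
    return {
        "family": family,
        "client_ip": src_ip,
        "client_port": int(src_port),
        "proxy_ip": dst_ip,
        "proxy_port": int(dst_port),
    }


# Example line using documentation-only IP addresses.
info = parse_proxy_v1(b"PROXY TCP4 198.51.100.7 203.0.113.10 56324 443\r\n")
print(info["client_ip"])  # 198.51.100.7
```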

You'll find this feature in the Load Balancer Configuration section.

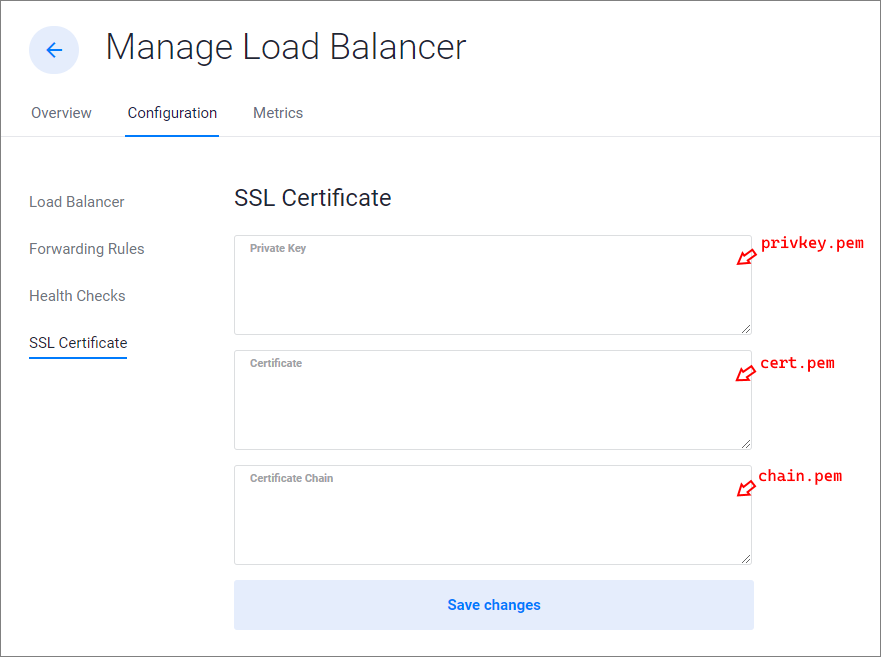

SSL Certificate

If you have added an HTTPS forwarding rule, you can add an SSL certificate to your load balancer. Enter your Private Key, Certificate, and Certificate Chain in this section. If you are using a Let's Encrypt certificate, you should have three files: privkey.pem, cert.pem, and chain.pem. Copy your certificate files into the fields as shown.

- Copy privkey.pem to the Private Key field.

- Copy cert.pem to the Certificate field.

- Copy chain.pem to the Certificate Chain field.

For more information, see our article Use a Wildcard Let's Encrypt Certificate with Vultr Load Balancer.

You'll find this feature in two places: the Add Load Balancer page and the SSL Certificate menu of the Manage Load Balancer page.

Reverse DNS

Vultr allows you to create a reverse DNS record for your load balancer. Reverse DNS uses PTR records to map an IP address to a Fully Qualified Domain Name (FQDN). Setting reverse DNS is important for SMTP mail servers, but most other applications don't need this record.
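For reference, the PTR record name is derived from the IP address itself. The sketch below uses Python's standard `ipaddress` module to show the name that a reverse lookup queries; the helper name `ptr_name` and the addresses are illustrative, taken from documentation-only ranges.

```python
import ipaddress


def ptr_name(ip):
    """Return the DNS name that holds the PTR record for `ip`."""
    return ipaddress.ip_address(ip).reverse_pointer


print(ptr_name("192.0.2.10"))   # 10.2.0.192.in-addr.arpa
print(ptr_name("2001:db8::1"))  # a nibble-reversed name ending in ip6.arpa
```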

To set reverse DNS for your load balancer:

- Navigate to the Manage Load Balancer page.

- Click the Configuration tab.

- Click the Networking menu.

- The reverse DNS section is at the bottom of the page.

Sticky Sessions

Some applications require users to continue to connect to the same backend server. You must supply a cookie name when configuring sticky sessions.

The load balancer uses application-controlled session persistence for sticky sessions. Your application generates a cookie that determines the duration of session stickiness. The load balancer still issues its own session cookie, but that cookie follows the lifetime of the application cookie.

This makes sticky sessions more efficient, ensuring that users are never routed to a server after their local session cookie has already expired. However, it's more complex to implement because it requires additional integration between the load balancer and the application.

You'll find this feature in the Load Balancer Configuration section.

VPC Network

The VPC option allows you to change the network interface of the load balancer. If your instances are attached to a VPC, you can elect to forward traffic via the VPC instead of the public network. Changing the network interface will briefly disrupt network connections to the instances.

You'll find this feature in two places: the Add Load Balancer page and the Networking menu of the Manage Load Balancer page.

Manage the Load Balancer via API

The Vultr API offers several Load Balancer management endpoints.

Load Balancer

- Create a new load balancer in a particular region.

- List the load balancers in your account.

- Update information for a load balancer.

Load Balancer Rules

- Create a new forwarding rule for a load balancer.

- List the forwarding rules for a load balancer.

- Get information for a forwarding rule on a load balancer.

- Delete a forwarding rule on a load balancer.
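As a starting point, the sketch below builds requests for two of these operations with Python's standard library. The base URL, the endpoint paths, the Bearer-token header, and the forwarding rule field names reflect our understanding of the Vultr API v2 and should be treated as assumptions; confirm them in the API reference before use. `YOUR_API_KEY` and `LB_ID` are placeholders, and the helper names are hypothetical.

```python
import json
import urllib.request

API_BASE = "https://api.vultr.com/v2"  # assumed base URL


def build_request(api_key, method, path, body=None):
    """Build (but do not send) an authenticated API request."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    request = urllib.request.Request(f"{API_BASE}{path}", data=data, method=method)
    request.add_header("Authorization", f"Bearer {api_key}")
    if data is not None:
        request.add_header("Content-Type", "application/json")
    return request


def send(request):
    """Send a request and decode the JSON response, if there is a body."""
    with urllib.request.urlopen(request) as response:
        payload = response.read()
    return json.loads(payload) if payload else None


# List the load balancers in your account.
list_lbs = build_request("YOUR_API_KEY", "GET", "/load-balancers")

# Create a forwarding rule (field names are assumptions).
new_rule = build_request(
    "YOUR_API_KEY",
    "POST",
    "/load-balancers/LB_ID/forwarding-rules",
    body={
        "frontend_protocol": "http",
        "frontend_port": 80,
        "backend_protocol": "http",
        "backend_port": 8000,
    },
)
```

Nothing is sent until you call `send(list_lbs)` or `send(new_rule)` with a real API key and load balancer ID.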

More Information

- If you are new to load-balancing network concepts, see the Vultr Load Balancer Quickstart Guide.

- The Vultr Load Balancer has its own integrated firewall; learn more in our article How to Use the Vultr Load Balancer Firewall.

- The Vultr Firewall can use a Load Balancer as an IP source. We explain more in How to Use the Vultr Firewall with a Vultr Load Balancer.

- Explore an advanced scenario with private networking and both types of firewalls in How to Configure a Vultr Load Balancer with Private Networking.

- Learn how to configure wildcard SSL on your load balancer in our guide Use a Wildcard Let's Encrypt Certificate with Vultr Load Balancer.