Introduction

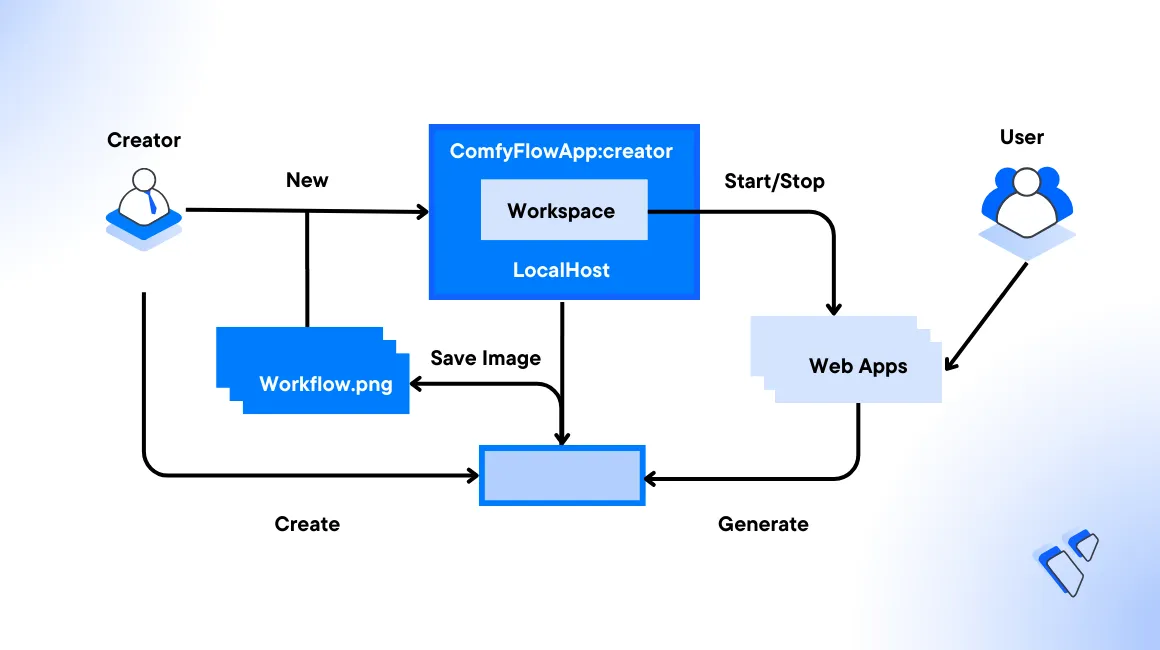

ComfyUI is an open-source, node-based application that lets you design, test, and execute diffusion model pipelines using a graphical web interface. It runs advanced Stable Diffusion pipelines through a flowchart interface of interconnected nodes and supports checkpoint loading, re-executing workflows, and loading workflows from generated PNG files.

This article explains how to deploy ComfyUI on a Vultr Cloud GPU server. You will install the project dependencies, run ComfyUI as a system service to execute diffusion model pipelines on the server, and install the ComfyUI Manager to manage custom nodes and models.

Prerequisites

Before you begin:

- Deploy an NVIDIA A100 Vultr Cloud GPU instance running Ubuntu with at least 20 GB GPU memory.

- Set up a new domain A record pointing to the server's IP address. For example, comfyui.example.com.

- Access the server using SSH as a non-root sudo user. For example, linuxuser.

- Update the server.
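One way to confirm the GPU memory prerequisite after logging in is to query `nvidia-smi`. The sketch below assumes the NVIDIA driver and the `nvidia-smi` tool are already present on the instance; the helper names `parse_gpu_memory` and `query_gpus` are illustrative and not part of any ComfyUI or Vultr tooling.

```python
import shutil
import subprocess

MIN_GPU_MEMORY_MIB = 20 * 1024  # 20 GB, the minimum from the prerequisites


def parse_gpu_memory(csv_text):
    """Parse 'name, memory.total' CSV rows (values in MiB, no header, no units)."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, _, memory = line.rpartition(",")
        gpus.append((name.strip(), int(memory.strip())))
    return gpus


def query_gpus():
    """Return [(name, memory_mib)] from nvidia-smi, or [] if it is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_memory(result.stdout)


for name, memory_mib in query_gpus():
    status = "OK" if memory_mib >= MIN_GPU_MEMORY_MIB else "below 20 GB"
    print(f"{name}: {memory_mib} MiB ({status})")
```

If the script prints nothing, `nvidia-smi` was not found or returned an error, which usually means the driver is not installed yet.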

Install ComfyUI

Follow the steps below to install ComfyUI in your working directory on the server.

Switch to your user home directory.

console$ cd

Clone the ComfyUI project repository using Git.

console$ git clone https://github.com/comfyanonymous/ComfyUI.git

Switch to the ComfyUI directory.

console$ cd ComfyUI

Install the required PyTorch, numpy<2, and xformers dependency packages.

console$ sudo pip install "numpy<2" torch==2.1.0+cu121 torchvision==0.16.0+cu121 torchaudio==2.1.0+cu121 --extra-index-url https://download.pytorch.org/whl xformers

The above command installs the following packages:

- torch: A machine learning library that provides a flexible and dynamic computational graph for deep learning tasks.

- torchvision: A PyTorch library used for computer vision tasks. It includes utilities for image and video datasets that handle image classification and object detection tasks.

- torchaudio: A PyTorch library used for audio processing tasks. It provides audio loading, transformations, and common audio datasets for deep learning applications.

- xformers: A Python library for implementing transformer-based models and attention mechanisms.
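After the installation finishes, a short check can confirm which versions were installed and whether PyTorch can see the GPU. This is a minimal sketch using the standard library's `importlib.metadata` and PyTorch's `torch.cuda.is_available()`; the function names are illustrative.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each package name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def cuda_summary():
    """Report whether PyTorch can see a CUDA device; None if torch is missing."""
    try:
        import torch
    except ImportError:
        return None
    return {
        "torch": torch.__version__,
        "cuda_build": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
    }


packages = ["numpy", "torch", "torchvision", "torchaudio", "xformers"]
print(installed_versions(packages))
print(cuda_summary())
```

Because the packages above were installed with sudo, run the check with the same interpreter that will run ComfyUI so that both see the same site-packages directory.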

Install additional ComfyUI dependencies using the requirements.txt file.

console$ pip install -r requirements.txt

Output:

    Collecting kornia-rs>=0.1.0
      Downloading kornia_rs-0.1.7-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (1.6 MB)
         ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.6/1.6 MB 133.8 MB/s eta 0:00:00
    Collecting cffi>=1.0
      Downloading cffi-1.17.1-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (446 kB)
         ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 446.2/446.2 KB 103.6 MB/s eta 0:00:00
    Collecting pycparser
      Downloading pycparser-2.22-py3-none-any.whl (117 kB)
         ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 117.6/117.6 KB 65.5 MB/s eta 0:00:00
    Collecting propcache>=0.2.0
      Downloading propcache-0.2.0-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (208 kB)
         ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 208.9/208.9 KB 90.2 MB/s eta 0:00:00
    Requirement already satisfied: idna>=2.0 in /usr/lib/python3/dist-packages (from yarl<2.0,>=1.12.0->aiohttp->-r requirements.txt (line 10)) (3.3)
    Requirement already satisfied: mpmath<1.4,>=1.1.0 in /usr/local/lib/python3.10/dist-packages (from sympy->torch->-r requirements.txt (line 1)) (1.3.0)
    Installing collected packages: trampoline, sentencepiece, tqdm, scipy, safetensors, regex, pycparser, psutil, propcache, packaging, multidict, kornia-rs, frozenlist, einops, async-timeout, aiohappyeyeballs, yarl, huggingface-hub, cffi, aiosignal, torchsde, tokenizers, soundfile, kornia, aiohttp, transformers, spandrel
    Successfully installed aiohappyeyeballs-2.4.3 aiohttp-3.10.10 aiosignal-1.3.1 async-timeout-4.0.3 cffi-1.17.1 einops-0.8.0 frozenlist-1.5.0 huggingface-hub-0.26.2 kornia-0.7.4 kornia-rs-0.1.7 multidict-6.1.0 packaging-24.2 propcache-0.2.0 psutil-6.1.0 pycparser-2.22 regex-2024.11.6 safetensors-0.4.5 scipy-1.14.1 sentencepiece-0.2.0 soundfile-0.12.1 spandrel-0.4.0 tokenizers-0.20.3 torchsde-0.2.6 tqdm-4.67.0 trampoline-0.1.2 transformers-4.46.2 yarl-1.17.1

Switch to the models/checkpoints directory.

console$ cd models/checkpoints

Open the put_checkpoints_here file using a text editor such as nano.

console$ nano put_checkpoints_here

Add the following contents to the file.

    # Checkpoints

    ### SDXL
    wget -c https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0/resolve/main/sd_xl_base_1.0.safetensors -P ./
    #wget -c https://huggingface.co/stabilityai/stable-diffusion-xl-refiner-1.0/resolve/main/sd_xl_refiner_1.0.safetensors -P ./

    # SD1.5
    wget -c https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned-emaonly.ckpt -P ./

    # SD2
    #wget -c https://huggingface.co/stabilityai/stable-diffusion-2-1-base/resolve/main/v2-1_512-ema-pruned.safetensors -P ./
    #wget -c https://huggingface.co/stabilityai/stable-diffusion-2-1/resolve/main/v2-1_768-ema-pruned.safetensors -P ./

Save and close the file.

The above configuration downloads the Stable Diffusion XL and Stable Diffusion 1.5 models to the ComfyUI models/checkpoints directory using the wget utility. The -P ./ option saves each file to the current directory because you run the file from within models/checkpoints. Lines that start with # are comments and do not run. Modify the file to include new model checkpoint URLs from sources such as Hugging Face to enable them in ComfyUI.

Run the file using bash to download the specified models.

console$ bash put_checkpoints_here

Switch to your user home directory.

console$ cd

Move the ComfyUI project directory to a system-wide location such as /opt.

console$ sudo mv ComfyUI /opt
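Before you continue, it can help to confirm that the checkpoint files are present and fully downloaded. The sketch below assumes the project now lives in /opt/ComfyUI; it searches the checkpoints directory recursively and prints each model file with its size. The helper name `find_checkpoints` is illustrative.

```python
from pathlib import Path

CHECKPOINT_SUFFIXES = {".safetensors", ".ckpt"}


def find_checkpoints(directory):
    """Return (relative path, size in bytes) for each checkpoint file found."""
    root = Path(directory)
    if not root.is_dir():
        return []
    found = []
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix in CHECKPOINT_SUFFIXES:
            found.append((str(path.relative_to(root)), path.stat().st_size))
    return found


# Assumed location after moving the project to /opt.
for name, size in find_checkpoints("/opt/ComfyUI/models/checkpoints"):
    print(f"{name}: {size / 1024**3:.2f} GiB")
```

A file that is far smaller than expected usually indicates an interrupted download; because the script uses wget -c, running it again resumes the transfer.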

Set Up ComfyUI as a System Service

Follow the steps below to set up ComfyUI as a system service to automatically run and manage the application's processes on your server.

Create a new comfyui.service system service file.

console$ sudo nano /etc/systemd/system/comfyui.service

Add the following configurations to the file. Replace /opt/ComfyUI with your actual ComfyUI project directory path.

    [Unit]
    Description=ComfyUI System Service
    After=network.target

    [Service]
    WorkingDirectory=/opt/ComfyUI
    ExecStart=python3 main.py

    [Install]
    WantedBy=multi-user.target

Save and close the file.

The above configuration creates a new comfyui system service to manage the ComfyUI application processes on the server.

Reload the systemd daemon to apply the new service changes.

console$ sudo systemctl daemon-reload

Enable the ComfyUI system service.

console$ sudo systemctl enable comfyui

Start the ComfyUI service.

console$ sudo systemctl start comfyui

View the ComfyUI service status and verify that it's active.

console$ sudo systemctl status comfyui
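Besides the service status, you can confirm that ComfyUI is accepting connections on its default port. The following is a minimal sketch that attempts a TCP connection to 127.0.0.1:8188 using only the standard library; `port_is_open` is an illustrative helper name.

```python
import socket


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# 8188 is ComfyUI's default port and 127.0.0.1 its default listen address.
print("ComfyUI reachable:", port_is_open("127.0.0.1", 8188))
```

The service can take a short while to load before the port opens, so a False result immediately after starting the service is not necessarily an error.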

Configure Nginx as a Reverse Proxy to Access ComfyUI

ComfyUI listens for connection requests on the localhost port 8188 by default unless you set a specific IP address and port using the --listen and --port options. Set up a reverse proxy such as Nginx to forward incoming connection requests to the backend port 8188 and enable access to the ComfyUI interface. Follow the steps below to install and configure Nginx to forward HTTP requests to the ComfyUI interface using your comfyui.example.com domain.

Install Nginx.

console$ sudo apt install nginx -y

Create a new comfyui.conf Nginx virtual host configuration in the sites-available directory.

console$ sudo nano /etc/nginx/sites-available/comfyui.conf

Add the following configurations to the file. Replace comfyui.example.com with your actual domain.

    upstream backend {
        server 127.0.0.1:8188;
    }

    server {
        listen 80;
        listen [::]:80;
        server_name comfyui.example.com;

        proxy_set_header Host $host;
        proxy_http_version 1.1;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_set_header Sec-WebSocket-Extensions $http_sec_websocket_extensions;
        proxy_set_header Sec-WebSocket-Key $http_sec_websocket_key;
        proxy_set_header Sec-WebSocket-Version $http_sec_websocket_version;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "Upgrade";

        location / {
            proxy_pass http://backend$request_uri;
        }
    }

Save and close the file.

Link the configuration file to the sites-enabled directory to activate it.

console$ sudo ln -s /etc/nginx/sites-available/comfyui.conf /etc/nginx/sites-enabled/

Test the Nginx configuration for errors.

console$ sudo nginx -t

Output:

    nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
    nginx: configuration file /etc/nginx/nginx.conf test is successful

Restart Nginx to apply the configuration changes.

console$ sudo systemctl restart nginx
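To check the virtual host from the server itself, before DNS or the firewall come into play, you can send a request to the local Nginx while presenting your domain in the Host header. This is a minimal sketch using the standard library's `http.client`; `http_status` is an illustrative helper name, and comfyui.example.com stands in for your own domain.

```python
import http.client


def http_status(host, port, host_header, path="/", timeout=5.0):
    """Send a GET request with an explicit Host header and return the status."""
    connection = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        connection.request("GET", path, headers={"Host": host_header})
        return connection.getresponse().status
    finally:
        connection.close()


try:
    # Talk to the local Nginx on port 80 while presenting the virtual host name.
    print(http_status("127.0.0.1", 80, "comfyui.example.com"))
except (OSError, http.client.HTTPException) as error:
    print("Nginx not reachable:", error)
```

A 200 status suggests that Nginx matched the server block and reached ComfyUI, while a 502 status typically means Nginx is running but the backend on port 8188 is not.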

Security

Uncomplicated Firewall (UFW) is active on Vultr Ubuntu servers by default. Follow the steps below to allow connections to the HTTP port 80 and the HTTPS port 443 through the firewall so that users can access the ComfyUI interface.

View the UFW status to verify that the firewall is active on your server.

console$ sudo ufw status

Allow SSH connections on port 22 and enable UFW if the firewall status is inactive.

console$ sudo ufw allow 22/tcp && sudo ufw enable

Allow network connections to the HTTP port 80.

console$ sudo ufw allow 80/tcp

Allow connections to the HTTPS port 443.

console$ sudo ufw allow 443/tcp

Reload the UFW configuration to apply the firewall changes.

console$ sudo ufw reload
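If you want to double-check the resulting rules programmatically, the sketch below parses the text printed by sudo ufw status and reports any of the three expected rules that are missing. The sample text is an illustrative example of the usual table layout, not output captured from a real server, and `allowed_ports` is a hypothetical helper name.

```python
def allowed_ports(ufw_status_text):
    """Extract 'port/proto' entries with an ALLOW action from ufw status text."""
    allowed = set()
    for line in ufw_status_text.splitlines():
        fields = line.split()
        # Rule rows look like "80/tcp  ALLOW  Anywhere"; IPv6 rows add "(v6)".
        if len(fields) >= 2 and "/" in fields[0] and "ALLOW" in fields[1:3]:
            allowed.add(fields[0])
    return allowed


# Illustrative sample of the table layout (assumption, not captured output).
SAMPLE = """\
Status: active

To                         Action      From
--                         ------      ----
22/tcp                     ALLOW       Anywhere
80/tcp                     ALLOW       Anywhere
443/tcp                    ALLOW       Anywhere
22/tcp (v6)                ALLOW       Anywhere (v6)
"""

missing = {"22/tcp", "80/tcp", "443/tcp"} - allowed_ports(SAMPLE)
print("Missing rules:", sorted(missing) or "none")
```

To use it against the live firewall, save the command output to a file and pass the file contents to `allowed_ports` in place of `SAMPLE`.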

Secure ComfyUI with Let's Encrypt SSL Certificates

SSL certificates encrypt network connections between a user's web browser and the server. Generate SSL certificates using a trusted certificate authority such as Let's Encrypt to secure network connections to the server using HTTPS. Follow the steps below to install the Certbot Let's Encrypt client and generate trusted SSL certificates to secure the ComfyUI interface.

Install the Certbot Let's Encrypt client tool using Snap.

console$ sudo snap install --classic certbot

Generate a new SSL certificate using your domain. Replace comfyui.example.com and user@example.com with your actual domain and active email address respectively.

console$ sudo certbot --nginx -d comfyui.example.com -m user@example.com --agree-tos

Test the Certbot automatic SSL certificate renewal process.

console$ sudo certbot renew --dry-run

Let's Encrypt certificates are valid for 90 days. If the above command is successful, Certbot automatically renews your SSL certificate before it expires.
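To see how long the current certificate remains valid, you can read its notAfter field over a TLS connection. The sketch below uses the standard library's `ssl` module; the function names are illustrative, and the demonstration line uses a fixed timestamp instead of contacting a server.

```python
import socket
import ssl
import time


def days_until_expiry(not_after, now=None):
    """Days remaining before a certificate 'notAfter' timestamp is reached."""
    expires = ssl.cert_time_to_seconds(not_after)
    now = time.time() if now is None else now
    return (expires - now) / 86400


def certificate_not_after(hostname, port=443, timeout=5.0):
    """Fetch the server certificate over TLS and return its 'notAfter' field."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


# Fixed timestamps so the arithmetic is easy to follow: 30 days before expiry.
print(days_until_expiry("Jan  5 09:34:43 2018 GMT", now=1515144883 - 30 * 86400))
```

On a live deployment, `days_until_expiry(certificate_not_after("comfyui.example.com"))` returns the remaining lifetime of the certificate served for your own domain.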

Generate Images using ComfyUI

ComfyUI is actively running as a system service on your server and accessible using the comfyui.example.com domain defined in your Nginx configuration. Follow the steps below to set up the default nodes and generate images using ComfyUI.

Visit your domain using a web browser such as Chrome to access the ComfyUI interface.

https://comfyui.example.com

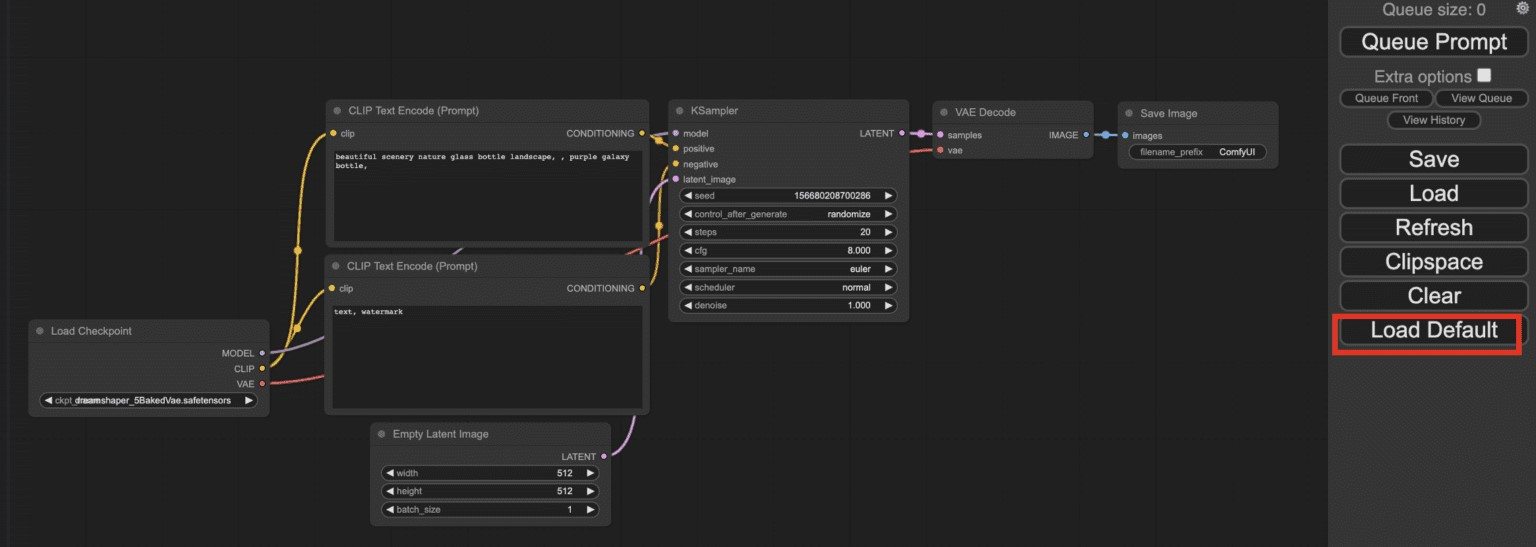

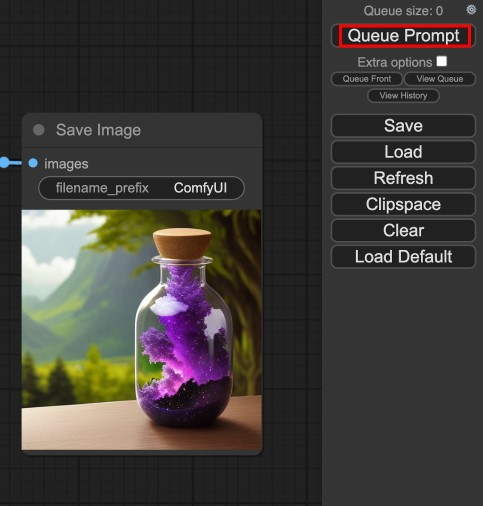

ComfyUI uses the text-to-image workflow by default. Click Load Default in the floating menu to switch to the default workflow in case of unintended changes.

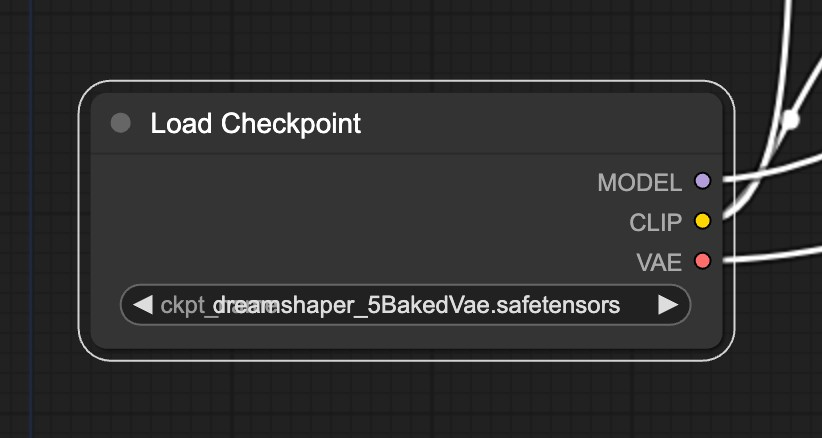

Click the Load Checkpoint node dropdown to select a diffusion model. For example, select the Stable Diffusion XL model.

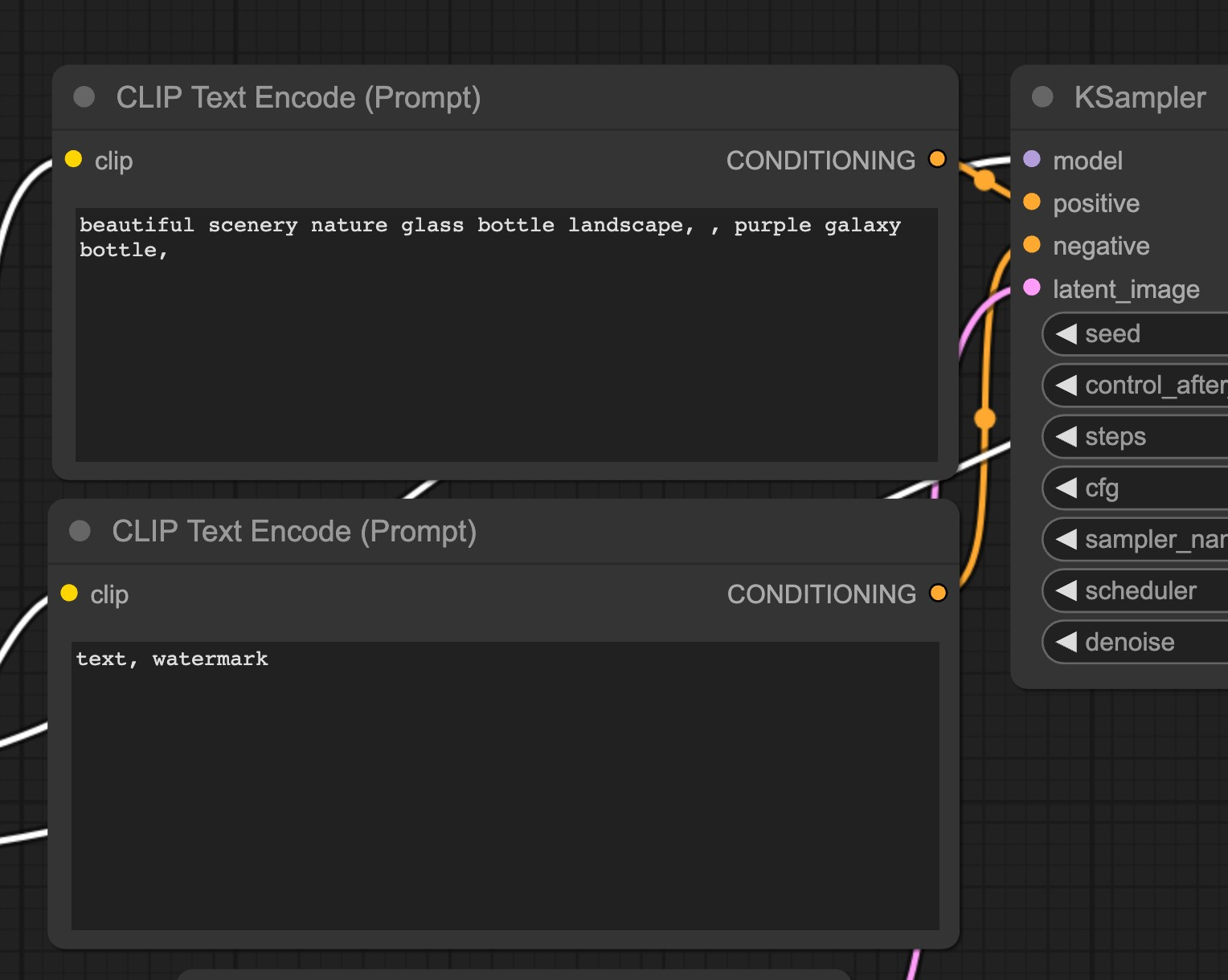

Navigate to the Prompt node, and enter a positive prompt such as beautiful scenery nature glass bottle landscape, purple galaxy bottle. Enter a negative prompt in the respective node, such as text, watermark.

Click Queue Prompt on the main floating menu to generate a new image.

Wait for the image generation process to complete and view your generated image in the Save Image node.
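The same text-to-image workflow can also be queued without the browser by posting a workflow in ComfyUI's API (JSON) format to the /prompt endpoint. The sketch below follows the general shape of the API example script bundled with the project as far as I understand it; the node IDs, sampler settings, image size, and checkpoint file name are assumptions that you should adapt to your own workflow.

```python
import json
import urllib.request


def build_workflow(checkpoint, positive, negative, seed=0, width=1024, height=1024):
    """Build a text-to-image graph in ComfyUI's API (JSON) workflow format."""
    return {
        "4": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": checkpoint}},
        "5": {"class_type": "EmptyLatentImage",
              "inputs": {"width": width, "height": height, "batch_size": 1}},
        "6": {"class_type": "CLIPTextEncode",
              "inputs": {"text": positive, "clip": ["4", 1]}},
        "7": {"class_type": "CLIPTextEncode",
              "inputs": {"text": negative, "clip": ["4", 1]}},
        "3": {"class_type": "KSampler",
              "inputs": {"model": ["4", 0], "positive": ["6", 0],
                         "negative": ["7", 0], "latent_image": ["5", 0],
                         "seed": seed, "steps": 20, "cfg": 8.0,
                         "sampler_name": "euler", "scheduler": "normal",
                         "denoise": 1.0}},
        "8": {"class_type": "VAEDecode",
              "inputs": {"samples": ["3", 0], "vae": ["4", 2]}},
        "9": {"class_type": "SaveImage",
              "inputs": {"images": ["8", 0], "filename_prefix": "ComfyUI"}},
    }


def queue_prompt(workflow, base_url="http://127.0.0.1:8188"):
    """POST the workflow to the /prompt endpoint and return the JSON reply."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/prompt", data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


workflow = build_workflow(
    "sd_xl_base_1.0.safetensors",  # assumed name; match the Load Checkpoint list
    "beautiful scenery nature glass bottle landscape, purple galaxy bottle",
    "text, watermark",
)
print(json.dumps(workflow["3"]["inputs"], indent=2))
```

Each input written as a two-element list, such as ["4", 1], links to an output slot of another node, which mirrors the wires you see in the web interface. Calling `queue_prompt(workflow)` on the server submits the job to the local ComfyUI instance.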

Enable ComfyUI Manager

ComfyUI Manager is an add-on tool that provides a user-friendly interface to install and manage custom nodes and models. Follow the steps below to install and enable the ComfyUI Manager tool.

Switch to the ComfyUI project directory.

console$ cd /opt/ComfyUI

Stop the ComfyUI system service.

console$ sudo systemctl stop comfyui

Switch to the custom_nodes directory.

console$ cd custom_nodes

Clone the ComfyUI manager repository using Git.

console$ git clone https://github.com/ltdrdata/ComfyUI-Manager.git

Start the ComfyUI system service.

console$ sudo systemctl start comfyui

Access the ComfyUI interface in your web browser.

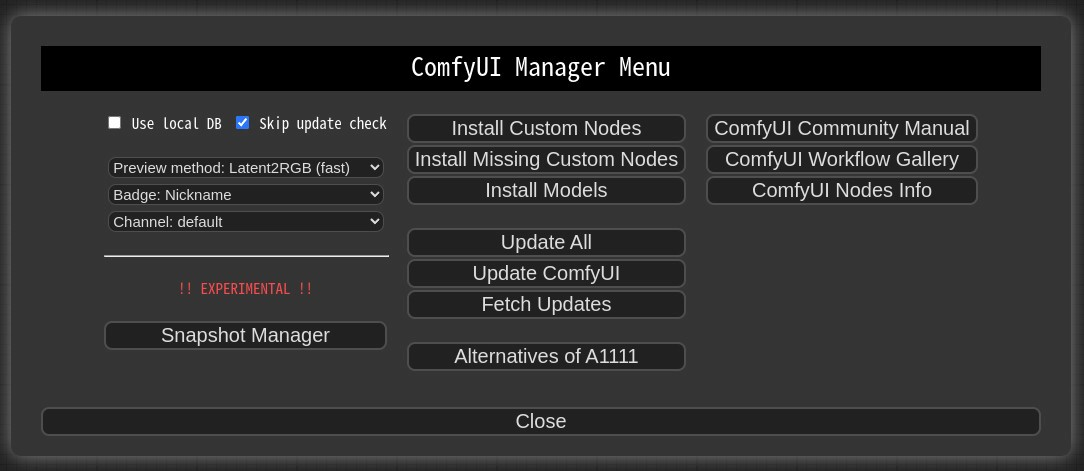

https://comfyui.example.comVerify and click the new

Manageroption on the floating menu.

Verify that the ComfyUI manager interface loads correctly.

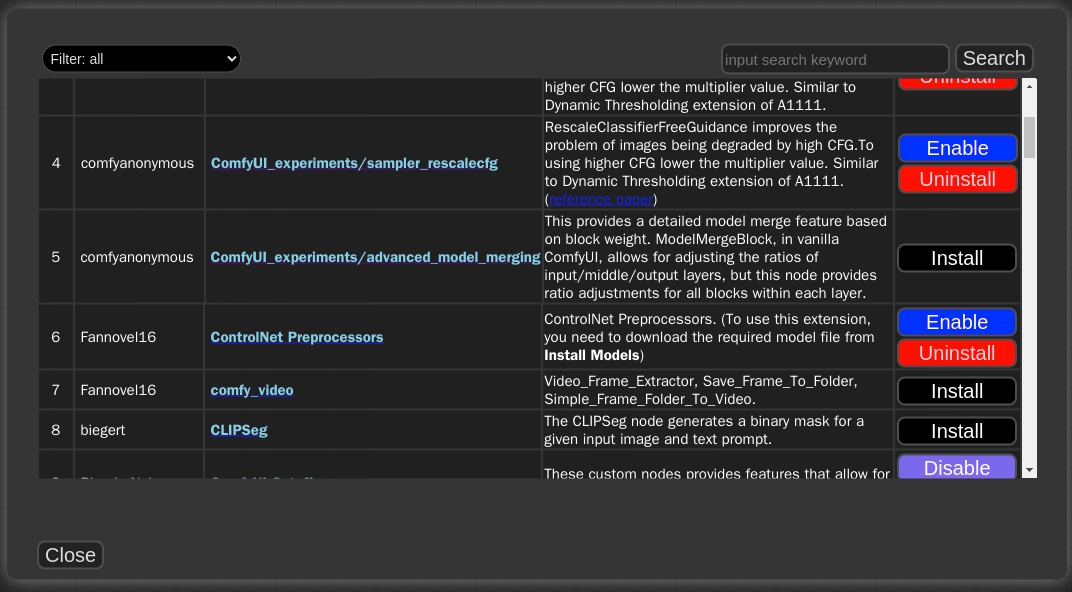

Click Install Custom Nodes or Install Models to install additional nodes and models to enable in ComfyUI.
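If the Manager option does not appear, it is worth confirming on the server that the repository was cloned into the custom_nodes directory. This is a minimal sketch that lists the subdirectories found there; it assumes the project lives in /opt/ComfyUI, and `installed_custom_nodes` is an illustrative helper name.

```python
from pathlib import Path


def installed_custom_nodes(custom_nodes_dir):
    """Return the names of subdirectories that look like custom node packages."""
    root = Path(custom_nodes_dir)
    if not root.is_dir():
        return []
    return sorted(
        entry.name for entry in root.iterdir()
        if entry.is_dir() and not entry.name.startswith((".", "__"))
    )


nodes = installed_custom_nodes("/opt/ComfyUI/custom_nodes")
print("ComfyUI-Manager installed:", "ComfyUI-Manager" in nodes)
```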

Common ComfyUI Nodes

ComfyUI nodes are modular units that form a workflow and perform specific image generation functionalities. Each ComfyUI node processes input data and passes the output to the next node for further processing in a workflow. This modular approach enables you to create diverse image generation workflows and test multiple configurations to generate high-quality results. The following are the most common node types you can use in your ComfyUI workflows.

- Input nodes: Provide data to other nodes, such as images, text prompts, and random numbers.

- Processing nodes: Manipulate the data provided by input nodes, such as resizing images, applying filters, and generating prompts.

- Output nodes: Save results from the image generation process, such as saving images to disk or displaying them in a preview window.

- Load Checkpoint: Loads a pre-trained diffusion model in ComfyUI.

- CLIP Encode: Encodes text prompts into CLIP embeddings.

- Prompt Node: Provides text prompts to the model.

- Negative Prompt Node: Provides negative prompts to the model to guide the image generation process.

- VAE Encode: Converts images to latent representations.

- VAE Decode: Converts latent representations to images.

- Random Seed Node: Generates random seeds for image generation samplers.

- Empty Latent Image Node: Creates a blank latent image of a specified size for use in other image generation nodes.

- Scale Node: Scales images to a desired size.

- Save Image Node: Saves generated images to disk.

- Preview Node: Displays generated images in a preview window.
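The way data flows from one node to the next can be illustrated with a toy graph evaluator. This is a simplified sketch and not ComfyUI's actual executor: the node types Prompt, Encode, and Save are stand-ins that only manipulate strings and lists, and `run_graph` is a hypothetical function written for this illustration.

```python
def run_graph(nodes, functions):
    """Evaluate a toy node graph; inputs of the form [node_id, index] are links."""
    cache = {}

    def evaluate(node_id):
        if node_id not in cache:
            node = nodes[node_id]
            inputs = {}
            for key, value in node["inputs"].items():
                if isinstance(value, list):  # link to another node's output slot
                    source_id, output_index = value
                    inputs[key] = evaluate(source_id)[output_index]
                else:  # literal value entered on the node itself
                    inputs[key] = value
            cache[node_id] = functions[node["class_type"]](**inputs)
        return cache[node_id]

    return {node_id: evaluate(node_id) for node_id in nodes}


# Stand-in functions: each returns a tuple of outputs, one per output slot.
functions = {
    "Prompt": lambda text: (text,),
    "Encode": lambda text: ([len(word) for word in text.split()],),
    "Save": lambda data: (f"saved {len(data)} values",),
}
nodes = {
    "1": {"class_type": "Prompt", "inputs": {"text": "purple galaxy bottle"}},
    "2": {"class_type": "Encode", "inputs": {"text": ["1", 0]}},
    "3": {"class_type": "Save", "inputs": {"data": ["2", 0]}},
}
print(run_graph(nodes, functions)["3"][0])
```

Each node runs once, its result is cached, and downstream nodes pull the outputs they are wired to. That caching is the rough idea behind re-executing a workflow after changing only part of it.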

Conclusion

You have deployed ComfyUI on a Vultr Cloud GPU server and generated images using the ComfyUI web interface. In addition, you installed the ComfyUI Manager to enable new nodes and models. For more information and configuration options, please visit the ComfyUI community documentation.