Introduction

Stable Code 3B by Stability AI is a 3 billion parameter model that can run on devices with limited hardware. Its performance is comparable to that of models twice its size. It also supports fill-in-the-middle (FIM) completion and is trained on 18 programming languages.

StableCode Instruct Alpha 3B and StableCode Completion Alpha 3B by Stability AI are two more coding LLMs that can run inference workloads on edge devices. The former generates code from the instructions you provide, and the latter completes code. All three models are released under a non-commercial license, which means you cannot use them for any purpose other than research without enrolling in a Stability AI membership.

In this article, you perform inference workloads on the three models mentioned above and compare their generation speed in tokens per second and their VRAM consumption.

Prerequisites

- Deploy a fresh Ubuntu 22.04 A100 Vultr Cloud GPU server with at least 80 GB of GPU RAM.

- Securely access the server using SSH as a non-root sudo user.

- Update the server.

- Install JupyterLab and PyTorch.

Stable Code 3B Inference

In this section, you perform an inference workload on the Stable Code 3B model by installing the required dependencies, initializing the model and tokenizer, providing a prompt to the model for code completion, and calculating the tokens per second generated by the model.

You can follow along with the demonstration by using the commands given in the Stable-code-3B Jupyter Notebook.

Install the dependency packages.

    $ pip install tiktoken transformers accelerate

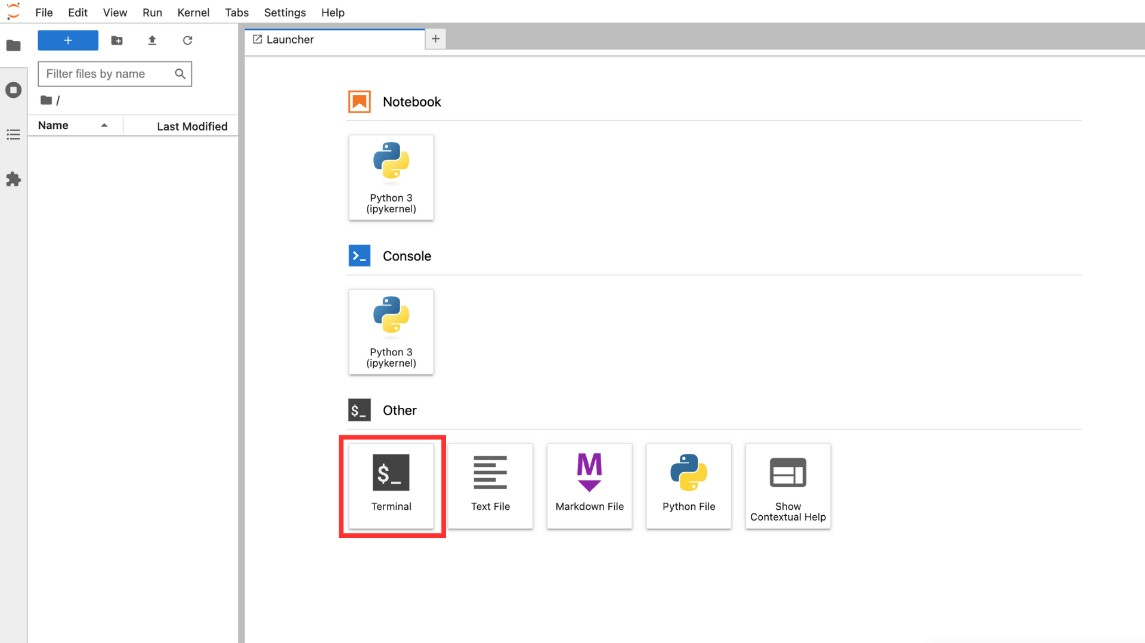

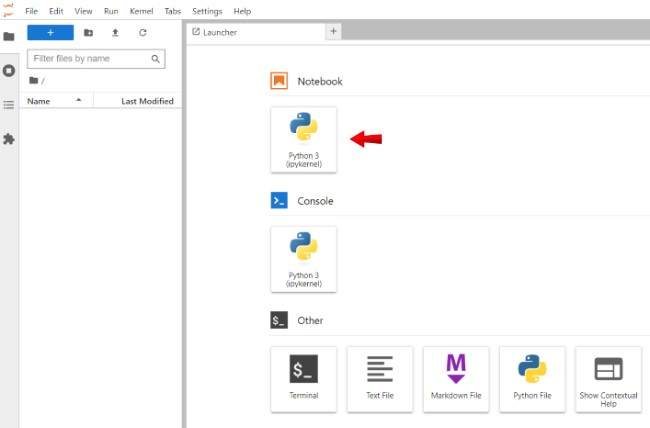

Open a terminal within the JupyterLab interface.

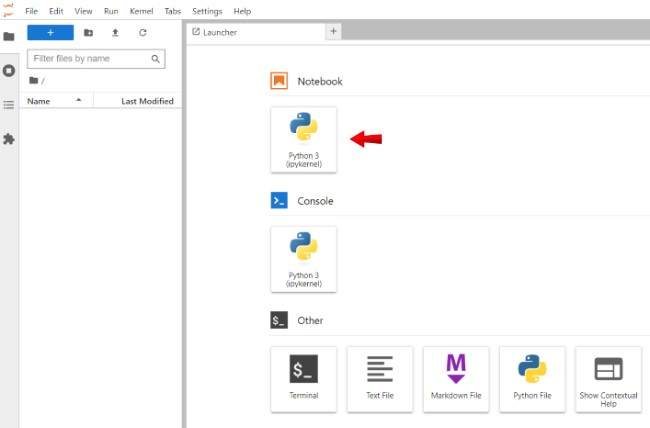

Open a new Notebook session, and set its name to `Stable-code-3b`.

Initialize the model and tokenizer.

    import time

    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Download the tokenizer and the model weights from HuggingFace Hub
    tokenizer = AutoTokenizer.from_pretrained("stabilityai/stable-code-3b", trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        "stabilityai/stable-code-3b",
        trust_remote_code=True,
        torch_dtype="auto",
    )

    # Move the model to the GPU
    model.cuda()

The above code block initializes the `stable-code-3b` model and its tokenizer using the HuggingFace Transformers library.

Provide an input prompt and tokenize it.

    input_text = "import torch\nimport torch.nn as nn"
    inputs = tokenizer(input_text, return_tensors="pt").to(model.device)

The model will try to complete the prompt provided above in the code block.
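Besides plain completion, the introduction mentions a fill-in-the-middle capability. A minimal sketch of how such a prompt could be assembled is shown below. The sentinel strings and the helper name `build_fim_prompt` are assumptions for illustration (the sentinels follow the format shown on the Stable Code 3B model card), so verify them against the tokenizer before relying on them.

```python
# Sentinel strings are an assumption based on the Stable Code 3B model card;
# check the tokenizer's special tokens before relying on them.
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Return a prompt asking the model to generate the code between prefix and suffix."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


fim_prompt = build_fim_prompt(
    prefix="def fib(n):",
    suffix="    else:\n        return fib(n - 2) + fib(n - 1)",
)
print(fim_prompt)
```

You could then pass `fim_prompt` to the tokenizer in place of `input_text`, and the model would be expected to generate the missing middle of the function.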

Define model parameters and initialize time calculation.

    # Record the time immediately before and after generation
    start_time = time.time()
    tokens = model.generate(
        **inputs,
        max_new_tokens=48,
        temperature=0.2,
        do_sample=True,
    )
    end_time = time.time()

The above code block records the time before and after generation so that you can calculate tokens per second. It also sets the generation parameters `max_new_tokens`, `temperature`, and `do_sample`, which you can modify to change the model response.
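To build intuition for the `temperature` parameter, the following sketch shows how dividing scores by a temperature before a softmax changes the sampling distribution. It is a standalone illustration in plain Python with hypothetical scores, not the code path the Transformers library uses internally.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, dividing by the temperature first."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


example_logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
sharp = softmax_with_temperature(example_logits, 0.2)
flat = softmax_with_temperature(example_logits, 1.0)
print([round(p, 3) for p in sharp])
print([round(p, 3) for p in flat])
```

A low temperature such as 0.2 concentrates most of the probability on the highest-scoring token, which tends to make code completions more deterministic.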

Print generated text.

    generated_text = tokenizer.decode(tokens[0], skip_special_tokens=True)
    print(generated_text)

Calculate and print tokens per second.

    # Count only the newly generated tokens; tokens[0] also contains the prompt tokens
    num_tokens_generated = len(tokens[0]) - inputs["input_ids"].shape[1]
    execution_time = end_time - start_time
    tokens_per_second = num_tokens_generated / execution_time
    print(f"Tokens per second: {tokens_per_second:.2f}")

The above code block calculates the number of tokens the model generates per second.

Stable Code 3B generates an average of 35 tokens per second.
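A single timed run can vary noticeably, so an average is more reliable when taken over several runs. The following is a minimal sketch of one way to do that, assuming a hypothetical `generate_fn` callable that performs one generation and returns the number of new tokens. The `fake_generate` stand-in only exists so the sketch runs without a GPU.

```python
import time
from statistics import mean


def average_tokens_per_second(generate_fn, runs=5, warmup=1):
    """Average throughput of generate_fn, which must return the number of new tokens."""
    for _ in range(warmup):
        generate_fn()  # warm-up runs are executed but not timed
    rates = []
    for _ in range(runs):
        start = time.perf_counter()
        new_tokens = generate_fn()
        elapsed = time.perf_counter() - start
        rates.append(new_tokens / elapsed)
    return mean(rates)


def fake_generate():
    """Stand-in for a model.generate() call so the sketch runs without a GPU."""
    time.sleep(0.01)
    return 48


print(f"Tokens per second: {average_tokens_per_second(fake_generate):.2f}")
```

In the notebook, `generate_fn` could wrap the `model.generate()` call and return `tokens.shape[1] - inputs["input_ids"].shape[1]`.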

Access HuggingFace Hub and Gated Repositories

StableCode Instruct Alpha 3B and StableCode Completion Alpha 3B are both gated models, so you need access to their repositories to perform inference workloads.

Visit the settings menu of your HuggingFace account.

Select Access tokens from the left-hand side of the menu.

Click New token and type in a name for the token.

Click Generate a token, and copy the token to your clipboard.

In your existing Jupyter notebook, install the required dependency.

    !pip install --upgrade huggingface-hub

Log in to gain access to the models.

    from huggingface_hub import login

    login(token="YOUR_HF_TOKEN")

Make sure to replace `YOUR_HF_TOKEN` with the actual token value from your clipboard. Otherwise, the following steps fail with authentication errors.
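If you prefer not to paste the token into the notebook source, one option is to read it from an environment variable. The sketch below assumes the token is exported as `HF_TOKEN` before JupyterLab starts. The helper names `get_hf_token` and `login_from_env` are hypothetical and exist only for illustration.

```python
import os


def get_hf_token(env=None, name="HF_TOKEN"):
    """Read the access token from an environment variable instead of the notebook source."""
    env = os.environ if env is None else env
    token = env.get(name, "").strip()
    if not token:
        raise RuntimeError(f"Set the {name} environment variable before logging in.")
    return token


def login_from_env():
    """Log in to HuggingFace Hub with the token found in the environment."""
    from huggingface_hub import login  # imported lazily; requires huggingface-hub

    login(token=get_hf_token())
```

Calling `login_from_env()` in a notebook cell would then replace the `login(token="YOUR_HF_TOKEN")` call, and a missing variable raises a clear error before any download is attempted.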

StableCode Instruct Alpha 3B Inference

In this section, you perform an inference workload on the StableCode Instruct Alpha 3B model by initializing the model and tokenizer, providing an instruction prompt so that the model generates the requested code, and calculating the tokens per second generated by the model.

You can follow along with the demonstration by using the commands given in the Stablecode-instruct-alpha-3B Jupyter Notebook.

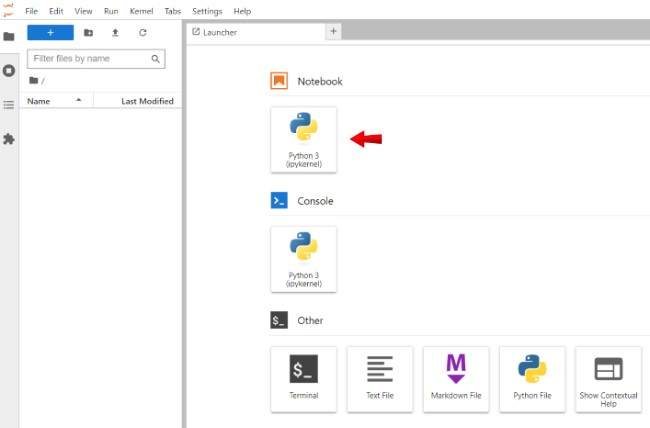

Open a new Notebook and set its name to `Stablecode-instruct-alpha-3b`.

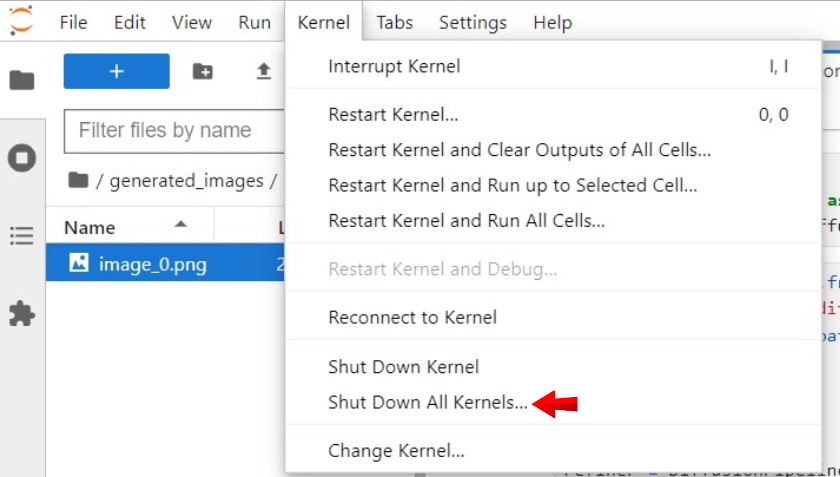

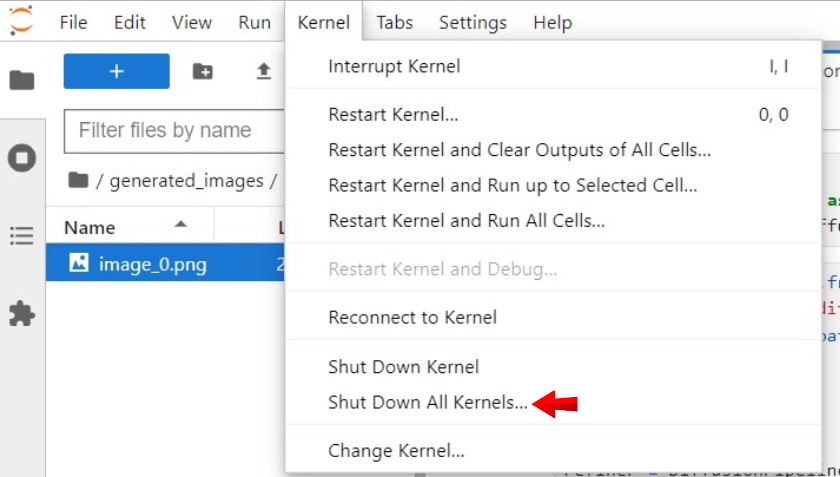

Navigate to the Kernel menu option in your Jupyter Notebook, and click Shut Down All Kernels to clear GPU memory.
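To confirm that shutting down the kernels released the GPU memory, you could query `nvidia-smi` from a notebook cell. The following is a minimal sketch that assumes `nvidia-smi` is available on the server's PATH. The function names are hypothetical.

```python
import subprocess


def parse_memory_used(csv_output: str) -> list:
    """Parse one 'memory.used' value in MiB per GPU from nvidia-smi CSV output."""
    return [int(line.strip()) for line in csv_output.splitlines() if line.strip()]


def gpu_memory_used_mib() -> list:
    """Ask nvidia-smi for the memory currently in use on each GPU."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.used", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_memory_used(result.stdout)
```

Calling `gpu_memory_used_mib()` after the kernels shut down should return values close to the idle baseline of the GPU. Calling it again after loading a model gives a rough view of that model's VRAM consumption.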

Initialize the model and tokenizer.

    import time

    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Download the tokenizer and the model weights from the gated repository
    tokenizer = AutoTokenizer.from_pretrained("stabilityai/stablecode-instruct-alpha-3b")
    model = AutoModelForCausalLM.from_pretrained(
        "stabilityai/stablecode-instruct-alpha-3b",
        trust_remote_code=True,
        torch_dtype="auto",
    )

    # Move the model to the GPU
    model.cuda()

The above code block initializes the `stablecode-instruct-alpha-3b` model along with its tokenizer.

Provide an instruction prompt and tokenize it.

    instruction = "###Instruction\nGenerate a python function to find number of CPU cores###Response\n"
    inputs = tokenizer(instruction, return_tensors="pt", return_token_type_ids=False).to("cuda")

The model will try to generate an output according to the instructions provided.
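The prompt above wraps the request between `###Instruction` and `###Response` markers. If you want to try several instructions, a small helper can keep that format consistent. This is a sketch that simply mirrors the string used in this article, and the function name is hypothetical.

```python
def build_instruct_prompt(instruction: str) -> str:
    """Wrap an instruction in the ###Instruction / ###Response markers used in this article."""
    return f"###Instruction\n{instruction}###Response\n"


instruction = build_instruct_prompt(
    "Generate a python function to find number of CPU cores"
)
print(repr(instruction))
```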

Define model parameters and initialize time calculation.

    # Record the time immediately before and after generation
    start_time = time.time()
    tokens = model.generate(
        **inputs,
        max_new_tokens=48,
        temperature=0.2,
        do_sample=True,
    )
    end_time = time.time()

Print generated response.

    generated_code = tokenizer.decode(tokens[0], skip_special_tokens=True)
    print(generated_code)
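The decoded text contains the prompt followed by the model's answer. If you only want the answer, one option is to split on the response marker. The sketch below assumes the decoded text still contains the `###Response` marker. The function name and the sample string are hypothetical.

```python
def extract_response(generated_text: str, marker: str = "###Response") -> str:
    """Return the text after the last response marker, or the whole text if it is absent."""
    _, found, tail = generated_text.rpartition(marker)
    return tail.strip() if found else generated_text.strip()


sample = "###Instruction\nSay hi###Response\nprint('hi')"  # hypothetical decoded output
print(extract_response(sample))
```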

Calculate and print tokens per second.

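    # Count only the newly generated tokens; tokens[0] also contains the prompt tokens
    num_tokens_generated = len(tokens[0]) - inputs["input_ids"].shape[1]
    execution_time = end_time - start_time
    tokens_per_second = num_tokens_generated / execution_time
    print(f"Tokens per second: {tokens_per_second:.2f}")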

StableCode Instruct Alpha 3B generates an average of 30 tokens per second.

StableCode Completion Alpha 3B Inference

In this section, you perform an inference workload on the StableCode Completion Alpha 3B model by initializing the model and tokenizer, providing a prompt to the model for code completion, and calculating the tokens per second generated by the model.

You can follow along with the demonstration by using the commands given in the Stablecode-completion-alpha-3B Jupyter Notebook.

Open a new Notebook and set its name to `Stablecode-completion-alpha-3b`.

Navigate to the Kernel menu option in your Jupyter Notebook, and click Shut Down All Kernels to clear GPU memory.

Initialize the model and tokenizer.

    import time

    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Download the tokenizer and the model weights from the gated repository
    tokenizer = AutoTokenizer.from_pretrained("stabilityai/stablecode-completion-alpha-3b")
    model = AutoModelForCausalLM.from_pretrained(
        "stabilityai/stablecode-completion-alpha-3b",
        trust_remote_code=True,
        torch_dtype="auto",
    )

    # Move the model to the GPU
    model.cuda()

Provide a prompt and tokenize it.

    inputs = tokenizer(
        "import torch\nimport torch.nn as nn",
        return_tensors="pt",
        return_token_type_ids=False,
    ).to("cuda")

Define the generation parameters and record the generation time.

    # Record the time immediately before and after generation
    start_time = time.time()
    tokens = model.generate(
        **inputs,
        max_new_tokens=48,
        temperature=0.2,
        do_sample=True,
    )
    end_time = time.time()

Print the generated text and calculate tokens per second.

    # Count only the newly generated tokens; the output also contains the prompt tokens
    num_tokens = tokens.size(1) - inputs["input_ids"].size(1)
    elapsed_time = end_time - start_time
    tokens_per_second = num_tokens / elapsed_time
    print("Generated text:", tokenizer.decode(tokens[0], skip_special_tokens=True))
    print(f"Tokens per second: {tokens_per_second:.2f}")

StableCode Completion Alpha 3B generates an average of 25 tokens per second.

GPU Usage and VRAM Requirements

The VRAM consumption of the three models demonstrated in this article is as follows:

- Stable Code 3B: 6.4 GB

- StableCode Instruct Alpha 3B: 14.7 GB

- StableCode Completion Alpha 3B: 6.4 GB
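As a rough sanity check on these figures, you can estimate the memory that the weights alone occupy from the parameter count and the numeric precision. The sketch below uses the nominal 3 billion parameter figure. The precision in which each checkpoint is actually loaded by `torch_dtype="auto"` is not stated in this article, so treat the two cases as assumptions.

```python
def estimate_weight_memory_gb(num_params: int, bytes_per_param: int) -> float:
    """Estimate the memory needed for the model weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


PARAMS = 3_000_000_000  # nominal "3B" figure; exact parameter counts differ slightly

for label, width in [("16-bit (float16/bfloat16)", 2), ("32-bit (float32)", 4)]:
    print(f"{label}: {estimate_weight_memory_gb(PARAMS, width):.1f} GB")
```

Measured VRAM is normally higher than a weights-only estimate because of activations, the key-value cache, and CUDA runtime overhead. A value near 6.4 GB would be consistent with 16-bit weights and a value near 14.7 GB with 32-bit weights, but this article does not confirm the precision of each model.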

Conclusions

In this article, you performed inference workloads on Stable Code 3B, StableCode Instruct Alpha 3B, and StableCode Completion Alpha 3B, and compared their generation speed in tokens per second and their VRAM consumption.