Introduction

StableLM 2 1.6B is a text-completion small language model by StabilityAI with 1.6 billion parameters by StabilityAI, the model is trained on multilingual data. Its compact size makes it feasible to infer the model with limited hardware resources. Similarly, StableLM 2 Zephyr 1.6B, a model by StabilityAI with 1.6 billion parameters is trained mixed public datasets, the Zephyr in the name is included because it is a fine-tuned model that accepts inputs including roles like system, user, and assistant, and has a custom tokenizer. Both the models are under non commercial license that means you cannot use these models for any other purposes than research without enrolling for StabilityAI membership.

In this article, you are to perform inference workload on the StableLM 2 1.6B and StableLM 2 Zephyr 1.6B models using the Vultr Cloud GPU and compare the results of tokens per second produced by each model and VRAM consumption by both models.

Prerequisites

Before you begin:

- Deploy a fresh Ubuntu 22.04 A100 Vultr Cloud GPU server with at least

80 GBGPU RAM. - Securely access the server using SSH as a non-root sudo user.

- Update the server.

- Install JupyterLab and PyTorch.

StableLM 2 1.6B Inference

In this section, you are to install all the dependency packages, initialize the StableLM 2 1.6B model and tokenizer, provide input, and calculate the time taken by the model to generate tokens per second.

You can follow along with the demonstration by using the commands given in the Stablelm-2-1_6b Jupyter Notebook.

Install the dependency packages.

console$ pip install tiktoken transformers accelerate

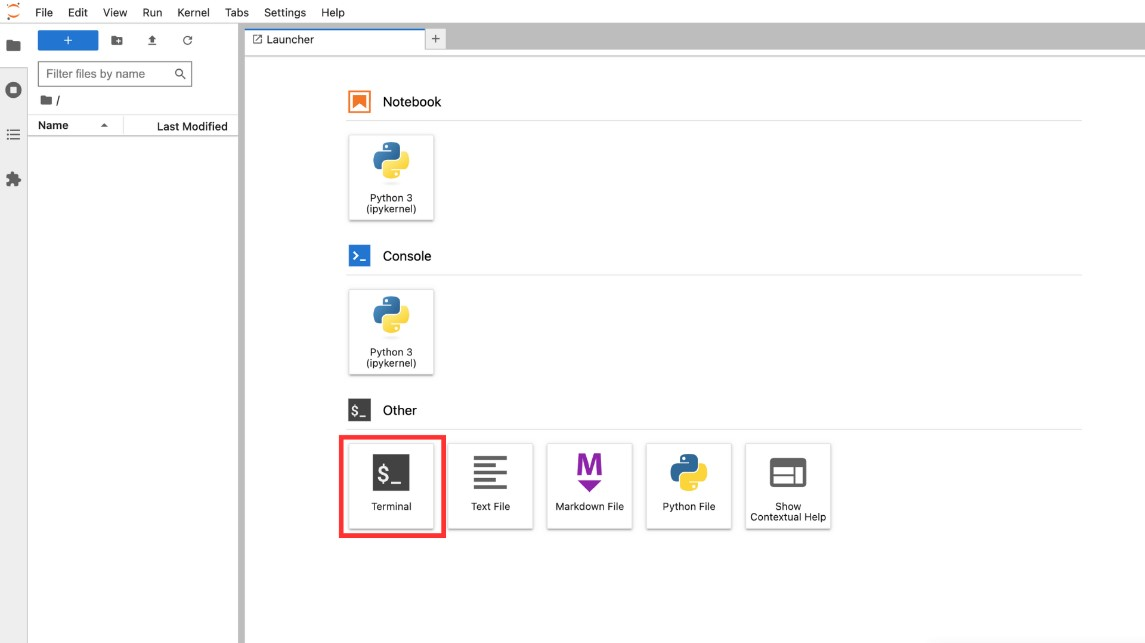

Open a terminal within the Jupyter lab interface.

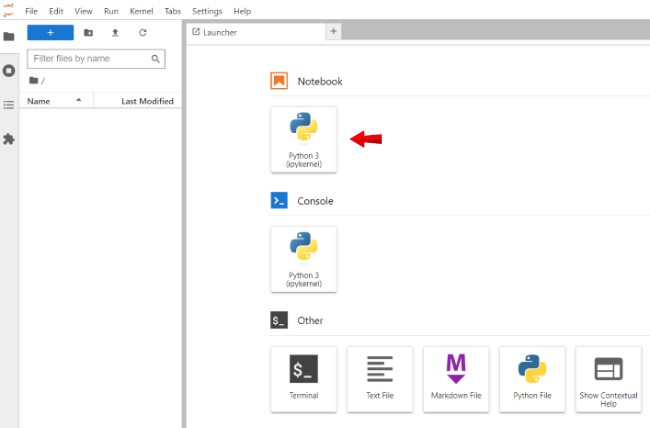

Open a new Notebook session, and set its name to

Stablelm-2-1_6b.

Declare the model and tokenizer.

pythonimport time from transformers import AutoModelForCausalLM, AutoTokenizer tokenizer = AutoTokenizer.from_pretrained("stabilityai/stablelm-2-1_6b", trust_remote_code=True) model = AutoModelForCausalLM.from_pretrained( "stabilityai/stablelm-2-1_6b", trust_remote_code=True, torch_dtype="auto", ) model.cuda()

In the above code blocks, you are initializing the

stablelm-2-1_6b modeland its tokenizer using Hugginface pipeline.Provide an input text and tokenize the input.

pythoninput_text = "Cloud computing has revolutionized tech" inputs = tokenizer(input_text, return_tensors="pt").to(model.device)

Define model parameters.

pythonstart_time = time.time() tokens = model.generate( **inputs, max_new_tokens=64, temperature=0.70, top_p=0.95, do_sample=True, ) end_time = time.time()

In the above code blocks, you are declaring all the model parameters to modify the response generation and declaring the time functions to calculate the tokens per second.

Calculate time taken.

pythontime_taken = end_time - start_time num_tokens_generated = tokens.shape[1] tokens_per_second = num_tokens_generated / time_taken

Print the output and time taken.

pythondecoded_text = tokenizer.decode(tokens[0], skip_special_tokens=True) print(f"Generated Text: {decoded_text}") print(f"Tokens Per Second: {tokens_per_second}")

The above code blocks printing the response and time taken by the model to generate tokens per second using the time calculation script declared in the previous commands.

The StableLM2 1.6B model produces an average of 30 tokens per second.

StableLM 2 Zephyr 1.6B Inference

In this section, you are to initialize the StableLM 2 Zephyr 1.6B model and tokenizer, provide input with a prompt template in a chatbot format, and calculate the time taken by the model to generate tokens per second.

You can follow along with the demonstration by using the commands given in the Stablelm-2-zephyr-1_6b Jupyter Notebook.

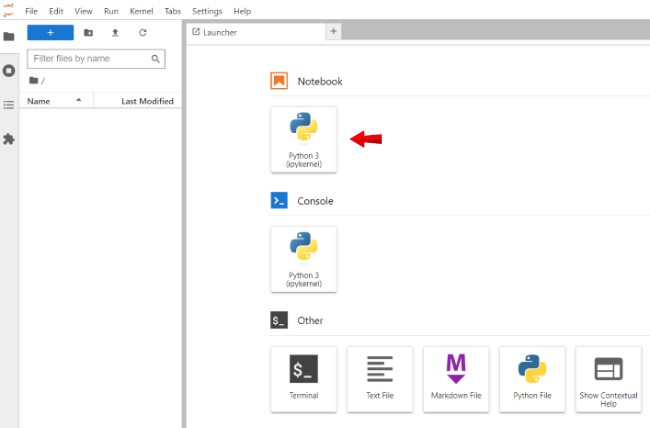

Open a new Notebook and set its name to

Stablelm-2-zephyr-1_6b.

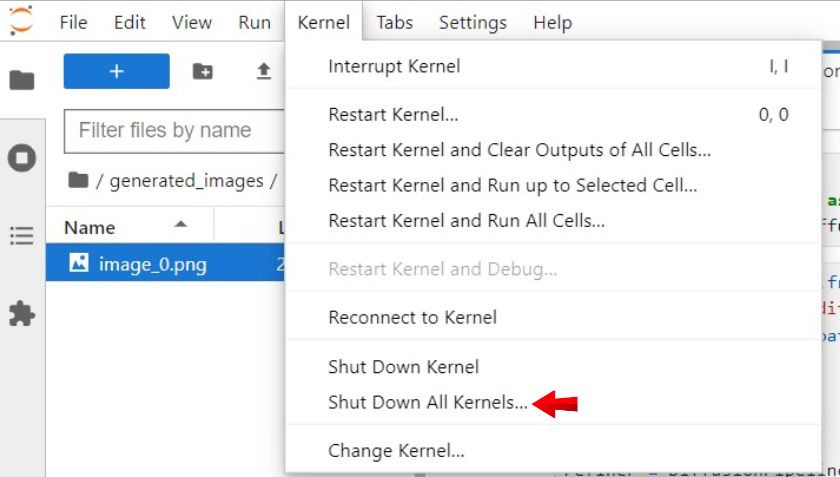

Navigate to the Kernel menu option in your Jupyter Notebook, and click Shutdown Down All Kernels to clear GPU memory.

Declare the model and tokenizer.

pythonfrom transformers import AutoModelForCausalLM, AutoTokenizer tokenizer = AutoTokenizer.from_pretrained('stabilityai/stablelm-2-zephyr-1_6b', trust_remote_code=True) model = AutoModelForCausalLM.from_pretrained( 'stabilityai/stablelm-2-zephyr-1_6b', trust_remote_code=True, device_map="auto" )

In the above code blocks, you are initializing the

stablelm-2-zephyr-1_6bmodel and its tokenizer using the Huggingface pipeline.Provide an input text and tokenize the input.

pythonimport time prompt = [{'role': 'user', 'content': 'Which famous math number begins with 1.6 ...?'}] inputs = tokenizer.apply_chat_template( prompt, add_generation_prompt=True, return_tensors='pt' )

In the above code blocks, you are providing a prompt to the chatbot in role role-defined format specifically the finetuned Zephyr flavor of the model.

Define model parameters.

pythonstart_time = time.time() tokens = model.generate( inputs.to(model.device), max_new_tokens=1024, temperature=0.5, do_sample=True ) end_time = time.time()

In the above code blocks, you are declaring all the model parameters to modify the response generation and declaring the time functions to calculate the tokens per second.

Print generated text and calculate tokens per second.

pythongenerated_text = tokenizer.decode(tokens[0], skip_special_tokens=False) print(generated_text) num_tokens_generated = len(tokens[0]) execution_time = end_time - start_time tokens_per_second = num_tokens_generated / execution_time print(f"Tokens per second: {tokens_per_second:.2f}")

In the above code blocks, you are printing the generated response, calculating and printing tokens per second generated by the model.

The tokens per second generated by this model are an average of 20 to 30 tokens per second.

GPU Usage and VRAM Requirements

Following are the VRAM consumptions of both the models demonstrated in this article:

- StableLM2 1.6B: 4.04 GB

- StableLM2 Zephyr 1.6B: 8.4GB

Conclusion

It's important to remember that both the models are small in size, the models can hallucinate easily resulting in undesired responses. The purpose of these models is to use them on systems with fewer hardware resources and edge devices.

In this article, you inferred the StableLM2 1.6B and StableLM2 Zephyr 1.6B models by preparing the environment and using HuggingFace pipelines. You compared both models based on their ability to generate tokens per second and their VRAM consumption.