Introduction

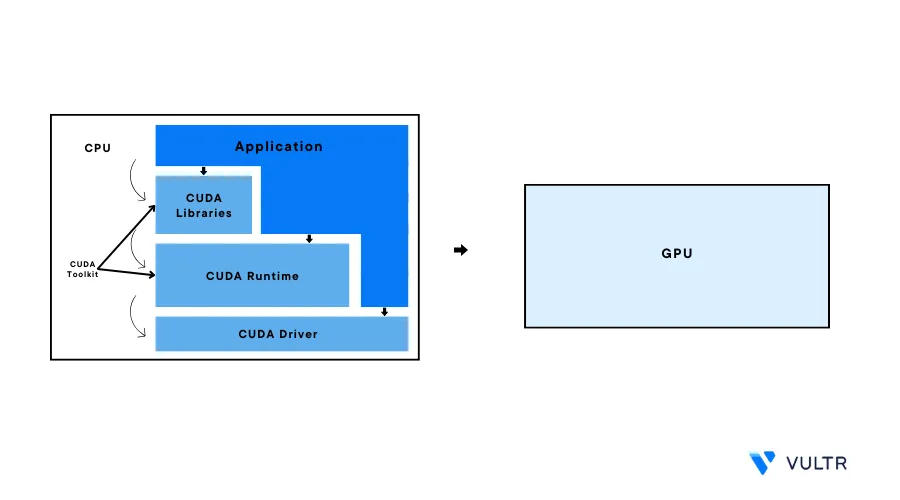

Compute Unified Device Architecture (CUDA) is a parallel computing platform and programming model developed by NVIDIA that uses Graphics Processing Units (GPUs) for general-purpose computing tasks. CUDA consists of three core elements: the CUDA driver, the CUDA Toolkit, and the CUDA libraries. Together, they let users take full advantage of NVIDIA GPUs and accelerate workloads that would be impractical or too slow to run on CPUs alone.

This article explains the roles of the CUDA driver, toolkit, and libraries, the benefits they bring when working with NVIDIA GPUs, how the three core elements differ, and how to match toolkit and driver versions.

Understanding the NVIDIA CUDA Driver

The CUDA driver is the software layer between applications running on the CPU (the host) and the GPU (the device). It passes commands and data from the host to the GPU, handles memory transfers between the two, and coordinates the execution and synchronization of parallel work.

CUDA Driver Responsibilities

- Synchronization: To avoid conflicts between the CPU and GPU, the driver lets the CPU wait for GPU work to finish and orders operations between the two units, so tasks execute in the intended sequence and shared resources stay coherent

- Kernel Execution: The driver loads and launches GPU kernels, the functions that run in parallel on the GPU. Each launch distributes the work across many GPU threads, which is how an application takes advantage of the GPU's parallel architecture

- Memory Management: The driver allocates and frees GPU memory and manages data transfers between CPU and GPU memory, so data is available on the device when compute-intensive tasks need it
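The kernel-execution model described above can be sketched in plain C++. This is a hypothetical CPU emulation, not CUDA code: `Dim`, `emulate_launch`, and `vector_add` are illustrative names standing in for the `kernel<<<grid, block>>>(...)` launch syntax and CUDA's built-in `blockIdx`, `threadIdx`, and `blockDim` variables. It shows how each thread derives one global index from its block and thread position, and why a bounds check is needed when the launch creates more threads than there are data elements.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Host-side stand-in for CUDA's dim3 launch dimensions (x dimension only).
struct Dim { unsigned x; };

// Hypothetical CPU emulation of kernel<<<grid, block>>>(...): calls the
// "kernel" once per (block, thread) pair. On a real GPU these invocations
// run in parallel; here they run one after another.
void emulate_launch(Dim grid, Dim block,
                    const std::function<void(Dim blockIdx, Dim threadIdx, Dim blockDim)>& kernel) {
    assert(block.x > 0);
    for (unsigned b = 0; b < grid.x; ++b)
        for (unsigned t = 0; t < block.x; ++t)
            kernel(Dim{b}, Dim{t}, block);
}

// Vector addition written the way a CUDA kernel body usually is:
// each thread computes one global index and handles one element.
std::vector<float> vector_add(const std::vector<float>& a, const std::vector<float>& b) {
    const std::size_t n = a.size();
    assert(b.size() == n);
    std::vector<float> c(n);
    const unsigned threads_per_block = 256;
    // Round up so that blocks * threads_per_block >= n.
    const unsigned blocks =
        static_cast<unsigned>((n + threads_per_block - 1) / threads_per_block);
    emulate_launch(Dim{blocks}, Dim{threads_per_block},
        [&](Dim blockIdx, Dim threadIdx, Dim blockDim) {
            std::size_t i = static_cast<std::size_t>(blockIdx.x) * blockDim.x + threadIdx.x;
            if (i < n)  // guard: the last block may have more threads than elements
                c[i] = a[i] + b[i];
        });
    return c;
}
```

For 1,000 elements, this launches 4 blocks of 256 threads (1,024 threads), and the `if (i < n)` guard makes the 24 surplus threads do nothing. On a GPU, the same body would live in a `__global__` function, and the blocks would run concurrently instead of in a loop.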

Understanding the CUDA Toolkit

The CUDA Toolkit provides a comprehensive suite of development tools, libraries, and utilities for NVIDIA GPUs. It abstracts hardware complexities and helps developers build high-performance, GPU-accelerated applications.

Components of the CUDA Toolkit

- CUDA Compiler: The NVIDIA CUDA Compiler (NVCC) compiles C/C++ code that uses CUDA's GPU-specific extensions. It separates device code from host code, compiles the device code into code the GPU can execute, and passes the host code to a standard C/C++ compiler

- Runtime API: A higher-level collection of functions that streamlines device management, memory handling, and kernel launches, making GPU parallelism accessible to users

- Driver API: The lower-level counterpart to the Runtime API. It gives finer control over hardware management and task execution, including memory allocation and kernel launches, at the cost of more verbose code

- NVIDIA Nsight Tools: A family of debugging and profiling tools for GPU development that help users find performance bottlenecks and optimize memory usage

Understanding CUDA Libraries

CUDA Libraries are collections of pre-built, GPU-optimized functions. Programming GPUs directly involves many complicated, low-level details, and these libraries let users skip those details and focus on their application logic. By abstracting away these intricacies, they speed up development and innovation, which has contributed to their wide adoption.

Essential CUDA Libraries

Below are commonly used CUDA libraries for a variety of computations:

- cuBLAS: The CUDA Basic Linear Algebra Subprograms library accelerates linear algebra operations such as vector operations and matrix-vector and matrix-matrix multiplication, delivering high performance for scientific and engineering computations.

- cuDNN: The CUDA Deep Neural Network library provides optimized primitives for neural network tasks. It accelerates layers such as convolutional and recurrent layers, speeding up the training, fine-tuning, and deployment of neural networks.

- cuFFT: The CUDA Fast Fourier Transform library computes Fast Fourier Transforms on the GPU. It is widely used to process signal and image data for tasks such as spectral analysis and image filtering.

- cuSPARSE: The CUDA Sparse Matrix library provides efficient implementations of sparse matrix-vector and sparse matrix-matrix operations, which are critical for simulations, optimization, and graph algorithms.

- cuRAND: The CUDA Random Number Generation library generates random numbers on the GPU, supporting simulations and statistical analysis.

- CUDPP: The CUDA Data Parallel Primitives Library provides data-parallel building blocks, such as sorting, scan, and reduction, that many algorithms rely on.

- cuSOLVER: The CUDA Solver library provides GPU-accelerated solvers for dense and sparse linear systems, supporting scientific simulations and engineering tasks that involve solving large systems of equations.
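To make the cuBLAS entry concrete, the sketch below is a plain C++ reference for the BLAS "axpy" operation (y = alpha * x + y), which cuBLAS provides on the GPU as `cublasSaxpy(handle, n, &alpha, x, incx, y, incy)`. The helpers `saxpy_reference` and `max_abs_diff` are hypothetical names; a CPU reference like this is a common way to check GPU results after copying them back to the host. As a simplifying assumption, only positive strides are handled.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// CPU reference for y = alpha * x + y, the operation cublasSaxpy performs on
// device memory. incx/incy are the strides between consecutive elements.
// Simplification: only positive strides are supported here.
void saxpy_reference(int n, float alpha,
                     const std::vector<float>& x, int incx,
                     std::vector<float>& y, int incy) {
    assert(incx > 0 && incy > 0);
    assert(n <= 0 || (static_cast<std::size_t>(n - 1) * incx < x.size() &&
                      static_cast<std::size_t>(n - 1) * incy < y.size()));
    for (int i = 0; i < n; ++i)
        y[static_cast<std::size_t>(i) * incy] += alpha * x[static_cast<std::size_t>(i) * incx];
}

// Largest element-wise difference, e.g. between a GPU result copied back to
// the host and the CPU reference above.
float max_abs_diff(const std::vector<float>& a, const std::vector<float>& b) {
    assert(a.size() == b.size());
    float m = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i)
        m = std::max(m, std::fabs(a[i] - b[i]));
    return m;
}
```

For example, with x = {1, 2, 3, 4}, y = {10, 20, 30, 40}, and alpha = 2, the reference yields y = {12, 24, 36, 48}; a cuBLAS result for the same inputs would be compared against it with `max_abs_diff` and a small tolerance.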

Benefits of CUDA Libraries

- Performance Boost: CUDA libraries are tuned for NVIDIA GPU architectures, which can deliver significant speedups across many types of computation and fast processing of data-heavy workloads

- User Efficiency: Domain-specific, pre-built functions already encapsulate complex, optimized code, so users save the time of writing and tuning that code themselves

Differences Between the CUDA Driver, CUDA Toolkit, and CUDA Libraries

Understanding the fundamental differences between the CUDA elements helps you use each one to your advantage. Below are the core differences between the three elements.

Focus and Responsibility

- CUDA Driver manages the low-level GPU interactions, kernel execution, and synchronization

- CUDA Toolkit is the development ecosystem providing tools, APIs, and libraries

- CUDA Libraries provide pre-built functions for targeted computations, streamlining development

Abstraction Levels

- CUDA Driver operates closest to the hardware, managing data movement and task coordination between CPU and GPU

- CUDA Toolkit abstracts these hardware interactions through the Runtime API and Driver API, letting developers choose how much control they need

- CUDA Libraries offer the highest level of abstraction using pre-built functions

User Interaction

- CUDA Driver is reached through API calls that issue GPU management commands

- CUDA Toolkit lets users choose between high-level and low-level APIs

- CUDA Libraries let users call pre-built, optimized functions instead of writing and tuning GPU code themselves

CUDA Toolkit and Driver Compatibility

The CUDA Toolkit and the CUDA Driver carry separate version numbers. Starting with CUDA 11, minor version compatibility lets an application built with a toolkit from one major release (for example, any CUDA 11.x toolkit) run, with a limited feature set, on a system whose driver meets the minimum required version for that major release. The minimum required driver can therefore be older than the driver bundled with the toolkit, but both must belong to the same major release. The CUDA Driver is also backward compatible: an application compiled with an older CUDA Toolkit version can run on the latest driver releases.
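The compatibility rules above can be summarized as a small decision function. This is a simplified sketch: it assumes the application has already queried both versions with the CUDA Runtime API calls `cudaDriverGetVersion` and `cudaRuntimeGetVersion` (not shown, since they need a CUDA installation), which report versions encoded as 1000 * major + 10 * minor. The names `CudaVersion`, `decode_cuda_version`, `Compat`, and `check_compat` are hypothetical, and the check ignores the exact minimum driver version NVIDIA documents for each major release.

```cpp
#include <cassert>

// cudaDriverGetVersion() and cudaRuntimeGetVersion() both report versions
// encoded as 1000 * major + 10 * minor (for example, 12020 means 12.2).
struct CudaVersion { int major; int minor; };

CudaVersion decode_cuda_version(int encoded) {
    assert(encoded >= 0);
    return CudaVersion{encoded / 1000, (encoded % 1000) / 10};
}

enum class Compat { Full, MinorVersionCompat, Unsupported };

// Simplified check comparing the CUDA version supported by the installed
// driver with the runtime version the application was built against.
// Assumption: it does not verify the documented minimum driver branch.
Compat check_compat(int driver_encoded, int runtime_encoded) {
    if (driver_encoded >= runtime_encoded)
        return Compat::Full;  // backward compatibility: newer driver, older toolkit
    const CudaVersion drv = decode_cuda_version(driver_encoded);
    const CudaVersion rt = decode_cuda_version(runtime_encoded);
    if (drv.major == rt.major && rt.major >= 11)
        return Compat::MinorVersionCompat;  // same major release, limited feature set
    return Compat::Unsupported;             // different major release, or pre-CUDA 11
}
```

For example, an application built against CUDA 12.2 (12020) on a driver reporting 12.0 (12000) falls under minor version compatibility, provided the driver meets the minimum version for CUDA 12.x, while the same application on a driver reporting 11.8 (11080) is unsupported.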

Conclusion

In this article, you learned the fundamental concepts of CUDA and its three core elements: the CUDA Driver, the CUDA Toolkit, and the CUDA Libraries. Understanding how these elements differ helps you use each tool efficiently, and understanding how their versions interact explains why it is necessary to choose compatible CUDA Toolkit and Driver versions.